Generative adversarial networks (GANs) are the next step in deep learning evolution, and while they hold great promise across several application domains, there are major challenges in both hardware and frameworks.

Nonetheless, we expect to see this approach to machine learning take hold first in enterprise areas where image and video are central before moving out to touch other areas where, for example, simulated datasets can be used to feed into broader HPC applications. It is hard to say when the co-evolution of infrastructure and software will hit a point where we see more use cases but the capabilities of GANs are compelling and far-reaching enough to warrant very directed work toward getting the full stack ready for this next stage of AI.

Before we talk about the challenges in more depth, for those not familiar, why are GANs worth all of this effort when there are already several mature approaches to deep learning? It turns out, these networks go beyond recognition and classification and, as the name implies, actually generate output based on a reference or sample. And as many already know, the results are convincing variations of reality based on real images or streams of images in a video sequence.

Despite the added capability, functionally speaking, a GAN is not much different from other convolutional neural networks. The core computation of the discriminator in a GAN is similar to a basic image classifier, and the generator is also similar to other convolutional neural networks, albeit one that operates backwards, building an image up from a compact input rather than reducing an image to a label.

These two competing deep learning forces, the generator network and the discriminator network, are based on existing concepts in machine learning, but they work together in a new way. The generator takes an input (random noise or, in conditional setups, some reference data) and does its best to synthesize an image of its own, then passes that off to the discriminator, which gives a simple “yes” or “no” equivalent on whether it looks “real” or not. The generator learns its weaknesses from the discriminator, which is a noteworthy feature, but it does make training more computationally intensive and iterative, and the networks themselves are not without some challenges.
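The alternating generator and discriminator updates described above can be sketched in a few lines. This is a toy illustration only, not the setup used by anyone quoted in this article: it works on single numbers instead of images, and the names `train`, `a`, `b`, `w`, `c`, the target distribution, and the learning rate are all assumptions made for the example.

```python
import math
import random


def sigmoid(t):
    # Numerically stable logistic function.
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


def train(steps=200, batch=64, lr=0.05, seed=0):
    """Toy 1-D GAN. Generator: x = a*z + b. Discriminator: D(x) = sigmoid(w*x + c)."""
    rng = random.Random(seed)
    a, b = 1.0, 0.0  # generator parameters (hypothetical starting point)
    w, c = 0.0, 0.0  # discriminator parameters
    for _ in range(steps):
        real = [rng.gauss(4.0, 0.5) for _ in range(batch)]  # assumed "real" data
        noise = [rng.gauss(0.0, 1.0) for _ in range(batch)]
        fake = [a * z + b for z in noise]

        # Discriminator step: minimize -log D(real) - log(1 - D(fake)).
        gw = gc = 0.0
        for x in real:
            s = sigmoid(w * x + c)
            gw += -(1.0 - s) * x
            gc += -(1.0 - s)
        for x in fake:
            s = sigmoid(w * x + c)
            gw += s * x
            gc += s
        w -= lr * gw / batch
        c -= lr * gc / batch

        # Generator step: minimize -log D(fake), using the discriminator's
        # verdict as the only training signal.
        ga = gb = 0.0
        for z in noise:
            s = sigmoid(w * (a * z + b) + c)
            dx = -(1.0 - s) * w  # gradient of the loss w.r.t. the fake sample
            ga += dx * z
            gb += dx
        a -= lr * ga / batch
        b -= lr * gb / batch
    return {"a": a, "b": b, "w": w, "c": c}


params = train(steps=20)
print(params)
```

Every iteration runs both networks forward and backward, which is where the extra compute relative to training a single classifier comes from. Real image GANs replace the two scalar models with deep convolutional networks, but the loop has the same shape.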

As clean as all that might sound, in practice, it is not always so clear-cut. On the framework side, there is the problem of unstable training, with mode collapse (where the generator settles on a narrow set of outputs) as its best-known symptom, which undermines that training and feedback process. In essence, one network can overpower the other. For instance, the generator might make images the discriminator cannot tell apart from real ones, even when those generated images do not look like they should based on the dataset distribution. In such a case, the generator stops improving because it is not getting useful feedback about what to do better.
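One informal way to notice when one network is overpowering the other is to track how often the discriminator is right on each batch. The sketch below is a heuristic under stated assumptions, not a standard diagnostic: the function name `balance_report` and the `threshold` and `margin` values are hypothetical choices for illustration.

```python
def balance_report(real_scores, fake_scores, threshold=0.5, margin=0.1):
    """Summarize who is winning on one batch.

    real_scores / fake_scores are the discriminator's probabilities that
    real and generated samples, respectively, are real.
    """
    real_acc = sum(s >= threshold for s in real_scores) / len(real_scores)
    fake_acc = sum(s < threshold for s in fake_scores) / len(fake_scores)
    accuracy = (real_acc + fake_acc) / 2

    if accuracy >= 1.0 - margin:
        # Discriminator is almost always right: the generator gets a very
        # weak learning signal.
        verdict = "discriminator dominates"
    elif fake_acc <= margin:
        # Nearly every fake is accepted as real: the discriminator's
        # feedback no longer tells the generator what to fix.
        verdict = "generator dominates"
    else:
        verdict = "balanced"
    return verdict, accuracy


print(balance_report([0.7, 0.4], [0.3, 0.6]))
```

A run that stays in either "dominates" state for many batches is a hint that learning rates or update ratios need tuning. It says nothing by itself about whether the generated images are any good.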

The GAN overpowering issue is one that can be tuned over time but the hardware side of the GAN challenge is a bit more difficult to contend with.

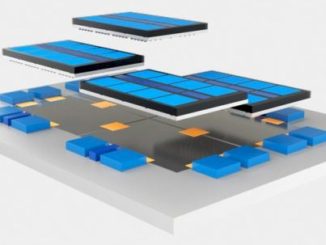

The problem is that training even a simple neural network takes some computational effort, and training this kind of dueling network puts quite a bit more strain on the system, particularly in terms of memory. We will not even pretend this kind of training work is done on CPU-only machines for the moment, which brings up the limited memory inside a GPU. Even a batch size of one can quickly saturate memory on a Volta GPU. It is possible to string a bunch of GPUs together into a shared memory space using NVSwitch, for example, but that just moves the bottleneck down to the interconnect, since there is a great deal of GPU-to-GPU transfer as the discriminator keeps feeding guidance back to the generator.

“For GANs the computational limits are not because this is an entirely new way of solving an ML problem, it is because the models are large and complex and take a lot of communication and memory. The core components are already used in computer vision and for other tasks like semantic segmentation or depth estimation.”

“GANs do require some real computational horsepower and things are changing in terms of infrastructure,” says Bryan Catanzaro, VP of Applied Deep Learning at Nvidia. “You need to have more math throughput because some of these models can be quite large with a lot of parameters, so training takes quite a lot of work—and a lot of memory. Many of the GANs we train are memory limited where even just training a batch size of one or two will fill the entire GPU memory just holding one or two examples since the models are so large.”
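A back-of-the-envelope estimate shows how a batch of one or two can fill a GPU. The model size, layer count, and resolution below are invented for illustration and do not describe any Nvidia model. The 16 GB figure is the smaller of the two memory configurations the Volta V100 shipped with. The function name `training_memory_gib` is likewise hypothetical.

```python
def training_memory_gib(params, activation_values_per_sample, batch_size,
                        bytes_per_value=4, optimizer_states=2):
    """Rough training footprint in GiB (ignores workspace and fragmentation)."""
    # Weights + gradients + optimizer state (Adam keeps two extra values
    # per parameter, hence the default of 2).
    model_bytes = params * bytes_per_value * (2 + optimizer_states)
    # Activations kept for the backward pass grow linearly with batch size.
    activation_bytes = activation_values_per_sample * batch_size * bytes_per_value
    return (model_bytes + activation_bytes) / 2**30


GPU_MEMORY_GIB = 16  # smaller Volta V100 configuration

# Assumed example: 100M parameters, 20 stored feature maps of 64 channels
# at 2048 x 1024 resolution, 32-bit floats.
params = 100_000_000
activations = 20 * 64 * 2048 * 1024

for batch in (1, 2):
    need = training_memory_gib(params, activations, batch)
    fits = "fits" if need <= GPU_MEMORY_GIB else "does not fit"
    print(f"batch {batch}: {need:.2f} GiB, {fits} in {GPU_MEMORY_GIB} GiB")
```

Under these assumptions the activations alone cost 10 GiB per example, so a single sample fits on the card and a second one does not. High-resolution feature maps, not parameter counts, dominate the total.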

It does help to have a bigger system when training, he adds, and it is also valuable to split the batches across multiple GPUs, but that stresses even a robust GPU-centric interconnect like NVLink on the DGX-1 that he and his team use for their work on video GANs. On that note, Catanzaro’s work on GANs for interactive video generation for games showcases exactly what these neural networks can do: in near-real time, a GAN generates an environment on the fly. He says that the DGX-2, once his team has one at the ready, will speed their work considerably. “Machines were ready for the GANs of 2014 when they were new but the machines have improved considerably since then and so have the GAN results.”
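To see why splitting batches across GPUs leans so heavily on the interconnect, consider plain data parallelism: each GPU computes gradients on its slice of the batch, and the gradients are then averaged across all GPUs before every weight update. The sketch below assumes a ring all-reduce for that averaging, in which each GPU sends 2(N-1)/N times the gradient size per step. The function names and the model sizes are hypothetical, and the article does not say which scheme Nvidia's team uses.

```python
def average_gradients(per_gpu_grads):
    """Element-wise mean of the gradient vectors computed on each GPU."""
    n = len(per_gpu_grads)
    return [sum(values) / n for values in zip(*per_gpu_grads)]


def ring_allreduce_bytes_per_gpu(param_count, n_gpus, bytes_per_value=4):
    """Bytes each GPU sends per all-reduce, assuming a ring algorithm."""
    if n_gpus < 2:
        return 0.0  # nothing to exchange on a single device
    return 2 * (n_gpus - 1) / n_gpus * param_count * bytes_per_value


# Two GPUs, each holding gradients for the same two parameters.
print(average_gradients([[1.0, 2.0], [3.0, 4.0]]))

# Assumed sizes: 100M-parameter generator plus 50M-parameter discriminator,
# both synchronized once per training step across 8 GPUs.
traffic = ring_allreduce_bytes_per_gpu(100_000_000 + 50_000_000, 8)
print(f"{traffic / 1e9:.2f} GB sent per GPU per step")
```

With these made-up sizes, every GPU pushes about 1.05 GB over the links on each step. When memory limits the batch to one or two examples per GPU, there is little computation per step to hide that transfer behind.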

The large model size problem on GPUs is particularly acute in Nvidia’s work on video synthesis GANs. “We care about graphics; we are interested in using these to generate video games as a better way to create content, to make it easier to create virtual worlds by training on video from the real world. But this is complicated, video GANs especially, because we are not only generating an image, but a sequence of images that need to be related to one another. The memory requirements are higher, so are the computational requirements.”

This generation of sequences was at the heart of a recent conversation we had about the potential for GANs in drug discovery. As we noted there, a reinforcement learning component was required on top of the dueling networks and the discriminator’s feedback, which upped the infrastructure requirements. Drug discovery startup Insilico Medicine has had to use high-end GPU clusters to fit its models in the system and, while it has had some success, there is an unending need for more compute, more memory, and better memory bandwidth.

Before GANs at any scale can filter into areas beyond image and video generation and into broader use cases in scientific, technical, or enterprise realms, both the hardware and software limitations need to be resolved and even then, Catanzaro says, it is still somewhat early to say that GANs will filter into these other domains anytime soon, despite the interest.

“I have seen people try, and we have done some work here, for text and audio applications for GANs but to be honest, the results have not been as compelling as in image and video. That is not to say they won’t work or people won’t figure things out, after all that’s how machine learning works—it’s hard to prove what is going to work before you try. For now though, I’ve seen the most success in the visual domain, so it is not surprising to see this taking hold in medical imaging, for example,” Catanzaro adds.

While the hope might be there for some startups to begin exploring a broader, more real-world application space beyond image and video in gaming or content generation, there is still some maturing to do on both sides of the platform. Each day seems to yield some new academic research work or hints about startups looking to these networks (and even, we hear tell, processors and systems configured for just this task) but without high-value applications that can function on existing hardware, these folks might be putting the cart before the horse. But as we have learned watching the AI space unfold from an infrastructure point of view (which requires a close eye on the evolution of frameworks like GANs), optimizations and tweaks can bring a far-off technology into closer sight in a very short period of time.

It should come as no surprise that Nvidia is doing some of the pioneering work on GANs on their hardware since GPUs are the dominant platform for training—and this is a challenging training task, even for their best DGX systems. And it should also not be surprising since Nvidia has a vested interest in the future of graphics and gaming and the performance of both. Having auto-generating content that executes on the fly on their devices could be a game-changer (no pun intended). But from our perspective having watched the evolution of GPUs from consumer gaming devices to powering top supercomputers as accelerators, the real lesson is not to discount a technology just because its pervasive application is to deliver a better gaming experience.

In short, expect to see a lot more about GANs this year, and not just in video and image creation. But when you do, ask questions about how capable the machines are for the models needed, and reverse it for good measure: how robust are the models going to be on hardware that exists now?

This and related topics about the changing hardware landscape for deep learning will be the subject of some of our live interviews at The Next AI Platform event in San Jose, CA on May 9. No marketing, no PowerPoints, just the in-depth questions and answers you expect from TNP in a live format (with a happy hour to boot).
