Hyperconverged infrastructure has been with us for a while now, and it looks like the technology is still a growing market, if analyst figures can be believed. A recent survey from IDC found that revenue from hyperconverged systems grew 78.1 percent year-on-year for the second quarter of 2018, generating $1.5 billion worth of sales, while the hyperconverged segment now accounts for at least 41 percent of the overall converged systems market.

What this tells us is that hyperconverged infrastructure (HCI) is turning out to be an attractive proposition for a much broader range of use cases within organizations than the niche areas it was originally created to address, which included providing the infrastructure for operating a virtual desktop environment for employees.

There are numerous reasons for this. The hyperconverged model integrates both compute and storage resources into a single appliance-like node, which serves as a kind of building block for infrastructure; to scale, you just add more nodes, which delivers more compute power and more storage simultaneously. This is because storage is pooled across all the nodes, doing away with the need for a SAN, and with a clever software management layer taking care of everything, HCI can be less costly to operate than traditional infrastructure.

However, criticisms have been levelled at HCI, including that it is less flexible than traditional infrastructure and that users may end up paying for resources they do not need, because scaling means adding entire extra nodes when the user may simply want more storage for an application or service they are running. Another criticism is that the tight integration between hardware and software can lead to vendor lock-in.
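The over-provisioning complaint is easy to see with a little arithmetic. The sketch below is purely illustrative: the `NodeSpec` type, the per-node core and capacity figures, and the workload numbers are all hypothetical, and it ignores replication overhead and minimum cluster sizes. It simply shows that when capacity can only be bought in whole nodes, the more demanding resource sets the node count and the other resource is left partly idle.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class NodeSpec:
    """Hypothetical fixed node configuration: every node adds the same resources."""
    cores: int
    storage_tb: int


def nodes_required(spec: NodeSpec, cores_needed: int, storage_tb_needed: int) -> int:
    """Whole nodes needed to satisfy both the compute and the storage demand."""
    by_compute = math.ceil(cores_needed / spec.cores)
    by_storage = math.ceil(storage_tb_needed / spec.storage_tb)
    # Whichever resource is scarcer dictates how many nodes must be bought.
    return max(by_compute, by_storage)


def stranded_capacity(spec: NodeSpec, cores_needed: int, storage_tb_needed: int):
    """Return (nodes, unused cores, unused TB) for a given demand."""
    nodes = nodes_required(spec, cores_needed, storage_tb_needed)
    unused_cores = nodes * spec.cores - cores_needed
    unused_tb = nodes * spec.storage_tb - storage_tb_needed
    return nodes, unused_cores, unused_tb


# A storage-heavy workload on made-up 32-core, 20 TB nodes.
spec = NodeSpec(cores=32, storage_tb=20)
print(stranded_capacity(spec, cores_needed=64, storage_tb_needed=100))
```

In this made-up case, storage demand forces five nodes even though two would cover the compute requirement, leaving 96 cores paid for but unused.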

Partly for these reasons, there has been a move of late towards hyperconverged platforms becoming a software-only play, giving customers greater freedom of choice regarding the hardware they run it on. Nutanix, one of the leaders in HCI, went down this road at least a year ago, allowing customers to use either its hardware or qualified third-party systems. VMware has also been offering a similar option based on its vSAN software-defined storage, which buyers can run on qualified vSAN Ready Nodes.

Last year also saw the entry of Red Hat into this arena, with the company’s usual rallying cry that community-built software solutions are more open and less risky than proprietary platforms. It pushed out its own stack, Red Hat Hyperconverged Infrastructure for Virtualization, which has just recently been given an upgrade to version 1.5.

So why did Red Hat decide to enter what is an increasingly crowded market? According to the firm, one of the reasons was that it discovered that some customers were already trying to implement hyperconverged infrastructure themselves by cobbling together Red Hat products to deliver the required functionality, leading to a custom-made solution that may not be easy to maintain or update in future.

Red Hat also claims that a software-only solution offers users the flexibility to scale compute resources and storage independently, and sees itself as offering an alternative to a “more expensive VMware lock-in environment” or “transitioning from it under professional guidance” from Red Hat itself.

As is common with many Red Hat products, Red Hat Hyperconverged Infrastructure for Virtualization (or RHHI-V as the company labels it) comprises a number of separate packages bundled together. In this case, the main pillars are its Red Hat Enterprise Linux operating system, Red Hat Virtualization, and Red Hat Gluster Storage, now combined with the Open Virtual Network (OVN) stack for software-defined networking functions, and Red Hat Ansible Automation for provisioning and management. (Companies need to take their names out of their product brands. This is ridiculous. We know it is Red Hat. — TPM.)

OVN comes from the Open vSwitch team, whose work began at Nicira before that company was acquired by VMware many years ago, providing the foundation for VMware’s NSX virtual network technology. OVN enables the creation of virtual Layer 2 and Layer 3 overlay networks, and can also be used with the OpenStack cloud framework and Red Hat’s OpenShift container platform.

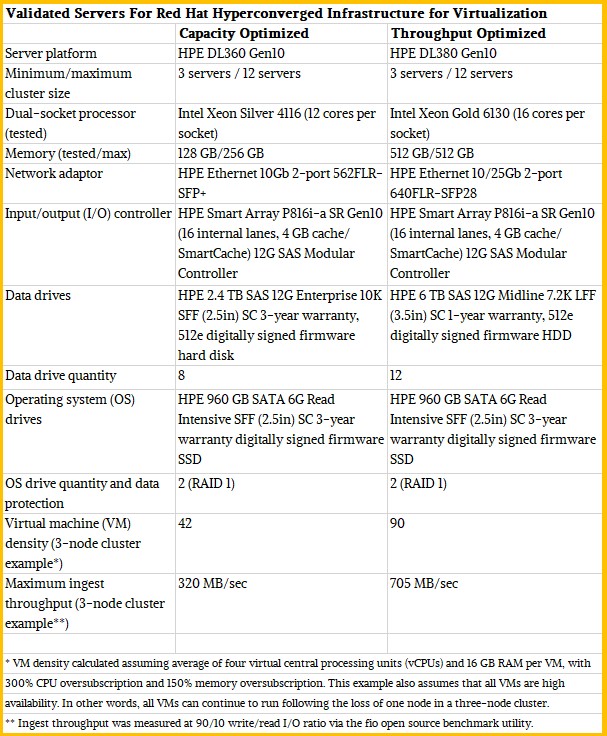

With the RHHI-V 1.5 release, Red Hat has detailed some validated server configurations that customers can use as a reference. These were produced by conducting performance tests using HPE ProLiant server hardware, the DL360 for capacity-optimized scenarios and the DL380 for throughput-optimized scenarios. Both of these systems are widely deployed in enterprises, and Red Hat used the current Gen10 hardware, which is based on Intel’s “Skylake” Xeon SP processors. However, Red Hat states that it carried out the evaluations with the anticipation that similarly configured industry-standard servers from other vendors would yield broadly similar results.

Red Hat states that its hyperconverged platform was originally conceived of as a solution chiefly for remote office/branch office deployments, which is a common target for hyperconverged systems owing to their appliance-like nature and simplified management. But since its launch last year, the firm says it has seen demand from customers to support more mission-critical workloads and to make it more of a mainstream data center platform.

This has led to version 1.5 of the platform featuring support for more enterprise-oriented capabilities such as deduplication and compression to reduce redundancy at the storage layer. This is actually delivered through a feature added in RHEL earlier this year known as Virtual Data Optimizer, a kernel module which adds data reduction capabilities to the Linux block storage stack to transparently shrink data as it is being written to storage. (Red Hat gained this when it acquired Permabit Technology Corporation in 2017.)

According to Red Hat, Virtual Data Optimizer uses three techniques to reduce storage space. The first is zero-block elimination, whereby blocks that consist entirely of zeros are identified and recorded only in metadata. The second is deduplication, which works in a similar way, with redundant blocks of data being replaced by a metadata pointer to the original copy of the block. Finally, LZ4 compression is applied to the individual data blocks.
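To make those three steps concrete, here is a toy sketch of the idea in Python. It is not how Virtual Data Optimizer is implemented, which is a kernel module working on the block layer. The `ToyDataReducer` class, the 4 KB block size, and the SHA-256 fingerprint are assumptions made for illustration, and zlib from the standard library stands in for LZ4.

```python
import hashlib
import zlib

BLOCK_SIZE = 4096  # assumed block size for this sketch


class ToyDataReducer:
    """Toy in-memory block store illustrating the three reduction steps."""

    def __init__(self):
        self.physical = {}  # fingerprint -> compressed unique block
        self.logical = []   # per logical block: None (zero block) or a fingerprint

    def write(self, data: bytes) -> None:
        for offset in range(0, len(data), BLOCK_SIZE):
            # Pad a short final block with zeros so every block is full size.
            block = data[offset:offset + BLOCK_SIZE].ljust(BLOCK_SIZE, b"\0")

            # 1. Zero-block elimination: record in metadata only, store nothing.
            if not any(block):
                self.logical.append(None)
                continue

            # 2. Deduplication: a block already seen becomes a metadata pointer.
            fingerprint = hashlib.sha256(block).digest()
            if fingerprint not in self.physical:
                # 3. Compression of each unique block (zlib standing in for LZ4).
                self.physical[fingerprint] = zlib.compress(block)
            self.logical.append(fingerprint)

    def read(self) -> bytes:
        out = bytearray()
        for fingerprint in self.logical:
            if fingerprint is None:
                out += bytes(BLOCK_SIZE)
            else:
                out += zlib.decompress(self.physical[fingerprint])
        return bytes(out)

    def stored_bytes(self) -> int:
        """Bytes held for block payloads (metadata is not counted)."""
        return sum(len(payload) for payload in self.physical.values())


reducer = ToyDataReducer()
payload = bytes(BLOCK_SIZE) + b"A" * BLOCK_SIZE * 2 + b"B" * BLOCK_SIZE
reducer.write(payload)
print(len(payload), "logical bytes ->", reducer.stored_bytes(), "stored bytes")
```

Of the four logical blocks written here, one is all zeros and one is a duplicate, so only two unique blocks are compressed and kept.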

Also added in this version of RHHI-V is support for GPU virtualization, which enables GPU acceleration to be used in virtual machines for a number of workloads, from handling 3D graphics to number crunching in demanding applications such as computational science, oil and gas exploration, and the increasingly important AI and machine learning tasks.

The move to become more enterprise focused is also helped by Ansible Automation, thanks to Red Hat’s acquisition of Ansible (the firm) several years back. Ansible provides configuration management, application deployment and task automation capabilities, and can also be used for orchestration where tasks must be performed in the correct sequence in order to deliver the desired end state. Red Hat says that it provides Ansible playbooks to support capabilities such as remote replication and recovery of RHHI-V environments, for example.

If Red Hat is aiming to compete on a level playing field with the mainstream HCI vendors, as it appears to be claiming, then the new features detailed for RHHI-V version 1.5 can be viewed as an attempt to catch up with its rivals, some of which have been developing their platforms for many years. SimpliVity (now part of HPE), for example, has for some time boasted a long list of capabilities, including data dedupe and compression, backup and recovery, WAN optimization and a measure of hybrid cloud support such as the ability to use AWS as a target for off-site backups.

This brings us to the cloud, of course, and many of Red Hat’s rivals, such as Nutanix and VMware, have moved on from a focus on pure HCI to a hybrid cloud proposition that links their platform with resources running in one (or more) of the public cloud platforms. Nutanix has its Xi Cloud Services that include cloud-based disaster recovery, multi-cloud governance and monitoring tools, while VMware has a slew of cross-cloud tools, including its VMware Cloud on AWS and vRealize management suite. Red Hat’s equivalent management offering here is CloudForms, which can manage virtualized infrastructure (based on Red Hat, VMware or Microsoft platforms), public cloud platforms such as AWS, Azure and Google Cloud, and container-based environments such as its OpenShift platform.

But Red Hat still seems to regard RHHI-V as a straight on-premises virtual infrastructure play, and rather confusingly markets a separate HCI platform for cloud deployment, called Red Hat Hyperconverged Infrastructure for Cloud. This combines its OpenStack distribution with Red Hat Ceph Storage, both running on the same server nodes.

As is the norm for hyperconverged systems, RHHI-V requires a deployment to consist of a cluster of at least three nodes, in order to provide the required redundancy for the software-defined storage layer. In fact, last year’s release specified that a cluster must consist of exactly three, six, or nine nodes, but in version 1.5, the maximum cluster size has now been increased to 12 nodes, as can be seen from the table above. This is dwarfed by VMware’s platform, which now supports up to 64 nodes per cluster.
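The sizing rule can be written down as a simple check. This is only a sketch: the function name and parameters are hypothetical, and it assumes that version 1.5 still requires clusters to grow in steps of three nodes, which the figures quoted here suggest but do not state outright.

```python
def is_supported_cluster_size(nodes: int, max_nodes: int = 12, step: int = 3) -> bool:
    """Return True if the node count is a multiple of `step` between `step` and `max_nodes`.

    Assumption: clusters grow three nodes at a time in both releases;
    max_nodes=9 models last year's release and max_nodes=12 models version 1.5.
    """
    return step <= nodes <= max_nodes and nodes % step == 0


# Sizes allowed under each assumed limit.
print([n for n in range(1, 16) if is_supported_cluster_size(n, max_nodes=9)])
print([n for n in range(1, 16) if is_supported_cluster_size(n, max_nodes=12)])
```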

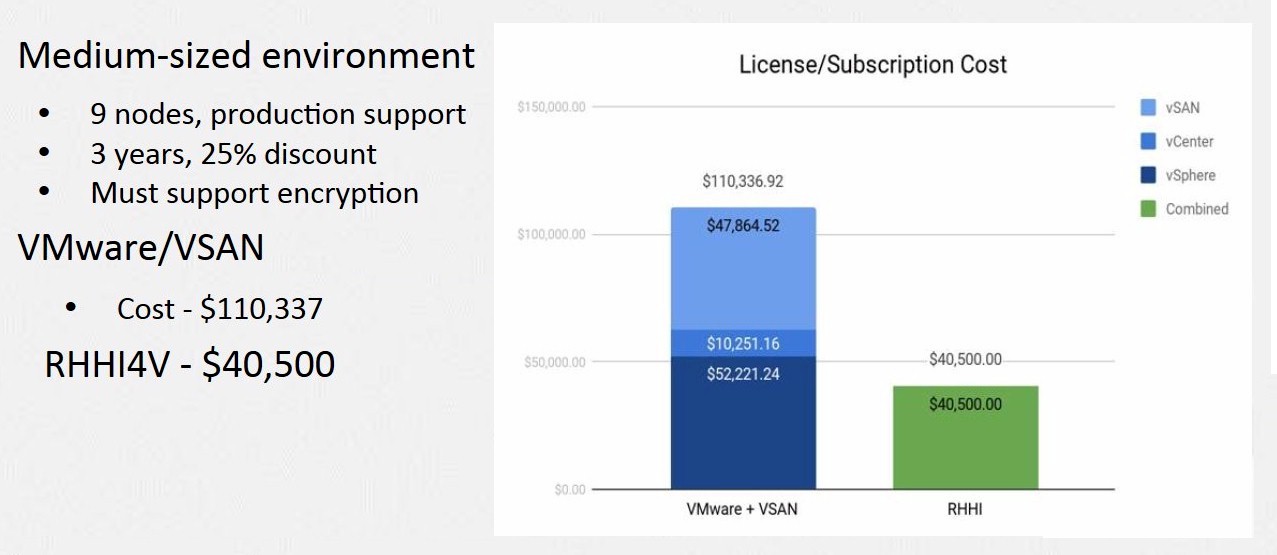

On the subject of VMware, Red Hat is now promoting RHHI-V as the open alternative to proprietary HCI stacks such as VMware’s, claiming cost savings and a greater freedom to innovate as part of its pitch to potential customers. On the cost side, Red Hat recently showed figures comparing the cost of a nine-node deployment over three years when licensing VMware’s platform against a RHHI-V subscription on the same hardware, and claims that choosing VMware would cost a shade over $110,000 against a price of just over $40,000 for its own stack (see the accompanying chart). These figures both exclude the cost of the hardware.

But a straight comparison on cost in this way is somewhat academic, unless a potential buyer has somehow not ventured much into virtualization, or is considering all available options for some new-build infrastructure. The chances are pretty low that any IT shop that has already invested heavily in standardising on something such as VMware’s platform for its infrastructure services is going to suddenly switch to Red Hat without a very compelling reason, and even the savings claimed by Red Hat may not tip the scales once the cost and complexity of any migration process are factored in. In light of this, it seems probable that despite Red Hat’s quest to convince organisations to migrate their virtual infrastructure to Red Hat, the updated RHHI-V is likely to appeal mostly to those that are already Red Hat customers and are looking for a simpler way to deploy and manage virtual infrastructure.
