Having multiple related but distinct product lines turns a company into an economic engine that runs a lot smoother than it would with a single cylinder.

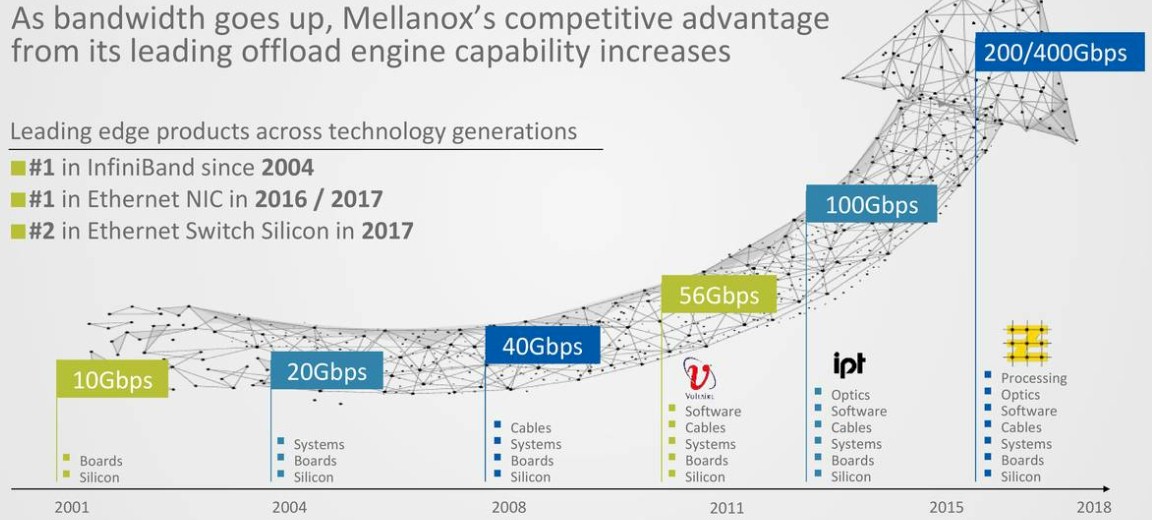

Over the past two decades, Mellanox has evolved from a supplier of InfiniBand switch and adapter ASICs into a company that doesn’t just sell high performance InfiniBand products, but also gear and software that adhere to the Ethernet standard, which is far more ubiquitous in the datacenters of the world. Not only does Mellanox make the chips behind these products, but it also sells raw boards to those who want to create their own gear (as hyperscalers and cloud builders often do), as well as finished switches and adapters that can just plug into existing networks and systems for those who don’t want or need to make modifications to them. And on top of that, Mellanox has moved into optical transceivers and cables and into processors aimed at accelerating storage and network functions, freeing up compute on the network to do its real job.

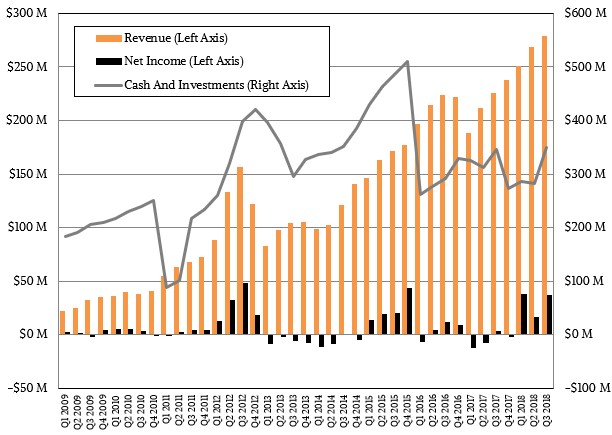

It is this growing mix of products, as well as its ability to sell a complete network stack from the server ports all the way to the spine and director switch, that is going to make Mellanox a $1 billion company this year. And that revenue stream and valuation are probably why Mellanox is rumored to have hired an investment banker and is reportedly exploring its options, which we presume means either making an acquisition or being acquired itself. Marvell Technology, the owner of Cavium, which supplies ThunderX2 Arm server chips and XPliant programmable Ethernet switches, approached Mellanox last year to buy it, but Mellanox said it was not interested. Mellanox has a market capitalization of $4.6 billion as we go to press, so it would not come cheap, we suspect.

In the Mellanox business, there is always a variant of InfiniBand that is waxing and another one that is waning, and right now Mellanox is making the transition from 100 Gb/sec EDR InfiniBand adapters and switches to 200 Gb/sec HDR InfiniBand products. The 200 Gb/sec adapters really require PCI-Express 4.0 x16 peripheral slots to handle their bandwidth. So it is not much of a surprise that HDR InfiniBand, sold under the Quantum brand name and revealed conceptually way back in November 2016 with initial products announced for preview a year later, has not taken off yet, and that EDR InfiniBand, with some help from slower 56 Gb/sec and 40 Gb/sec legacy InfiniBand speeds, is holding down the fort. Network upgrades tend to be timed with processor upgrades, and AMD and Intel have their respective future “Rome” Epyc and “Cascade Lake” Xeon processors due sometime next year. IBM’s “Nimbus” Power9 chips already support PCI-Express 4.0 and are a little ahead of the market, which is evidenced by the fact that both the “Summit” Power9-Tesla GPU hybrid at Oak Ridge National Laboratory and its related “Sierra” sibling at Lawrence Livermore National Laboratory are using 100 Gb/sec EDR InfiniBand based on the Switch-IB 2 ASICs as opposed to the faster 200 Gb/sec HDR InfiniBand that is based on the Quantum ASICs.
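To put rough numbers on the slot bandwidth argument, here is a back-of-the-envelope sketch in Python. It uses the published per-lane signaling rates for PCI-Express 3.0 and 4.0 (8 GT/sec and 16 GT/sec, both with 128b/130b encoding) and ignores packet and protocol overhead, so real-world throughput would be somewhat lower. The function names are ours and purely illustrative.

```python
# Approximate raw bandwidth of a PCI-Express slot, per direction, in Gb/sec.
# Assumption: only line encoding is accounted for; TLP headers, flow control,
# and other protocol overhead are ignored, so these are upper bounds.

TRANSFER_RATE_GT_S = {"3.0": 8.0, "4.0": 16.0}  # per-lane signaling rate
ENCODING_EFFICIENCY = 128 / 130                 # 128b/130b line encoding


def slot_bandwidth_gbps(generation, lanes=16):
    """Raw one-direction bandwidth of a slot after line encoding."""
    return TRANSFER_RATE_GT_S[generation] * ENCODING_EFFICIENCY * lanes


def can_feed_port(generation, port_gbps, lanes=16):
    """True if the slot's raw bandwidth is at least the network port speed."""
    return slot_bandwidth_gbps(generation, lanes) >= port_gbps


if __name__ == "__main__":
    for gen in ("3.0", "4.0"):
        bw = slot_bandwidth_gbps(gen)
        print(f"PCIe {gen} x16: {bw:.1f} Gb/sec, "
              f"feeds a 200 Gb/sec HDR port: {can_feed_port(gen, 200.0)}")
```

By this estimate a PCI-Express 3.0 x16 slot tops out at roughly 126 Gb/sec per direction, enough for a 100 Gb/sec EDR port but well short of a 200 Gb/sec HDR port, while a 4.0 x16 slot roughly doubles that.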

We expect Quantum InfiniBand to ramp along with the next server upgrade cycle. The “Frontera” system at the Texas Advanced Computing Center, coming in 2019, is an example: it marries servers based on Intel Cascade Lake Xeons to HDR InfiniBand from Mellanox.

In the meantime, the ramp of 25G Ethernet, including ConnectX adapters and related Spectrum switches and LinkX cables, is humming along nicely for Mellanox as hyperscalers and cloud builders adopt offload models for software defined networking and storage and move to 25 Gb/sec, 50 Gb/sec, and sometimes 100 Gb/sec ports on their servers to marry them to their 100 Gb/sec switches. Mellanox sold over 2.1 million of its ConnectX Ethernet adapters in the first three quarters of 2018, thanks to the fact that 25G connectivity is moving beyond the hyperscalers and cloud builders and going mainstream. According to Crehan Research, Mellanox has a 69 percent share of Ethernet adapters running at 25 Gb/sec, 50 Gb/sec, or 100 Gb/sec, which is pretty good market share considering that more companies (Broadcom, Intel, Cavium, and Dell) offer adapters in the 25G generation. This is a lot more competition than Mellanox had with 40 Gb/sec ConnectX adapters, when it was pretty much the only supplier other than Cisco Systems and Intel. (Some ConnectX cards support just Ethernet, and others, the VPI models, support both InfiniBand and Ethernet on the server side.)

In the third quarter, sales at Mellanox rose by 23.7 percent to $279.2 million, and net income grew by nearly a factor of 10 to $37.1 million.

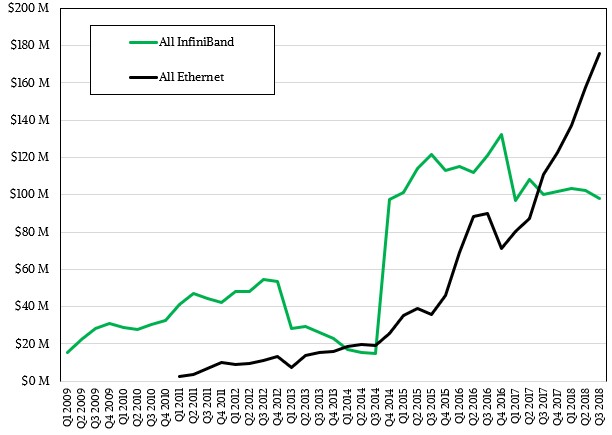

Sales of all Ethernet products came to $175.5 million in revenues, up 58.5 percent, but InfiniBand sales fell by 2.3 percent to $97.9 million. Again, this is predominantly because the HPC centers of the world that can wait until 2019 for new Intel or AMD processors are doing so, timing their purchases to the volume ramp of 200 Gb/sec InfiniBand. Those who can’t wait (and there are always some organizations that can’t wait for the next technology to get here) buy 100 Gb/sec InfiniBand, and that’s still pretty awesome compared to many of the alternatives or what they have installed on their networks today. There are still plenty of 40 Gb/sec InfiniBand compute, storage, and database clusters out there in the world, and they are sorely in need of an upgrade.
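For readers who want to check the arithmetic, the growth rates and revenue figures quoted for the quarter are enough to back out the implied year-ago numbers and the share each protocol has of the total. The short Python sketch below does that. The figures are the ones cited in the text, the helper names are ours, and treating the remainder as "other" revenue is our assumption, not a category Mellanox reports.

```python
# Figures in millions of US dollars, as quoted for the third quarter.
TOTAL_REVENUE = 279.2
TOTAL_GROWTH_PCT = 23.7
SEGMENTS = {
    # name: (revenue, year-on-year growth in percent)
    "Ethernet": (175.5, 58.5),
    "InfiniBand": (97.9, -2.3),
}


def implied_prior(current, growth_pct):
    """Back out the year-ago value from a current value and its growth rate."""
    return current / (1.0 + growth_pct / 100.0)


def share_pct(part, total):
    """Express part as a percentage of total."""
    return 100.0 * part / total


# Assumption: whatever is not Ethernet or InfiniBand is lumped together here.
remainder = TOTAL_REVENUE - sum(rev for rev, _ in SEGMENTS.values())

if __name__ == "__main__":
    print(f"Total: implied year-ago revenue "
          f"{implied_prior(TOTAL_REVENUE, TOTAL_GROWTH_PCT):.1f}")
    for name, (revenue, growth) in SEGMENTS.items():
        print(f"{name}: {share_pct(revenue, TOTAL_REVENUE):.1f}% of total, "
              f"implied year-ago revenue {implied_prior(revenue, growth):.1f}")
    print(f"Unallocated remainder: {remainder:.1f}")
```

Run this way, Ethernet works out to a bit under 63 percent of the quarter's revenue and InfiniBand to about 35 percent, with implied year-ago sales of roughly $111 million and $100 million, respectively.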

In the quarter, Mellanox sold $47 million in raw chips (flat year-on-year), $131 million in finished boards (which includes the ConnectX adapters of course and which was up 57.1 percent), and $53.1 million in switches (down 6 percent). During the quarter only Dell represented more than 10 percent of Mellanox revenue, with $31 million in sales. Another OEM, probably Hewlett Packard Enterprise, came close to the 10 percent mark.

Those Ethernet ConnectX adapter sales are what is driving the Ethernet business to new highs at Mellanox these days, as you can see in the chart above. The notable exception in that chart is the collapse in InfiniBand sales after Hewlett Packard Enterprise misjudged its own needs and overbought in 2013 and had to burn off its inventory, putting a damper on Mellanox for several quarters.

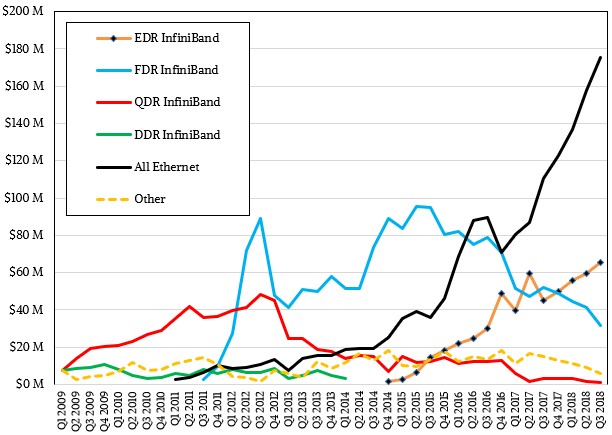

Drilling down into the split among the InfiniBand sales by generation used to be easier, but Mellanox did not provide the breakdown in its call with Wall Street analysts as it usually does. We have done our best to estimate what sales Mellanox had for various speeds of InfiniBand products. Clearly 40 Gb/sec QDR and 56 Gb/sec FDR InfiniBand are on the way out, although some OEM customers are still using these technologies as the backbones of their storage or database clusters and hence they persist.

As you might expect, EDR InfiniBand is following a pretty linear track up and to the right, with a few big deals pushing up at the end of 2016 and in the middle of 2017. (The latter bump is probably the Summit and Sierra machines.) For the reasons we have explained, the older InfiniBand versions have a very long tail of sales, and that is a feature, not a bug.

That said, Eyal Waldman, co-founder and chief executive officer at Mellanox, said on the call with Wall Street analysts that InfiniBand sales were lower than expected as some deals that were expected to close in the third quarter slipped into the fourth. “We believe that InfiniBand sales will grow in the low-single digits in 2018 as we close these deals in the fourth quarter,” Waldman explained. “During the third quarter, we were excited to ship the first 200 Gb/sec HDR InfiniBand solution to customers, driving the next generation of high performance computing, artificial intelligence, cloud, and storage, along with other important applications.”

Wall Street wants to know what the BlueField processors are going to do to generate a new revenue stream for Mellanox. These chips were launched back in 2016 and are used in SmartNICs to give them extra computing oomph above and beyond what is built into the custom processor at the heart of the ConnectX adapters. The combination of the BlueField processor and the ConnectX adapter is apparently particularly popular.

“We are seeing traction at tens of customers designing with BlueField, both as a storage controller and storage platform and as a SmartNIC for security for bare metal cloud infrastructure and so on,” said Waldman. “I can say that our kind of small revenue is growing quarter-over-quarter for our BlueField solutions out there. We expect to take market share away from other system-on-a-chips that are out there for multiple years, and one of the main reasons is that we have ConnectX-5 inside BlueField and that is becoming the most popular architecture for 25 Gb/sec and above. So if you’re using BlueField inside your system-on-a-chip, you have the same NIC as is the most popular for 25 Gb/sec and above, and that’s what you probably want to use so the software is the same, and the offload engines and the acceleration engines are same.”

We will be adding that line to our charts and start tracking it just as soon as Mellanox makes such data available.

Looking ahead, Mellanox expects to book revenue of $280 million to $290 million in the fourth quarter, putting it well north of that long-sought $1 billion annual revenue figure. Looking out into 2019, the expectation is that the Ethernet NIC business will grow at 25 percent or more, Ethernet switches will grow at around 70 percent, and Ethernet LinkX cables will grow at around 50 percent. InfiniBand is expected to grow in the low single digits (including both adapters and switches) and the BlueField processor business is expected to grow in the low-to-middle single digits.
