The fleet of servers in the enterprise datacenters of the world, as distinct from those at hyperscalers, cloud builders, and HPC centers, is getting a bit long in the tooth. A massive upgrade cycle is cresting in the enterprise, and AMD has been waiting five long years for this moment. As the Epyc processors ramp, it wants to capitalize on the opportunity, help companies get more virtual machines for the dollar, and get its server business back on track after that long hiatus.

The timing is coincidental, maybe even lucky considering the issues that Intel is having with security relating to the Foreshadow speculative execution vulnerabilities and with its Xeon processor roadmap for the next two years. But that doesn’t make the opportunity any less real.

A few years back, the lifespan of a server in the field was, on average, around 3.7 years, but according to IDC, that number has now stretched out to nearly six years. Hyperscalers try to turn a third of their fleet every year in a continuous upgrade cycle, and HPC centers tend to get a new cluster every three or four years if they can find the budget for it. Companies always want to work machines until they turn to rust, but at some point, the advantages of a new architecture and the operational cost savings it can bring to bear far outweigh the disruption of having to swap out iron and the capital outlay to invest in the new machines. Enterprise customers, who pay annual subscriptions or perpetual licenses plus annual maintenance for their software instead of hiring armies of software engineers, sometimes pay a much higher cost to keep old servers with lower core counts and memory footprints in the fleet because each machine has a license, not each core. And even if software pricing were based on cores, the operational cost per unit of performance is always higher on older gear than on newer gear.

Declaring War On Xeon, With Virtualization As An Ally

Unlike a lot of hyperscalers and HPC centers and like most public clouds, the enterprise has spent a decade mastering server virtualization hypervisors and packaging up applications into virtual machines to not only drive up utilization on their servers to save money, but also to create a more automated and considerably faster means of standing up systems and application software; processes that took months now take days or hours and sometimes minutes.

These enterprise datacenter servers, which do not include tower machines sold to small and medium businesses or large NUMA machines and proprietary systems, are overwhelmingly two-socket systems; they accounted for about 55 percent of the overall revenues in the server space last year, racking up $44 billion in revenues, and represented about 60 percent of shipments at some 6.5 million units, according to IDC data cited by AMD. But there are five years of their predecessors still in the field, and those that are four, five, or six years old have to be replaced with good virtualization platforms that have lots of cores, lots of memory bandwidth and capacity, and lots of I/O bandwidth, too.

“We are declaring war on the competition when it comes to virtualized infrastructure,” Dan Bounds, senior director of enterprise products at AMD, tells The Next Platform. “If we look at where Epyc has been good to great, there are certain areas that have been apparent. Public cloud has been one, sort of blending into hyperscale and hosting. HPC has been another – they are more willing to get going faster. But now, we are getting to the fat of the bat that from Day One everybody said this is going to be a killer virtualization platform and we are now starting to get critical mass.”

The competitive landscape between Intel Xeons and AMD Epycs comes down to math. Customers are starting to do the math, and they are not necessarily going to wait until the “Rome” kickers to the current “Naples” Epyc chips come out next year. With the hyperscalers, cloud builders, and departmental HPC centers as well as academic HPC centers blazing the trail with the Naples processors, enterprises will be more comfortable choosing the Epyc chips over Xeons, and that is with the Foreshadow vulnerabilities, which affect the Xeons but not the Epycs, taken into account. (More on that in a moment.)

The math starts with what we have known about Epyc for almost two years now: It has more cores in a socket, and for software with socket-based pricing, those extra cores on an Epyc translate directly into lower software costs for roughly equivalent performance. If you compare the typical Xeon middle-bin part to the top-end Epyc, you are talking about roughly twice as many cores per socket, which means one of two things: Companies can deploy a dual-socket machine with 64 cores instead of the 24 cores or 32 cores of a Xeon setup, or they can just deploy a single-socket Epyc and get roughly the same throughput as the popular SKUs in the Xeon SP line. Either way the savings are profound. AMD also does not put caps on memory capacity as Intel does with its Xeon SP Silver and Gold parts; with Intel, you have to pay the roughly $3,000 premium per socket for a Platinum M series chip to get the full-on 1.5 TB of memory per socket, and that ain’t cheap in a market where AMD is selling its top bin 32-core, 2.2 GHz Epyc 7601 for a list price of $4,200 a pop and the next step down is the Epyc 7551 with 32 cores running at 2 GHz and listing for $3,400. The Epyc 7551P, aimed only at single-socket machines, has a much-reduced price of $2,100 with the same 32 cores running at the same 2 GHz. (Two-socket NUMA capability is therefore worth $1,300 per processor, or about 38 percent of the list price of the Epyc 7551.)
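The two-socket premium quoted above is simple subtraction, and a short Python sketch makes it easy to rerun against other SKUs. The prices are the list prices given in this article; the dictionary and function names are ours, purely for illustration.

```python
# List prices quoted in the article (US dollars per processor).
LIST_PRICE = {
    "Epyc 7601": 4200,   # 32 cores, 2.2 GHz, two-socket capable
    "Epyc 7551": 3400,   # 32 cores, 2.0 GHz, two-socket capable
    "Epyc 7551P": 2100,  # 32 cores, 2.0 GHz, single-socket only
}

def two_socket_premium(dual_sku: str, single_sku: str) -> tuple:
    """Return (dollar premium, premium as a fraction of the dual-socket price)."""
    premium = LIST_PRICE[dual_sku] - LIST_PRICE[single_sku]
    return premium, premium / LIST_PRICE[dual_sku]

premium, share = two_socket_premium("Epyc 7551", "Epyc 7551P")
print(f"${premium:,} per processor, {share:.0%} of the 7551 list price")
```

Because the share is a ratio, it is the same whether you look at one processor or at the pair in a two-socket box.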

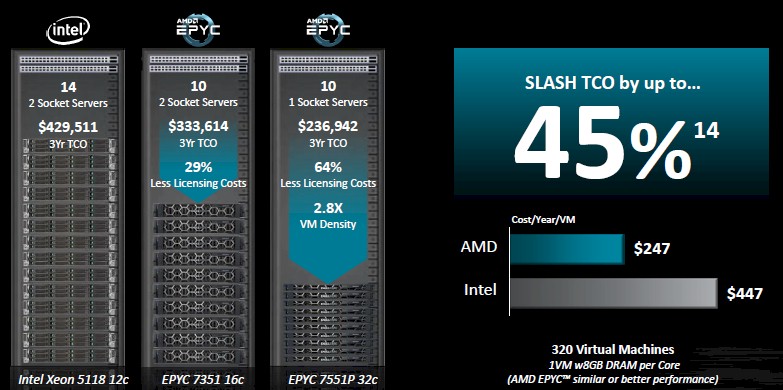

AMD can demonstrate that software licensing costs can be as much as 64 percent lower, and the VM density on the Epyc chips can be as high as 2.8X that of the prevailing middle-bin Xeon SP processors that are commonly deployed in enterprise datacenters; the overall total cost of ownership can be up to 45 percent lower.

“We have been putting tactical plans in place with our OEMs and ODMs to drive an absolute tidal wave of value for our customers,” Bounds says. “We are partnering closely with VMware, Microsoft, Red Hat, and others to make sure we are selling together and that is going to go a long way. And we are really unpacking our value proposition that in a one-socket scenario where we can dial up the core counts that are much, much higher than what is commonly used today and that really jacks the TCO. This is much, much lower than what customers can get in a two socket Xeon server today. We have great advantages, especially when it comes to hyperconverged infrastructure thanks to lower licensing costs. And more importantly, we really drive the density of VMs. Customers almost don’t know what to do with 2.8X greater VM density.”

Here is how the math works according to AMD. In the scenario below, the hypothetical customer wants 320 virtual machines, with one VM per core and 8 GB of memory per core:

The Intel-based machine is a Dell PowerEdge R740 with a pair of dead-center Skylake Xeon SP-5118 Gold processors, which have 12 cores running at 2.3 GHz, plus a 120 GB SATA flash drive for booting and six 480 GB SAS flash drives for capacity. The machines do not include operating systems, which companies usually bring to their own machines, but do include the ESXi hypervisor with the vSphere Enterprise Plus edition that activates the features of the hypervisors (like live migration of VMs) that they need. The total hardware costs come to $199,794, plus $16,004 for power and cooling for a year, a nominal fee for datacenter space, and another $42,880 for administration costs. Add it all up, and this Xeon setup with 14 servers costs $429,511 over three years.
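The server count follows from the sizing rule in AMD's scenario: 320 VMs, one VM per core, 8 GB of memory per core. Here is a minimal Python sketch of that rule; the function names are ours, and the memory-per-server figure is derived from the 8 GB per core rule rather than quoted by AMD.

```python
import math

def servers_needed(vms: int, sockets: int, cores_per_socket: int) -> int:
    """Servers required when each VM gets one physical core."""
    cores_per_server = sockets * cores_per_socket
    return math.ceil(vms / cores_per_server)

def memory_per_server_gb(sockets: int, cores_per_socket: int, gb_per_core: int = 8) -> int:
    """Memory per server implied by the 8 GB per core rule."""
    return sockets * cores_per_socket * gb_per_core

# Two-socket box with 12-core Xeon Gold 5118 processors.
xeon_servers = servers_needed(vms=320, sockets=2, cores_per_socket=12)
xeon_memory = memory_per_server_gb(sockets=2, cores_per_socket=12)
print(f"{xeon_servers} servers with {xeon_memory} GB each")
```

With 24 cores per box, 320 VMs do not divide evenly, so the count rounds up to 14 servers and leaves 16 cores spare. Any server with 32 cores needs only ten.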

Now, look at the two Epyc alternatives, one a two-socket server and the other a single-socket box, both with similar throughput for virtualized workloads. In the two-socket AMD cluster, each machine is a Dell PowerEdge R7425 using Epyc 7351 processors, which have 16 cores running at 2.4 GHz. Power and cooling cost about half as much, and administration costs are 20 percent lower because there are fewer servers, ten instead of 14. Including the VMware stack, the total cost of ownership over three years for this cluster of two-socket Epyc 7351 servers comes to $333,614, which is about 22 percent lower than the $429,511 for the Xeon cluster.

If customers really want to push it, they can adopt 32-core Epyc chips; in this comparison it is the Epyc 7551P, which has cores running at 2 GHz. Each server has the same main memory (8 GB per core) and the same storage as the two-socket machine, which means that with the ten nodes you still get those 320 VMs. This particular machine is a Dell PowerEdge R6415. The three-year total cost of ownership for this cluster of single-socket systems comes to $236,942, which is a 45 percent drop compared to the Xeon cluster, and the footprint is about a third the size of the Xeon cluster, too, thanks to the compute density in these Epyc processors.
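The relative savings can be checked directly from the three-year totals quoted for the three clusters. The Python sketch below does that and also derives a cost per VM per year by dividing each total by 320 VMs and three years. That division is our simplification, and AMD's own chart may calculate the per-VM figure differently; the dictionary keys are just labels we chose.

```python
# Three-year TCO totals quoted in the article (US dollars).
TCO_3YR = {
    "2S Xeon Gold 5118, 14 servers": 429_511,
    "2S Epyc 7351, 10 servers": 333_614,
    "1S Epyc 7551P, 10 servers": 236_942,
}
VMS = 320   # target VM count in AMD's scenario
YEARS = 3   # TCO horizon

baseline = TCO_3YR["2S Xeon Gold 5118, 14 servers"]

# Fractional savings relative to the Xeon cluster.
savings = {name: 1 - tco / baseline for name, tco in TCO_3YR.items()}

# Assumption: spread each total evenly over 320 VMs and three years.
per_vm_year = {name: tco / (VMS * YEARS) for name, tco in TCO_3YR.items()}

for name in TCO_3YR:
    print(f"{name}: {savings[name]:.1%} below Xeon, "
          f"${per_vm_year[name]:,.2f} per VM per year")
```

On these totals the two-socket Epyc cluster comes out roughly 22 percent below the Xeon baseline and the single-socket cluster roughly 45 percent below, which matches the "up to 45 percent" TCO figure AMD cites.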

It is hard to imagine why anyone would take the dual-socket setup over the single-socket setup given these considerations. (To be sure, some customers will want to load up 64 cores and 1 TB of memory per machine for very heavy virtualization workloads.) We realize, too, that AMD did not compare its top bin two-socket machine to the top bin or even penultimate bin Xeon SP processors.

“One of the criticisms we have had is that we focus too much on comparing to Intel’s top bin parts, which is not what most customers buy,” explains Bounds. “So we said fine. This value proposition gets even better as you get lower in the stack, and nowhere does it get better than when you are talking about cost per year per VM. Where do people actually buy? At the heart of the market. The Epyc machine on the right hand side blows away the Xeon machine that most people buy, which is on the left.”

These are the direct technical and economic benefits of the Epyc platform over the Xeon SP when it comes to enterprise datacenter virtualization, and they do not yet take into account that customers now have to worry about Foreshadow exploits: to be guaranteed secure VMs, they have to turn off HyperThreading on their Xeon systems.

“How would you like it if someone snuck into your datacenter and stole 30 percent of the machines overnight?” Bounds asks rhetorically. “You would be pretty miffed and you would be calling the police. The reality is that this has already happened, but datacenters have not keyed into it yet. We think that the coming server upgrade wave will be very much driven by these vulnerabilities.”

We think that the lack of an exciting Xeon SP roadmap looking ahead is going to be a big factor, too. We all know that “Cascade Lake” is not going to have more cores or more memory controllers than the Skylake Xeon SPs, and we don’t expect much from its “Cooper Lake” follow-on because these will still be etched in 14 nanometer processes with little room to cram more transistors on the die. There is every reason to believe that AMD will jack up the core counts with the future 7 nanometer “Rome” Epyc chips that are sampling now and will ship in 2019, a year before Intel gets its 10 nanometer “Ice Lake” Xeon SPs out the door.

This virtualization war is going to get real, real fast.

I generally agree with this analysis and hold a lot of AMD stock but how does Docker fit into this? Containers vs VMs is one area I rarely see explored in these kinds of comparisons.

In my neck of the woods Docker in production is indeed a rare occasion to find in the wild, both in the C and the Engine room people have been trained for VMs the last 2 decades and containerization in production is something only early adopters and visionaries ‘dare’ go into. The rest of ’em is IMHO still lost in idiotville.

Enterprise adoption therefore, besides the 2 groups I mentioned, are desperately in need to play catch up yet need a trigger event to do so. ( like virtualisation needed and got one in 2008 )

It is my firm belief that an imminent uh-oh Wile-e-coyote scaling moment ( eg. 7nm yield barriers ) should do the trick in a couple of years ( not months ) time.

Then all bets are, once again, off; if I would own Intel stock I would’ve shorted it last summer.

Aryan Blaauw says:

“Docker in production is indeed a rare occasion to find in the wild, both in the C and the Engine room people have been trained for VMs”

Q; Why is that? A rare occasion?

Q; What VM event in 2008?

Q; Who is in charge of the engine room?

Mike Bruzzone, Camp Marketing

NOTE CORRECTION; original comment post too late in the evening, data rows were not aligned with grade SKUs correctly. Everything lines up now. Sorry for any confusion shown by weekly grade SKUs weight is right on now. mb

By % open market channel inventory holding.

Xeon Skylake Scalable and Broadwell v4

Moving into Q4 sales I stated AMD open market processor and graphics unit channel inventories here;

https://www.seekingalpha.com/article/4207997-amd-now

So I will post Intel Skylake Scalable and Broadwell v4 open market channel inventories as of this week here.

2124 = 0.0007681%

2124G = 0.0061445%

2126G = 0.0000000%

2134 = 0.0084487%

2136 = 0.0084487%

2144G = 0.0007681%

2146G = 0.0030722%

2174G = 0.0000000%

2176G = 0.0076806%

2186G = 0.0000000%

2195 = 0.0069125%

2175 = 0.0015361%

2155 = 0.0291863%

2150B = 0.0000000%

2145 = 0.0238099%

2140B = 0.1374829%

2135 = 0.1006160%

2133 = 0.2242738%

2125 = 0.0499240%

2123 = 0.0000000%

2104 = 0.6013917%

2012 = 0.0000000%

8180 = 0.4462434%

8168 = 0.4454754%

8158 = 0.4216655%

8156 = 0.4055362%

8180M = 0.3195134%

8176M = 0.3640609%

8173M = 0.0307224%

8170M = 0.0944715%

8160M = 0.1635970%

8176 = 0.3318023%

8170 = 0.5330343%

8164 = 0.3732776%

8160 = 0.5967834%

8153 = 0.4738936%

8124 = 0.0000000%

8176F = 0.0000000%

8160F = 0.0675894%

8160T = 0.0422434%

6154 = 0.4869506%

6150 = 0.5983195%

6148 = 0.7680610%

6146 = 0.4808062%

6144 = 0.4577643%

6142 = 0.5645248%

6136 = 0.6044640%

6134 = 0.7465552%

6132 = 0.7089203%

6128 = 0.6305780%

5122 = 0.7557720%

6142M = 0.1551483%

6140M = 0.1175133%

6134M = 0.4593005%

6152 = 0.6966313%

6140 = 0.7995515%

6138 = 0.6528518%

6130 = 0.9746693%

5120 = 0.8633005%

5118 = 0.9454830%

5117 = 0.0660532%

5115 = 0.8318100%

6148F = 0.0921673%

6138F = 0.0445475%

6130F = 0.0230418%

6126F = 0.0906312%

6138T = 0.0192015%

6130T = 0.0145932%

6126T = 0.0145932%

5120T = 0.0614449%

5119T = 0.0084487%

6138P = 0.0000000%

4116 = 0.8617644%

4114 = 0.9224412%

4112 = 0.7841902%

4110 = 1.3702207%

4108 = 0.6682130%

3106 = 0.9063119%

3104 = 0.9032397%

4116T = 0.0168973%

4114T = 0.0261141%

4109T = 0.1006160%

1603 v4 = 0.0076806%

1607 v4 = 0.0130570%

1620 v4 = 0.9808138%

1630 v4 = 1.2980230%

1650 v4 = 0.5506997%

1660 v4 = 0.5192092%

1680 v4 = 0.5146008%

2623 v4 = 4.4447687%

2637 v4 = 3.4370728%

2603 v4 = 3.1275442%

2643 v4 = 3.5261678%

2608L v4 = 0.0000000%

2609 v4 = 4.4647383%

2620 v4 = 5.4724343%

2667 v4 = 2.4324490%

2618L v4 = 0.0000000%

2630L v4 = 0.0376350%

2630 v4 = 4.5484570%

2640 v4 = 4.5000691%

2689 v4 = 0.0076806%

2628L v4 = 0.0030722%

2650 v4 = 3.9263276%

2687W v4 = 1.2120002%

2648L v4 = 0.0069125%

2650L v4 = 0.0353308%

2658 v4 = 0.0122890%

2660 v4 = 3.6682591%

2680 v4 = 3.6621146%

2690 v4 = 3.4585785%

2683 v4 = 2.5991183%

2697 v4 = 0.0207376%

2695 v4 = 3.4954454%

2697 v4 = 2.8802286%

2698 v4 = 3.3510499%

2696 v4 = 0.7526997%

2699 v4 = 2.8218559%

2699A v4 = 0.0007681%

2699R v4 = 0.0000000%

4655 v4 = 0.0483878%

4610 v4 = 0.0268821%

4620 v4 = 0.0115209%

4627 v4 = 0.0422434%

4640 v4 = 0.0122890%

4628L v4 = 0.0015361%

4650 v4 = 0.0414753%

4660 v4 = 0.2020000%

4667 v4 = 0.0314905%

4669 v4 = 0.0268821%

8894 v4 = 0.0015361%

8893 v4 = 0.0890951%

8891 v4 = 0.0199696%

8890 v4 = 0.0867909%

8880 v4 = 0.0145932%

8870 v4 = 0.0061445%

8867 v4 = 0.0844867%

8860 v4 = 0.0107529%

8855 v4 = 0.0030722%

4850 v4 = 0.0115209%

4830 v4 = 0.0138251%

4820 v4 = 0.1405552%

4809 v4 = 0.1182814%

100%