Cloud giants Amazon, Alibaba, Baidu, Facebook, Google, and Microsoft are now designing their own AI accelerator chips. Is this a fad or a short-term phase the cloud industry is going through? We believe that designing custom chips for specific tasks will become mainstream, in and out of the cloud. Few chip market segments will be immune. Processors, network switches, AI accelerators – all will be profoundly affected.

Chip design and manufacturing is being disrupted by a new set of technical and economic enablers. Cloud giants designing AI chips is just the tip of a mass-customization asteroid impacting the computer chip manufacturing supply chain. There isn’t a single cause for this impact; many factors are colliding at the same time:

- Death of Moore’s Law leaves us with fast, large transistor count chips, even in mature previous-generation processes

- New architecture directions based on multi-chip modules (MCM) and system-in-package (SIP)

- Chip design tools maturing into complete development tool chains

- Licensable intellectual property (IP) blocks make it easy to assemble chips

- Multi-Project Wafers (MPW) democratize fab capacity for prototyping and limited production

- Customers writing in-house software frameworks

- Web giants create scale; emerging IoT giants aggregate into scale

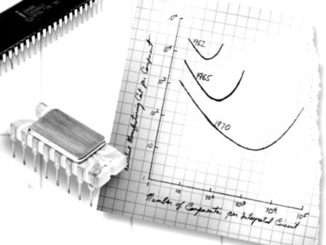

Death Of Moore’s Law

Moore’s law is effectively dead. Semiconductor fabrication companies (fabs) will say otherwise. However, we are at a point in the fab maturity cycle where shrinking current transistor processes makes transistors less reliable and more power hungry. As transistors shrink, designers must now use extra transistors to verify that a block of logic is producing correct results. And if designers pack too much logic too closely on a chip, both supplying power and dissipating the resulting heat become a challenge.

The net result is that while transistor counts are exploding at the leading edge of performance, that explosion is producing bigger, hotter chips, but the logic isn’t going to get much faster. At the same time, older fab processes, such as 28 nanometers, are still very useful for an increasing number of applications.

New Architectural Directions

If a chip designer decides not to push semiconductor technology in favor of pursuing architectural performance, then it can step back a silicon manufacturing generation or two, or simply aim for a not very aggressive chip design point in a current process. The result can be smaller, cooler, more affordable chips. Aiming for architectural advantage is the new silicon design “high ground” to get ahead of competition.

For example, Intel’s “Skylake” Xeon Scalable server processor uses about 690 mm² of 14 nm silicon area for its high-end 28-core server designs. While Intel has stopped quoting transistor counts, Nvidia’s Volta generation of GPU chips has 21 billion transistors in Taiwan Semiconductor Manufacturing Company’s 12 nanometer process, for a reticle- and yield-busting 815 mm² chip.

AMD took a different approach with its Epyc server product line. Epyc is based on AMD’s eight-core Zeppelin die. Each Epyc processor package contains four Zeppelin dies connected by AMD’s proprietary Infinity Fabric on-package interconnect, for a potential total of 32 cores using 19.2 billion transistors and 852 mm² of silicon area. AMD’s innovative Epyc architecture is the result of different architecture and design trade-offs and uses a different mix of interconnect, logic and storage than other processor designs. The result is that Epyc’s total transistor count and die area are in the same ballpark as the largest chips from Intel and Nvidia, but at a much lower manufacturing cost. AMD hints that its architecture has the potential to scale within a single package to larger chips, larger chip counts or both.
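To see why four small dies can cost less to manufacture than one large die, here is a minimal sketch using a simple Poisson yield model. The model, the defect density value, and the function names are our illustrative assumptions, not AMD's or any fab's published figures. The sketch also assumes a hypothetical monolithic design would need the same 852 mm² of total area, and it ignores packaging and test costs.

```python
import math


def poisson_yield(area_mm2: float, defects_per_cm2: float) -> float:
    """Fraction of dies expected to be defect-free under a simple Poisson model."""
    area_cm2 = area_mm2 / 100.0
    return math.exp(-area_cm2 * defects_per_cm2)


def silicon_per_good_product(die_area_mm2: float, dies_per_product: int,
                             defects_per_cm2: float) -> float:
    """Average silicon area (mm^2) that must be fabricated per working product.

    Assumes each die is tested before packaging, so only good dies are assembled.
    """
    good_fraction = poisson_yield(die_area_mm2, defects_per_cm2)
    return dies_per_product * die_area_mm2 / good_fraction


# Illustrative assumption only: not a published defect density for any process.
DEFECT_DENSITY = 0.2  # defects per cm^2

epyc_total_area = 852.0                # mm^2, total silicon quoted for Epyc
zeppelin_area = epyc_total_area / 4    # mm^2 per Zeppelin die

# Four small dies per package versus one hypothetical die of the same total area.
mcm = silicon_per_good_product(zeppelin_area, 4, DEFECT_DENSITY)
monolithic = silicon_per_good_product(epyc_total_area, 1, DEFECT_DENSITY)

print(f"Silicon fabricated per good 4-die package: {mcm:.0f} mm^2")
print(f"Silicon fabricated per good monolithic die: {monolithic:.0f} mm^2")
print(f"Monolithic / multi-die ratio: {monolithic / mcm:.2f}x")
```

Under this model the gap widens as defect density or die area grows, which is one plausible reading of why a multi-chip module becomes attractive near the reticle limit.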

Chip Design Tools Maturing

eSilicon, Cadence, Mentor, Synopsys and others offer cloud-hosted design platforms, virtual prototyping and verification services for chip designers with large or small budgets. While designing chips is not yet as accessible as designing web pages, the key to effectively leveraging billion-transistor design budgets is to license and/or reuse IP blocks – parallel use of repeatable structures is the key to success.

A repeatable structure can be a cache memory block, a processor core, a memory controller, etc. A repeatable structure is any function that you need more than one copy of to increase overall throughput. Repeatable structures are how both memory capacity and processor cores are scaled to increase capacity and performance.

Put another way, it is virtually impossible to afford enough human design talent to fill a billion transistors with unique, high-value logic. Repeatable structures and parallel architectures drove the market need to put billions of transistors on a chip.

Licensable And Open Source IP Blocks

There are many sources for general purpose and purpose-built repeatable structures, including the design tools companies, above, and:

- Arm is a credible source for datacenter-rated licensable IP. Arm has various interconnect designs, but for the high-end infrastructure market Arm licenses its proprietary CoreLink Cache Coherent Network (CCN) and CoreLink Coherent Mesh Network (CMN) products. Arm’s high-end CoreLink designs are optimized for its larger Arm 64-bit Cortex processors.

- Wave Computing bought MIPS recently, which should bode well for both, as artificial intelligence (AI) acceleration is likely to be a highly requested IP block for at least the next decade or two.

- RISC-V aims to democratize compute-intensive repeatable structures with open source processor cores. Alibaba, Cadence, Google, GlobalFoundries, Huawei, IBM, Mellanox, Mentor, Qualcomm, and Samsung are listed among its members.

If your sovereign market is big enough, you may have more choices for ultramodern processor cores:

- AMD might license its Epyc server architecture.

- Arm and Qualcomm might license a server-quality Arm 64-bit core.

- IBM might license its Power9 server architecture.

And, while AMD is ahead of the market with its Epyc MCM, others are also investing in interconnect IP:

- Intel has been working on its proprietary Embedded Multi-die Interconnect Bridge (EMIB) point-to-point on-chip interconnect technology, and is licensing a subset of EMIB as the Advanced Interface Bus (AIB).

- Arm has various interconnect designs, but for the high-end infrastructure market Arm licenses its proprietary CoreLink Cache Coherent Network (CCN) products. Arm’s CCN designs are optimized for its larger Arm 64-bit Cortex processors.

- SiFive’s TileLink is an on-chip interconnect for RISC-V processor cores. TileLink appears to be more like AMD’s Infinity Fabric protocol than Intel’s EMIB/AIB point to point interconnects.

- The USR Alliance promotes and certifies its ultra-short reach (USR) system-on-chip (SoC) interconnect to its expanding membership.

Democratizing Fab Capacity

It used to be that chip design and manufacturing were inseparable; one could not be done without intimate knowledge of the other. As the industry matured, some design could be done separately from the fab, but only with a huge transfer of specialized knowledge. Then, competitive processor companies needed to own their own fabs to push bleeding edge performance. And last year AMD proved that design and fab can be separated even for bleeding edge performance.

The only remaining challenge is lowering the price of manufacturing validated designs for small design companies. Multi-Project Wafer (MPW) manufacturing capacity is now available across the globe. MPW puts many different designs on a common wafer, so that prototypes and low-volume design runs do not have to incur the entire cost of a production wafer. MPW pricing is now available from the largest fabs (such as GlobalFoundries, Samsung and TSMC) and from smaller and boutique specialty fabs (such as KAST’s WaferCatalyst, IMEC / Fraunhofer, Leti / CMP, MOSIS, and Muse Semiconductor).

MPW democratizes fab access for smaller design houses and for commercial and academic research and development projects. Larger design customers can then order normal insanely high-volume wafer runs from the usual fab sources.
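The cost-sharing idea behind MPW can be sketched in a few lines. This is a rough illustration under our own assumptions: the run cost, the project names, and the rule of splitting cost in proportion to the area each design occupies are all hypothetical, and real MPW providers publish their own pricing schemes.

```python
from dataclasses import dataclass


@dataclass
class Project:
    name: str
    area_mm2: float  # silicon area the design occupies on the shared reticle


def mpw_cost_shares(projects: list[Project], shared_run_cost: float) -> dict[str, float]:
    """Split one shared run's cost across projects in proportion to area used."""
    total_area = sum(p.area_mm2 for p in projects)
    return {p.name: shared_run_cost * p.area_mm2 / total_area for p in projects}


# Hypothetical figures for illustration only.
RUN_COST = 1_000_000.0  # cost of masks plus wafers for one shared run
projects = [
    Project("startup_accelerator", 25.0),
    Project("university_testchip", 10.0),
    Project("iot_sensor_soc", 15.0),
]

shares = mpw_cost_shares(projects, RUN_COST)
for name, cost in shares.items():
    print(f"{name}: pays {cost:,.0f} instead of {RUN_COST:,.0f} for a dedicated run")
```

However the split is actually priced, the point is the same: no single small project carries the full cost of a mask set and wafer run.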

Software Frameworks Beget Hardware Accelerators

Open source operating environments and applications code have enabled the web giants to co-design and optimize datacenter infrastructure. As chip design and manufacturing further commoditizes, these companies will find it increasingly easy to experiment with and deploy new processor instruction sets, including AI accelerators.

In fact, this is already well under way for AI. Most web giants have in-house deep learning model development environments that they’ve opened or made publicly accessible and they have AI chip designs underway:

- AWS has invested in Apache MXNet and Amazon-developed AI chips for consumer devices

- Baidu created PaddlePaddle and Kunlun chip

- Google created TensorFlow and several generations of TPU chips

- Microsoft created Cognitive Toolkit and its FPGA-powered Brainwave add-in cards

- Tencent created DI-X platform (with proprietary models and algorithms) and ncnn (mobile-oriented), plus a partnership with MediaTek

- Alibaba has not weighed in on software frameworks, yet, but has published many original research papers on deep learning architectures and algorithms and is developing a Neural Processing Unit (NPU)

In the next tier of cloud vendors, IBM has Cognitive Computing and Watson services and partnered with many AI accelerator companies under the OpenPower initiative. And, in the social media world, Facebook has driven development of Caffe and Caffe2.

And then there are several dozen startups creating AI accelerator chips. Wave Computing is at the leading edge with its purchase of MIPS, and we’ll leave the rest as an exercise for the reader, as the full list is long.

It is also not a coincidence that most of the web giants are also investing in quantum computing as a potential wild-card accelerator for neural networks.

Massive Scale

Once a web giant decides to deploy a custom chip at scale, throughout its worldwide datacenter infrastructure, that means it will buy on the scale of hundreds of thousands to millions of chips. If each chip contributes a few watts of improved efficiencies, overall efficiency improvement can easily total tens of megawatts, while at the same time solving profitable new problems faster and with greater accuracy.
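The arithmetic behind that claim is simple enough to check. The sketch below multiplies a chip count by a per-chip saving; the three scenarios are our own illustrative picks from the "hundreds of thousands to millions" and "a few watts" ranges, not reported deployments, and datacenter cooling overhead is not modeled.

```python
def fleet_power_savings_mw(chip_count: int, watts_saved_per_chip: float) -> float:
    """Total power saved across a fleet, in megawatts."""
    return chip_count * watts_saved_per_chip / 1_000_000


# Hypothetical scenarios: (number of chips deployed, watts saved per chip).
scenarios = [
    (100_000, 3.0),
    (1_000_000, 3.0),
    (5_000_000, 5.0),
]

for chips, watts in scenarios:
    saved = fleet_power_savings_mw(chips, watts)
    print(f"{chips:>9,} chips x {watts:.0f} W saved each = {saved:.1f} MW")
```

In these scenarios, only the multi-million-chip deployment reaches tens of megawatts, so the claim holds at the top of the ranges rather than across them.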

Web giants also often have fab relationships to build custom chips for consumer devices (such as Google Home and Amazon Echo Dot). There are more economies of scale in chip manufacturing when a datacenter AI accelerator chip is only one of several chips running at high volume at a fab.

Impact

We haven’t yet seen the real impact of these converging trends. As interesting as these current experiments in AI acceleration are, they are only the beginning.

A web giant with control of its own software operating environments and deep learning modeling language will also have access to chip design talent and tools, the best of open source and licensable IP blocks, and can build prototype chips and then run high volume production silicon anywhere on the planet. The web giant can customize its integer and floating-point processor cores. It can build SoC designs with custom processor cores, custom AI accelerators, custom I/O and memory controllers, etc. And it can optimize its in-house software performance on its in-house chips in ways that general purpose software development tools can’t approach on mass-market chips.

The following year, the web giant might design completely different chips. Viruses designed for standardized operating environments and standard instruction sets won’t execute on these chips – black hats will need better social engineering to gain access to the web giant’s systems, but those systems might change on a regular basis.

This is a fundamentally different datacenter than the one we currently live in.

Today a security attacker can examine standard IP blocks for weaknesses over several years. Imagine pairing best practice open source security techniques with unique and undocumented hardware features that change over time.

Perhaps we’re seeing the birth of Cyberpunk’s corporate intrusion countermeasures electronics (ICE)? I smell burning chrome…

Paul Teich is an incorrigible technologist and Principal Analyst at DoubleHorn, covering the emergence of cloud native technologies, products, services, and business models. He is also a contributor to Forbes/Tech. Teich was previously a Principal Analyst at TIRIAS Research and Senior Analyst for Moor Insights & Strategy.

Need to update your sources as Facebook has moved to PyTorch and Caffe2 is getting fused with PyTorch

Thanks for reminding me, lots going on. Facebook’s continuing involvement with PyTorch continues to make my point that the web giants are not letting others control their deep learning destinies!

https://caffe2.ai/blog/2018/05/02/Caffe2_PyTorch_1_0.html

https://developers.facebook.com/blog/post/2018/05/02/announcing-pytorch-1.0-for-research-production/

Follow-up article addressing the new Tesla AI/self-driving chip? What is it: FPGA/ASIC/Tensor/GPU/Neural Processor?

Will be curious if Google creates a new CPU to go with Fuchsia. Did not make sense when using Linux as assume the plan had been to move to Zircon for awhile.

But once on Zircon it makes sense.

Excellent work. Thanks.

I can’t wait to see what other companies come up with to answer what Intel and AMD are doing. As of now only Intel and AMD have the bulk of the market when it comes to home computing, and so it would be nice to have new competition from other companies such as Amazon.

There is no problem with having their own accelerators or products; otherwise OEM products will be stalled in the warehouse. What is better is to come to a table of understanding 🙂