It has been a year since Intel launched its “Skylake” Xeon SP processors, and even though the big cloud builders and hyperscalers got access to these chips earlier still, they are only now rolling out new hardware systems and related virtual machine instance types based on the Skylakes.

Amazon Web Services, for instance, has debuted three new instance types based on custom Skylake processors from Intel, and these were basically the highlight of the AWS Summit in New York this week. The new Z1d, R5, and R5d were crafted for various kinds of high performance computing: not just traditional simulation and modeling, but workloads that can make use of high clock speeds, lots of memory, and non-volatile storage.

The Z1d instances are based on a custom Xeon SP, with up to 24 cores and up to 48 virtual CPUs per instance thanks to HyperThreading. Because the vCPU count tops out at 48 for the Z1d instance type, we wondered if this machine was based on a single-socket server, but we don’t think so. The fastest standard 24-core Skylake Xeon that Intel offers is the SP-8168 Platinum, which has a 205 watt thermal envelope and spins at 2.7 GHz under normal circumstances. We don’t think Intel can crank the clock speed of that chip up to 4 GHz without throwing off a lot of heat. So despite the need to deliver a single-socket server that can compete with AMD’s Epyc 7000s, we think Intel just jacked up and balanced out the clocks on a 12-core SP-6146 Gold chip, which spins at 3.2 GHz normally and fits in a 165 watt thermal envelope, and that two of these sit in each Z1d server.
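The reasoning above boils down to a bit of arithmetic: how many sockets each candidate chip needs to reach 48 vCPUs, and how far its base clock would have to be pushed to hit 4 GHz. The sketch below uses only the chip figures quoted in the paragraph and assumes two vCPUs per core (HyperThreading). Treating the jump from base clock to 4 GHz as a proxy for heat is a crude simplification of our own, not anything Intel or AWS has stated.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Chip:
    name: str
    cores: int
    base_ghz: float
    tdp_watts: int


# Specs for the two standard parts, as quoted in the text.
SP_8168 = Chip("SP-8168 Platinum", cores=24, base_ghz=2.7, tdp_watts=205)
SP_6146 = Chip("SP-6146 Gold", cores=12, base_ghz=3.2, tdp_watts=165)

THREADS_PER_CORE = 2  # HyperThreading: each core exposes two vCPUs
TARGET_VCPUS = 48     # largest Z1d instance
TARGET_GHZ = 4.0      # AWS's stated all-core Turbo Boost speed


def candidate(chip: Chip) -> dict:
    """Sockets needed to reach TARGET_VCPUS with `chip`, plus how far its
    base clock would have to be raised to hit TARGET_GHZ."""
    vcpus_per_socket = chip.cores * THREADS_PER_CORE
    sockets = math.ceil(TARGET_VCPUS / vcpus_per_socket)
    return {
        "chip": chip.name,
        "sockets": sockets,
        "watts_per_socket": chip.tdp_watts,
        "clock_uplift_pct": round((TARGET_GHZ / chip.base_ghz - 1) * 100, 1),
    }


for chip in (SP_8168, SP_6146):
    print(candidate(chip))
```

Run as written, this shows the 24-core part would need its clock raised by about 48 percent on a 205 watt chip in a single socket, while the 12-core part would need about 25 percent on a 165 watt chip in a two-socket box, which is why the second option looks more plausible.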

Whatever the underlying hardware looks like, AWS isn’t saying. What the world’s largest public cloud does say is that the custom Xeon SP in the box runs at 4 GHz with all cores running in Turbo Boost mode. Normally, the Turbo Boost speed on a Xeon chip depends on how many cores are active, with the highest clock speeds available to a running core when the most other cores on the chip are idle.
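The dependence of Turbo Boost on active core count can be modeled as a table of frequency bins. The sketch below is illustrative only: the bin table is HYPOTHETICAL and does not reflect Intel’s published figures for any Skylake part, and real chips also factor in power and thermal limits that this lookup ignores.

```python
# HYPOTHETICAL turbo-bin table for an imaginary 12-core chip: each entry is
# (maximum number of active cores, highest clock in GHz allowed at that count).
# The numbers are illustrative only; they are not Intel's published bins.
HYPOTHETICAL_TURBO_BINS = [
    (2, 4.2),
    (4, 4.0),
    (8, 3.8),
    (12, 3.6),
]


def max_turbo_ghz(active_cores: int, bins=HYPOTHETICAL_TURBO_BINS) -> float:
    """Return the highest clock a running core may reach when `active_cores`
    cores are busy. Bins are checked from fewest to most active cores."""
    if active_cores < 1:
        raise ValueError("at least one core must be active")
    for max_active, ghz in bins:
        if active_cores <= max_active:
            return ghz
    raise ValueError("more active cores than the chip has")


def all_core_turbo_ghz(bins=HYPOTHETICAL_TURBO_BINS) -> float:
    """Clock when every core is busy: the last (largest) bin."""
    return bins[-1][1]


for n in (1, 4, 8, 12):
    print(n, "active cores ->", max_turbo_ghz(n), "GHz")
```

In this model, AWS’s claim of 4 GHz with all cores in Turbo Boost amounts to saying that the last row of such a table reads 4.0 GHz for the Z1d’s custom part, rather than only the few-cores-active rows.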

The Z1d instances come with 8 GB of memory per vCPU, which works out to 384 GB per machine, and the server has a pair of 900 GB flash drives that link to the compute complex using the NVM-Express protocol, which reduces latency on the local storage. The Z1d instances have a chunk of their network bandwidth allocated to dedicated links to the Elastic Block Store (EBS) service, with up to 14 Gb/sec of transfer capability, and the machines also have Amazon’s homegrown Elastic Network Adapter (ENA), which allows network bandwidth to scale dynamically with compute up to a peak of 25 Gb/sec. The machines link to each other and to the outside world using Ethernet, of course, and AWS allows machines used for clustered applications to be placed in close proximity, in what it calls a Cluster Placement Group, to reduce latencies as work and data flit around the cluster.

Any Amazon Machine Image (AMI) that runs on a C5 or M5 instance can be deployed unchanged on the Z1d instance. AWS says that these instances are particularly well suited for relational databases that have per-core software pricing (since these cores do a little more work than standard parts, fewer of them are needed for a given amount of work) as well as for electronic design automation (EDA) and any other compute-bound simulation or modeling workload from scientific or financial HPC.
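Both the 384 GB figure and the per-core licensing argument reduce to simple arithmetic. This is a minimal sketch: the workload size and per-core throughput are hypothetical, and the assumption that per-core throughput scales linearly with clock speed is ours, not AWS’s (memory-bound work will scale less than this).

```python
import math

GB_PER_VCPU = 8      # Z1d memory ratio, from the text
Z1D_MAX_VCPUS = 48   # largest Z1d instance, from the text


def instance_memory_gb(vcpus: int, gb_per_vcpu: int = GB_PER_VCPU) -> int:
    """Memory for an instance with a fixed GB-per-vCPU ratio."""
    return vcpus * gb_per_vcpu


def cores_to_license(work_units: float, units_per_core_per_ghz: float,
                     clock_ghz: float) -> int:
    """Cores needed to finish `work_units` in a fixed time window, ASSUMING
    per-core throughput scales linearly with clock speed (a simplification)."""
    return math.ceil(work_units / (units_per_core_per_ghz * clock_ghz))


print(instance_memory_gb(Z1D_MAX_VCPUS))          # 384
# Hypothetical workload: 100 units of work, 1 unit per core per GHz.
print(cores_to_license(100, 1.0, clock_ghz=2.7))  # 38
print(cores_to_license(100, 1.0, clock_ghz=4.0))  # 25
```

Under these assumptions, 4 GHz cores would need roughly a third fewer licensed cores than 2.7 GHz cores for the same work, which is the kind of saving that matters when database software is priced per core.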

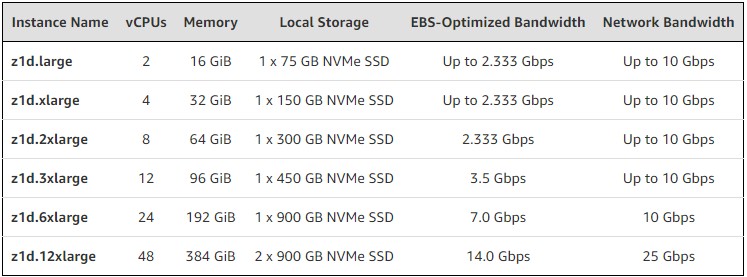

There are six Z1d instance types:

Two things about the Z1d instances are still unknown: when they will be available and what they will cost.

The other two forthcoming instance types are called R5 and R5d, and these are aimed at memory-intensive workloads such as high-speed databases, Memcached and other kinds of caches, in-memory databases, and other sorts of analytics. The R5 instances sport a custom SP-8000 Platinum series chip running at up to 3.1 GHz, also using always-on Turbo Boost across all cores.

We think it is highly likely that the underlying server in the R5 instance is a two-socket box based on a goosed SP-8168, which normally has 24 cores and 48 threads running at a baseline of 2.7 GHz in a 205 watt thermal envelope. Two of these in a system would give the requisite 96 threads to support the 96 vCPUs in the largest configuration of the R5 instance. The R5 instances have the same 8 GB per vCPU, for up to 768 GB, and offer the same EBS and networking options as well as the Cluster Placement Group feature.
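The speculated R5 configuration can be checked the same way: socket count times cores times threads gives the vCPU count, and the 8 GB per vCPU ratio gives the memory. This sketch encodes our guess of two 24-core SP-8168 chips, which AWS has not confirmed, and assumes two threads per core.

```python
def vcpus(sockets: int, cores_per_socket: int, threads_per_core: int = 2) -> int:
    """vCPUs exposed by a server, one per hardware thread."""
    return sockets * cores_per_socket * threads_per_core


# Our speculated R5 host: two 24-core SP-8168 chips with HyperThreading on.
r5_vcpus = vcpus(sockets=2, cores_per_socket=24)
r5_memory_gb = r5_vcpus * 8  # 8 GB per vCPU, as with the Z1d

# How far the all-core clock (up to 3.1 GHz) sits above the 2.7 GHz base.
uplift_pct = round((3.1 / 2.7 - 1) * 100, 1)

print(r5_vcpus, r5_memory_gb, uplift_pct)
```

This prints 96 vCPUs and 768 GB, matching the largest R5 instance, and shows the “goosed” all-core clock sits about 14.8 percent above the chip’s standard base clock, a much smaller stretch than the Z1d’s 4 GHz target.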

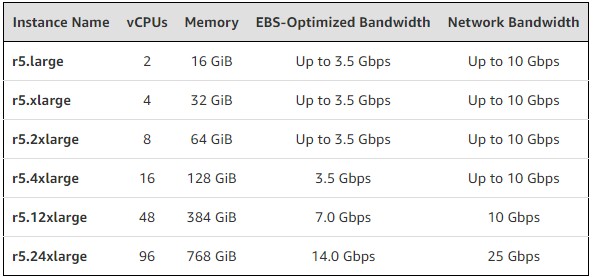

Here is what the six R5 instances look like:

The timing and pricing of the R5 instances were also not divulged, but AWS said that it would eventually offer NVM-Express flash storage on these instances, with up to 3.6 TB of local flash for the biggest R5 instance with 96 vCPUs. These flash-enhanced R5 instances will be called the R5d, and they will carry their own pricing.
