It has been difficult for the supercomputing community to watch the tools it has honed over many years get snatched up and popularized by a more commercially oriented community like AI.

Without tireless work at supercomputing sites around the world, much of what AI hinges upon simply wouldn’t work. For instance, those GPUs that are all the rage for training deep learning models? They would certainly not be ready for AI primetime, to say nothing of the many programming approaches that let complex problems scale massively and the countless other techniques for extracting performance across different model types.

But oddly enough, the birthplace of some of the core tech the AI community rests upon can look a little outdated in comparison, at least from a generalist’s point of view. Herein lies the tension that bubbled forth at many a session this week at the International Supercomputing Conference (ISC), and at events like it as far back as 2016.

This summer, the conversation in HPC about how AI fits into the future of supercomputing systems has shifted from skepticism about whether AI would have any impact at all to acknowledgement, albeit begrudging at times, as an AI in HPC panel at ISC revealed this morning.

The HPC vendor ecosystem, which has been very quick to tout its AI prowess, has not had much to say about where HPC fits in AI (and vice versa). A first step in that direction came from Intel, the one company whose story is strongest in terms of standard system share but shakiest in terms of future strategy for both AI and HPC, with its Nervana chips still a ways off and the Knights family of HPC processors no longer on the chessboard.

Instead of spending time wondering what the future holds for HPC, Raj Hazra, Intel’s VP and General Manager for Enterprise and Government (and a long-time speaker at HPC events on behalf of the chip giant), cut to the chase. He argued that supercomputing in the post-exascale world of 2025 will look quite different from a systems and workflow standpoint, and that those differences highlight just how much AI has innovated on HPC’s back in recent years.

In his many years on the HPC speaking circuit, including his time as general manager of Intel’s HPC business unit, Hazra has given a similar talk before: one about the need to evolve HPC systems into a larger platform. The last time was when big data was all the rage and companies were looking for new ways to market the same systems and software to meet a perceived fresh need.

This time things are different. AI might be overhyped generally, but technologically speaking it is not a flash in the pan or a media-cycle spike, at least not as far as HPC is concerned. Nor is it a natural extension of some more glacial IT phenomenon like the rise of big data (cheap deep storage and new data creation capabilities). It is a truly unique technical inflection point. And instead of HPC learning to capitalize on the movement, HPC has remained central to it on both the hardware and software fronts, which makes this uncharted territory in some ways for the supercomputing set to grapple with.

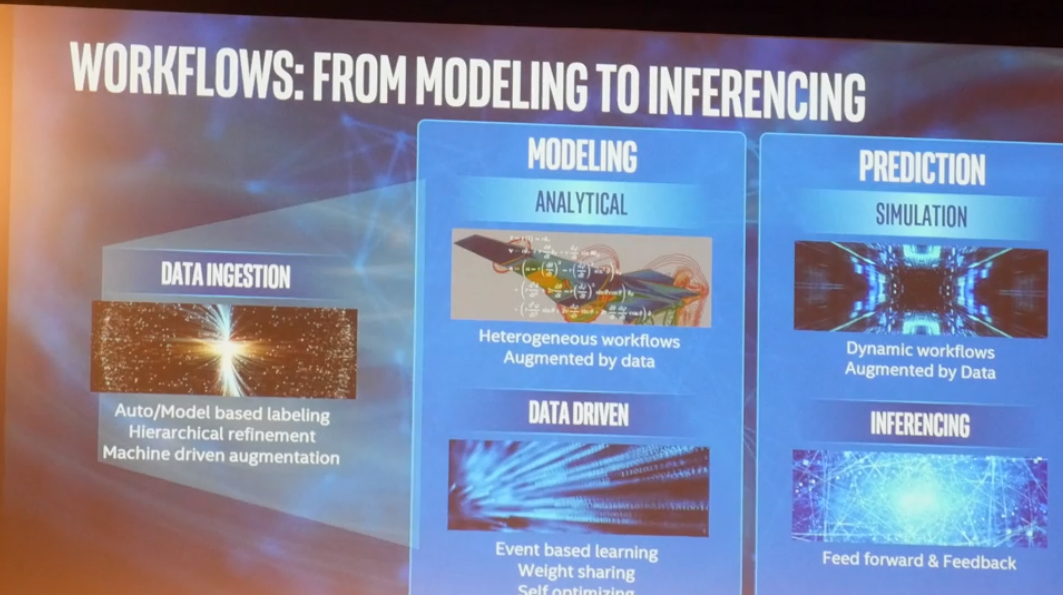

So instead of talking about iterating on old systems to meet a new demand, Hazra focused on redefining supercomputing as a whole. It turns out that future looks a lot less like the modeling- and simulation-bound present, with its systems valued for floating-point performance, and a lot more like machine learning. The shift also implies little concern for peak performance beyond what it means for self-teaching analysis. A reimagining, indeed.

Supercomputing in the future looks like the current reality of systems handling AI workloads, not necessarily in terms of individual hardware or software elements but in terms of problem-solving approaches on current hardware. Hazra talked about supercomputing in terms of “new techniques and tools in this golden age of data” rather than new processors or co-processors. As for modeling and simulation, he argued, the real limitation for future HPC is not hardware but the analytical models that constrain target problem domains.

To reach efficiency and productivity by 2025, supercomputing needs to move beyond analytical models and simulations, augmented with data, that predict an outcome, toward a new world that is not constrained by a physical equation, a set of behaviors, or a distribution function. HPC must move toward using causality, context, and knowledge about the task to create a model, drawing not just on data but on its own execution to find the answers, he argued.

We have discussed how deep learning might displace some traditional modeling and simulation areas, especially those largely dominated by image data, as in astronomy, weather, and seismic analysis. This is key to the shift in HPC that Hazra sees coming. It requires a rethink of HPC workflows, starting with data ingest and following through to the model, which he says will infer rather than predict.

It is more complex than this. There are plenty of physics-based scientific problems that neural networks cannot simulate. But we can see where Intel is going with this.

The end goal of future supercomputing is to enable intelligent applications, Hazra argues. To get there, HPC needs to integrate three properties common in today’s more mainstream AI shops. Anomaly detection is one key: moving beyond mere points on a graph to find what is different, and applying that same capability across a range of other applications and situations. The eventual goal of these intelligent applications is autonomous learning, putting all of the above into practice so the system can go its unsupervised way and deliver results effortlessly.

One will notice that, for an Intel presentation, this was light on hardware and heavy on lofty concept. The story Hazra tells, of an HPC future where simulations matter far less than self-teaching algorithms that treat raw data as only one guidepost, has merit. What is far less clear is how existing HPC hardware systems will fit into this paradigm.

What is certainly true is that if AI offers a path to fast, reliable scientific results in an energy-efficient manner, without billion-dollar investments in machines that push general-purpose CPUs and accelerators to their limits, this concept will move toward reality.

But for now, most HPC problems are rooted in numerical simulation because that is what is stable, time-tested, and practical. Getting that to change will be an uphill battle for Intel and others, and maybe not a wise war for any chipmaker to wage.
