The relationship between the HPC and AI communities is not unlike a sibling rivalry: computationally speaking, both come from the same stock, but they are fundamentally different individuals. They draw on similar elements to entirely separate ends, and neither is ever quite sure which one is the favored child, mostly because that changes depending on the task being judged.

This concept took center stage today at the International Supercomputing Conference (ISC18) in Germany, at a panel titled “Will HPC Transform AI or Will AI Transform HPC?” The central question was “does the HPC community benefit from joining a larger AI marketplace, or will it be smothered by the embrace of a market that is much larger and more powerful than itself.” If this isn’t classic sibling rivalry, it’s hard to say what would be.

These questions are complex, and the panel also addressed a number of other technical issues. For instance, should the HPC community have to work with half-precision arithmetic in order to gain the benefits of leading-edge accelerators in the future? These and other issues require HPC to rethink how it operates, whereas AI, springing from different roots, can take a native approach, free from legacy.
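To see why half precision is not a drop-in replacement for HPC codes, here is a minimal Python sketch (standard library only; the helper names are hypothetical) that emulates IEEE half precision with the `struct` module's `e` format. Accumulating 4,096 ones entirely in half precision stalls at 2,048, because above 2,048 the gap between representable half-precision values is 2. Keeping the inputs in half precision but accumulating in wider precision (double here, standing in for the FP32 accumulation that, for example, NVIDIA's Volta tensor cores use) recovers the exact answer.

```python
import struct


def to_fp16(x: float) -> float:
    """Round a Python float (IEEE binary64) to the nearest IEEE binary16 value."""
    # The struct "e" format packs to half precision using round-half-to-even.
    return struct.unpack("<e", struct.pack("<e", x))[0]


def sum_fp16(values):
    """Accumulate entirely in half precision: every partial sum is rounded."""
    total = 0.0
    for v in values:
        total = to_fp16(total + to_fp16(v))
    return total


def sum_mixed(values):
    """Store inputs in half precision but accumulate in double precision."""
    total = 0.0
    for v in values:
        total += to_fp16(v)
    return total


ones = [1.0] * 4096
print(sum_fp16(ones))   # 2048.0: 2048 + 1 rounds back to 2048 in half precision
print(sum_mixed(ones))  # 4096.0
```

Nothing about the inputs changed between the two functions; only where the rounding happens did, which is why mixed-precision schemes matter so much to simulation codes that sum many small contributions.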

The HPC community is now essentially split into two camps: “the HPC purists” and “the AI agnostics.” There is also a third, slightly smaller but growing camp: “the HPC and AI evangelists.” We want to explore the makeup of this third camp in a little more detail.

The Next Platform has been discussing the convergence of HPC and AI for a long time, particularly the significance of accelerators to HPC. We have explained in detail how subtle it is to even separate HPC from AI. It’s complicated. Many in the field are wrestling with the concept; even experts have a tough time explaining the nuances.

So, Will HPC Transform AI or Will AI Transform HPC?

At ISC, a panel of experts moderated by John Shalf of Lawrence Berkeley National Laboratory, including Peter Messmer of NVIDIA, Alessandro Curioni of IBM and Satoshi Matsuoka of RIKEN and the Tokyo Institute of Technology, tackled the challenges of HPC and AI, framed around the question “what are the applications, and what will science look like 20 years from now if a merger of AI and HPC is successful?” The experts discussed the many ways in which AI and “traditional” HPC are not only merging but in fact have a healthy symbiotic relationship with each other.

There are two main threads here. The first is the integration of AI into the scientific process. As a simple example, machine learning techniques can be applied ahead of time to distil or reduce data, removing simulation steps before the heavy-lift simulation is computed with our traditional models. The second is how the physical act of running AI training affects the selection of the hardware itself. Often these two threads collide and take on a tinge of “cart before the horse,” where the community thinks that machines are being built to run nothing but AI, all the time, at the expense of more important simulations. This isn’t the case; one hand washes the other.
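As a minimal sketch of the first thread, assuming a one-dimensional design parameter and using piecewise-linear interpolation as a deliberately simple stand-in for a trained machine learning model, the Python below runs a hypothetical `expensive_simulation` at a handful of training points, ranks every candidate with the cheap surrogate, and spends full simulations only on a short list. All names and numbers are illustrative, not taken from the panel.

```python
import math


def expensive_simulation(x: float) -> float:
    """Hypothetical stand-in for a costly simulation; lower scores are better."""
    return (x - 0.3) ** 2 + 0.01 * math.sin(20 * x)


def linear_surrogate(train_x, train_y):
    """Return a cheap predictor that linearly interpolates between training points.

    train_x must be sorted ascending; queries outside its range are clamped.
    """
    def predict(x: float) -> float:
        if x <= train_x[0]:
            return train_y[0]
        if x >= train_x[-1]:
            return train_y[-1]
        for i in range(1, len(train_x)):
            if x <= train_x[i]:
                x0, x1 = train_x[i - 1], train_x[i]
                y0, y1 = train_y[i - 1], train_y[i]
                return y0 + (y1 - y0) * (x - x0) / (x1 - x0)
        return train_y[-1]  # not reached for sorted input
    return predict


def screen_then_simulate(candidates, n_train=11, shortlist=10):
    """Simulate a few training points plus a surrogate-ranked shortlist only."""
    calls = 0

    def simulate(x):
        nonlocal calls
        calls += 1
        return expensive_simulation(x)

    lo, hi = min(candidates), max(candidates)
    train_x = [lo + (hi - lo) * i / (n_train - 1) for i in range(n_train)]
    train_y = [simulate(x) for x in train_x]
    predict = linear_surrogate(train_x, train_y)

    # Rank every candidate with the cheap surrogate, then run the full model on the best few.
    ranked = sorted(candidates, key=predict)[:shortlist]
    best = min(ranked, key=simulate)
    return best, calls


candidates = [i / 200 for i in range(201)]
best, calls = screen_then_simulate(candidates)
print(best, calls)  # calls is 21 (11 training runs + 10 shortlisted), not 201
```

The saving is only as good as the surrogate: if it misranks candidates, the true optimum can fall outside the shortlist, which is why such workflows still validate the shortlist with the full model.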

Messmer of NVIDIA obviously has significant skin in the AI/HPC game. In explaining how deep learning is coming to HPC, he cited specific use cases: a 5,000 times speedup for LIGO signal processing, 50% better accuracy for ITER and clean energy, running 300,000 molecular energetics predictions, and 33% improved accuracy for neutrino detection. The numbers alone are just as impressive as the range of science that AI is being recruited into.

There are two major forcing functions that AI and HPC are ideally suited to work through together. The more data there is, the better the predictions become, and this forces both storage and the network to perform at ever more advanced levels. System architects have always been motivated to build faster machines, but now those machines also need to ingest large data sets and computationally chew on them.

Curioni from IBM discussed how AI is shifting traditional research and development toward a “Cognitive Discovery” method, essentially inverting the triangle of discovery by applying cognitive methods of comprehension first, to reduce the number of brute-force experiments that need to be carried out. Curioni explained that data is the forcing function for machine learning, and plotted where each discipline sits on an X/Y graph of data versus compute.

Matsuoka’s approach was, unsurprisingly, much more systems-based. Rather than explain high-level AI/HPC interactions, he discussed the practical nuts and bolts of what you need to build world-class, competitive computer systems. It turns out that these systems also happen to be phenomenal platforms for demanding AI workloads, and that is by design. Matsuoka clearly has classic engineering rigour behind each of his technology decisions. It isn’t an AI-first approach, but one where AI workloads are changing how physical networks, interconnects and storage are best aligned for performance in both simulation and data ingest.

Matsuoka explained the challenges of the existing AI benchmarks, and how few ways there currently are to characterize the specific performance knobs and levers you need to push and pull to achieve good results. With Post-K under development, Matsuoka said, “Post-K could become the world’s biggest and fastest platform for DNN training.”

This is interesting in two ways. First, yes, Post-K is very much a supercomputer. Second, because it is also built with devices that can manage arbitrary precision, and has an extremely fast, low-latency network with gobs of bandwidth to advanced storage systems, it can also do this whole AI thing rather well. It is a chocolate-and-peanut-butter solution: the two go hand in hand, and the result is better science.

AI is making interventions not only in algorithmic approach but also in how we design and build modern computer systems. The importance of high-level language interfaces, which shield humans from the mathematical complexity of the matrix operations used to speed up computation, should not be underestimated.

The Hardware

No conversation about modern AI is complete without talking about the physical tin and silicon; it is a critical component of how we design computing systems. Walking the ISC show floor, it is clear that major industry players are not only riding the AI wave but are also actively driving it. It isn’t all GPU, all the time, either. However, this is also not a space where a single box with a second-hand graphics card, a copy of TensorFlow and a dodgy Python script is going to cut it. This is about multimillion-dollar investments in a future where AI isn’t just a buzzword, but is fully baked into the scientific and research process.

For example, the NEC SX-Aurora TSUBASA vector engines we have covered in the past are now on show with 8 cards on a motherboard. The A300-8 draws up to 2.8KW at the top end, but in return it provides 19 teraflops peak and, more interestingly, 9.6TB/s of memory bandwidth. In a single box. That is a significant amount of compute, but then again it is up against the NVIDIA HGX-2 platform: as Supermicro recently promised, we found it on the show floor in a 10U server with 16 Volta GPUs delivering 80,000 CUDA cores.
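The figures above can be put on a common footing with a little arithmetic. This Python sketch uses only the numbers quoted for the A300-8 (8 cards, 19 teraflops peak, 9.6TB/s, up to 2.8KW) plus one assumption not stated in the text: that each Volta V100 in the HGX-2 carries 5,120 CUDA cores, NVIDIA's published figure for that part. The helper names are illustrative.

```python
# Figures quoted for the NEC A300-8.
A300_CARDS = 8
A300_PEAK_TFLOPS = 19.0   # aggregate peak across all 8 cards
A300_MEM_BW_TBS = 9.6     # aggregate memory bandwidth, TB/s
A300_POWER_KW = 2.8       # top-end power draw

# Assumption (not from the article): 5,120 CUDA cores per V100 GPU.
V100_CUDA_CORES = 5120
HGX2_GPUS = 16


def per_card(total: float, cards: int) -> float:
    """Split an aggregate figure evenly across cards."""
    return total / cards


def bytes_per_flop(bw_tbs: float, peak_tflops: float) -> float:
    """TB/s divided by Tflop/s: the tera prefixes cancel, leaving bytes per flop."""
    return bw_tbs / peak_tflops


def gflops_per_watt(peak_tflops: float, power_kw: float) -> float:
    """1 TFLOPS = 1,000 GFLOPS and 1 kW = 1,000 W, so the factors of 1,000 cancel."""
    return peak_tflops / power_kw


print(f"{per_card(A300_PEAK_TFLOPS, A300_CARDS):.3f} TFLOPS per card")        # 2.375
print(f"{per_card(A300_MEM_BW_TBS, A300_CARDS):.1f} TB/s per card")           # 1.2
print(f"{bytes_per_flop(A300_MEM_BW_TBS, A300_PEAK_TFLOPS):.2f} bytes/flop")  # 0.51
print(f"{gflops_per_watt(A300_PEAK_TFLOPS, A300_POWER_KW):.2f} GFLOPS/W")     # 6.79
print(f"{HGX2_GPUS * V100_CUDA_CORES} CUDA cores in HGX-2")                   # 81920
```

Roughly half a byte of memory bandwidth per peak flop is a ratio that favors bandwidth-bound simulation codes, which is why the bandwidth figure is the more interesting one; and 16 × 5,120 = 81,920 is where the round figure of 80,000 CUDA cores comes from.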

This is significant in a few ways, but most importantly, complex compute systems that were once available only as bespoke hardware are now being delivered by vendors closer to the white box end of the market, which will really help bring further economies of scale.

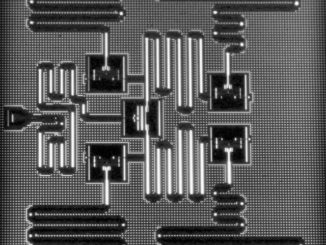

As always, there is a clue in the name “HGX-2 platform.” It is exactly that: a platform on which others can now build. As you can see from the picture, this is certainly a more bare-bones affair than the shiny integrated DGX-2 systems you can buy from NVIDIA, but that is no bad thing.

What it means is that you will see a whole lot more AI-capable systems at ever more balanced price points in the future. We say this because that is exactly how the GeForce business took off: the technology was licensed to a large number of OEMs, who were then able to turn around and bring massive cost savings to the hungry gaming industry. Now the hunger is for advanced hardware to further accelerate science, and if that can be delivered cost-effectively, everyone wins.

The HGX-2 Platform

It’s not just AI and HPC that have sibling rivalries. The words “cloud” and “HPC” in the same sentence cause the same level of indigestion within the community. Dan Reed, provost at the University of Utah and an expert in distributed computing, spoke at a separate ISC panel that turned out to be very similar to the AI and HPC panel, albeit this time on the emotive subject of HPC and clouds, trying to unravel the complexity and interaction of the two to understand the opportunities. Reed’s remarks, “Has the revolution started without us again?” and “The big questions don’t change, but the approaches and answers do,” both rang true; he could just as well have been talking about AI and HPC. Reed is right: the more things change, the more they stay the same.

If cloud and HPC is a challenge, imagine the interface of cloud, HPC and AI. It is a veritable trifecta of confusion and chaos. It need not be. Many of the experts who came together at ISC have, in the past, focused on accelerating complex simulation. Originally, massive data ingest was not the main driver; rather, the staggering computational complexity of n-body problems with billions of elements drove innovation in the computational horsepower needed. It also drove innovation in advanced low-latency networking so that devices could communicate with one another. All of that work, spent over decades understanding the interaction of complex systems, is now starting to pay off.

So rather than thinking of AI and HPC as two bickering siblings, think of them as sharing a beautiful moment on a family holiday, sitting side by side roasting marshmallows by the campfire and singing songs together.

Modern high performance computing is AI, and AI is modern high performance computing. It is a good thing.
