Three months ago, The Next Platform promised a three part conversation about practical computational balance with the final part focusing on software. We have already discussed unplanned data and how to strike computational balance and in this meer three month window, we have also covered over twenty other topics ranging from astrophysics, artificial intelligence, GPU profiling, quantum computing, healthcare, advanced genomics and workflow automation, to containing the complexity of the long tail of science.

The underlying thread of each of these stories has really been a focus on a single theme, that of phenomenal and yet ever more complex software, mostly driven by an increased global focus on artificial intelligence. However, in this exact same three month window, we have also provided insightful and detailed teardowns with in depth reviews of brand new, modern hardware systems such as HGX-2, DGX-2, hybrid X86-FPGA, AMD Epyc silicon and novel Arm server processors. It is fair to say that the world of advanced computing is undergoing somewhat of a hockey stick growth that we have not seen in a few decades, especially when it comes to new silicon and ever more tightly integrated networking.

Each of these hardware announcements and software innovations have each taken place inside the last three months, server and component revenues are also up across the board, it is a boom town, a proverbial gold rush of novel hardware and systems. Albeit in theory we have access to a seemingly never ending stream of more highly performant and exotic hardware, many of these devices have actually yet to fall into the hands of the masses who will need to understand how to best accurately code against them. How will we be able to most efficiently bring actual real world exascale grade science and research into reality with these rapidly evolving boxes?

Conversations about high performance computing without software have always resulted in a subtle and uncontrolled disconnect. Imagine, if you will, the lone researcher, alone in an R&D laboratory working to solve complex questions with computing. Few lone wolf researchers are now building their own systems, they are either renting them from public clouds or being provided with service from a centralized HPC team, be it either local or from a national scale facility. Importantly, the software they need is also often inordinately fragile. It can break and produce unintended output in strange, unintended and mysterious ways. Package management to the rescue, right? Just do apt-get install, yum install, emerge, and all their friends should be all that you would ever need. Alas, if only it was that easy…

Of Modules And Software

Pioneering HPC environments understood the difficulty of software layering, and one of the canonical systems to first get a handle on this was Lmod, which managed the user environment, effectively setting up all the LD_LIBRARY_PATH and environment and modules to essentially trick the operating system to load very specific versions of HDF5, LAPACK, BOOST et. al. The Lmod software originated from The Texas Advanced Computing Center, which understood very early on that managing software and reducing the backpressure on the humans to allow them to support more science was going to be key. So much so that the development of Lmod was bolted onto a $56 million petascale system award from the National Science Foundation. The NSF wanted large centers to not only buy a supercomputer, but also provide the software and skilled humans to be able to run it.

A huge amount of the success of centralized academic supers is in the software they make available to their community. Building software is incredibly time consuming and fraught with potential disaster. As the software continues to become more complex we have spoken at length about technologies to contain it, but what we are going to focus on here, is what actually goes inside the container. A vessel without water is still an empty bucket, you can’t put out fires with empty buckets, and you can’t drink from empty vessels.

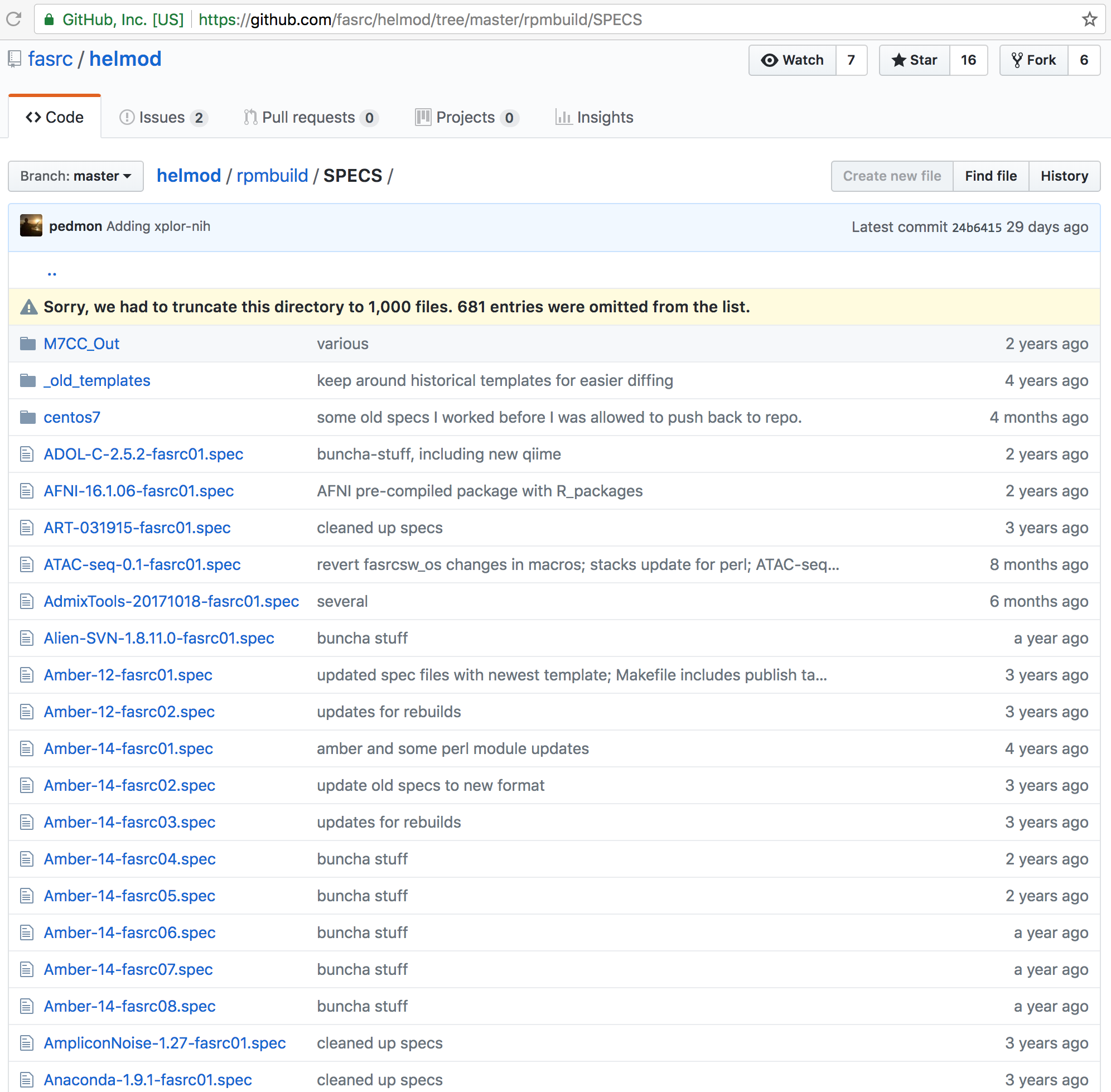

Over time, additions to the TACC Lmod have been made to improve provenance of software from groups such as Harvard University Research Computing, with their cutely named HeLmod, or the Harvard Extensions to Lmod, they use RPM spec files as a basis for their reproducible scientific software stack. The name was obviously a subtle hat tip to the “hell” of circular and complex software dependencies. The GitHub for HeLmod for example currently truncates the list of SPEC files because there are literally too many to show in the web-based user interface.

Even this project does not capture all possible versions and types of software that are available, the Harvard team needed to add a further set of documentation for users to install their own software. A brief glance at the instructions albeit clear, shows it to be not exactly what one would call “user friendly”.

So we clearly have a problem, we know this because there are now a plethora of distinct projects to make building and maintaining scientific software “easy”. One in particular even has the word EASY right in the name. Easybuild has software configurations available for over 1,400 scientific packages using over 30 different compiler toolkits. 30 toolkits. That’s a lot of complexity, every group in the package game are trying as hard as they can to make it as easy as possible for end users. Spack from Lawrence Livermore National Laboratory operate under a similar theory of trying to simplify the problem of installing packages in HPC. We have spoken about singularity hub before that can also manage scientific software as a set of controlled container registries. However, one of the bigger challenge is in the interpreted language space, Each of R, node, go, ruby, python, etc. each also have their own integrated and bespoke embedded sub package management systems. Some like Go are starting to realize they also have a problem with versioning, stating “We need to add package versioning to Go. More precisely, we need to add the concept of package versions.”

Add up all of these individual pieces, and there have to be literally billions of potential combinations of software, compilers and versions. It is totally bewildering and exhausting even for an expert in the field.

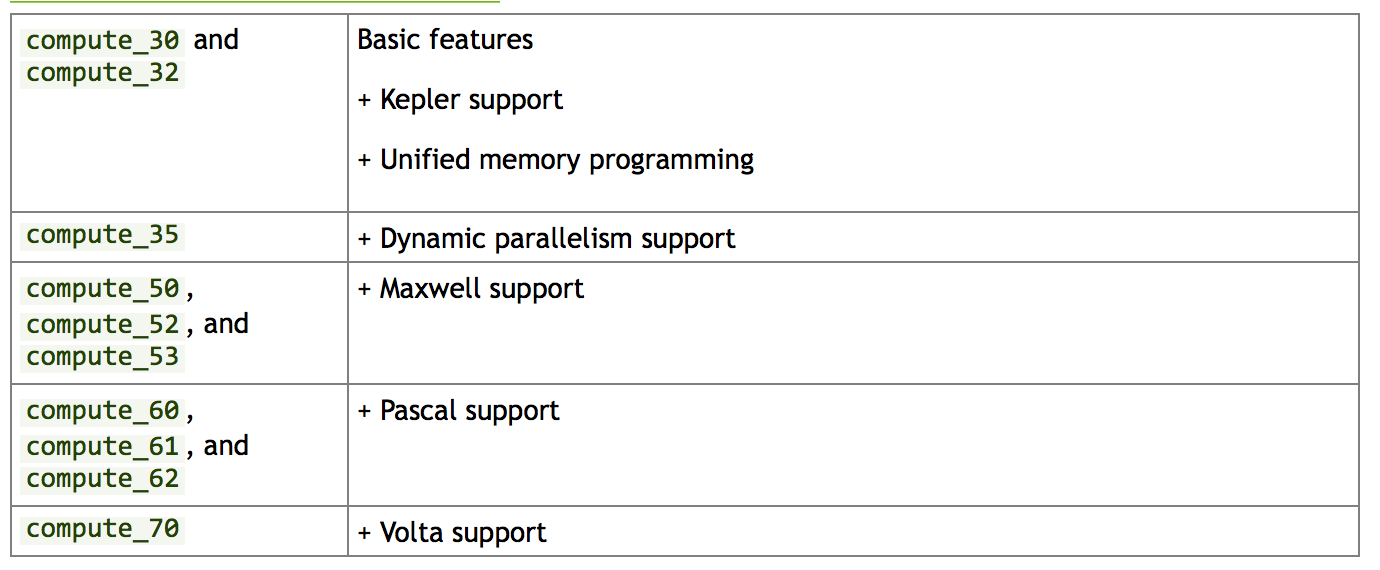

Here’s the thing, remember we mentioned earlier that new and exotic chunks of hardware are now being released at such a rapid rate of knots? Well each one of these new and shiny systems also has an architectural dependency buried right inside their build environments. Not all Arm processors are the same, and not all GPU, FPGA, and X86 systems will provide optimal levels of performance for computing. The CUDA toolkit alone provides multiple optimizations based on assorted and evolving GPU chipsets which can affect software performance significantly and while being forwards compatible, they are just like everything else, not backwards compatible. Built in obsolescence has been a hallmark of computing, everything moves forward, and the software is the child that is unfortunately left behind.

So new hardware, new danger. None of this is exactly new, either. In the 1990s, back when SGI Irix, DEC VMS, Hewlett Packard HP-UX, Sun Microsystems Solaris, and IBM AIX ruled the earth, we managed this whole what binary to run challenge with the ever growing list of hieroglyphics placed inside of ~/.cshrc and ~/.login files. These shell dependencies and a series of if, then, else statements helped us to try to navigate through what was a horrendously complex set of /usr/local/dec,hp,irix,x86, etc. file paths containing both highly duplicated and staggering architectural complexities. It was a time where there was “one of everything.” Calm did appear on the landscape when Linux started to be adopted and stabilized with Red Hat Enterprise Linux and other more serious software distributions in the 2000s. The Intel/AMD 64 bit extensions also started to provide good levels of binary compatibility. The old dinosaurs died out and for a while, the X86 mammals thrived, we only needed to run a few sets of binaries. However, inside of this calm period, software complexity also exploded mostly because a whole bunch of software mostly “just worked” and the environment was a whole lot more rich and inviting, especially without all those dinosaurs wandering around the place.

So new hardware, new danger. None of this is exactly new, either. In the 1990s, back when SGI Irix, DEC VMS, Hewlett Packard HP-UX, Sun Microsystems Solaris, and IBM AIX ruled the earth, we managed this whole what binary to run challenge with the ever growing list of hieroglyphics placed inside of ~/.cshrc and ~/.login files. These shell dependencies and a series of if, then, else statements helped us to try to navigate through what was a horrendously complex set of /usr/local/dec,hp,irix,x86, etc. file paths containing both highly duplicated and staggering architectural complexities. It was a time where there was “one of everything.” Calm did appear on the landscape when Linux started to be adopted and stabilized with Red Hat Enterprise Linux and other more serious software distributions in the 2000s. The Intel/AMD 64 bit extensions also started to provide good levels of binary compatibility. The old dinosaurs died out and for a while, the X86 mammals thrived, we only needed to run a few sets of binaries. However, inside of this calm period, software complexity also exploded mostly because a whole bunch of software mostly “just worked” and the environment was a whole lot more rich and inviting, especially without all those dinosaurs wandering around the place.

This was also a time where computational interconnects were evolving – 1 Gb/sec Ethernet was ratified, InfiniBand was slowly soaking up the majority of the large low latency interconnect budgets in the big shops, with a handful of MPI verbs and protocols, and MVAPICH mostly ruling the roost. It’s a long way from those days now, with completing Omni-Path, InfinbiBand, Ethernet, and now NVSwitch, each providing high speed and low latent interconnects, but also with totally different software stacks. We have also covered the “battle of the InfiniBands” inside this exact same three month window, these network components also need new software, and the old software needs to be relinked and recompiled against new verbs and libraries in order to function correctly.

Left Out In The Cold

Did we mention OpenACC versus OpenMP? Turns out that we have spoken at length about this, even going as far to say that OpenACC was the best thing to happen to OpenMP. Leaving out aspects of any potential computational religion, each version of software and each chunk of shiny new hardware has the serious potential for the users of a new system with an intent to bend the curve of science, being left out in the cold looking in through the window as the cool kids get to play with the latest and greatest of this season’s toys.

As ex-HPC center managers, we are excited about any new technology announcement, but there is a serious problem resulting in major hangovers after a night of over indulgence in new technology. It always leaves us all with serious software headaches, we never seem to learn from our mistakes. These hangovers are also directly reflected in the cost and complexity of external ISV codes. Many advanced mathematics packages are now falling outside the financial reach of independent researchers, further adding to the complexity of “free” open source software such as R. We have discussed the challenges of documentation of software even going as far as stating that the actual documentation is driving the algorithm. However, the real cost of open source software is never exposed, so folks think that it is free. However, when the commercial vendors do have to expose their costs and folks see the bill, and see it is rising, it causes immediate friction. Their costs are rising because the ISVs also need to support this exact same ever more complex and diverse set of computing platforms, they also have software hangovers, and they also need to provide a clean and simple user experience with electronic notebooks and integrated programing environments. However, no one seems to want to pay for it, though.

The Notebook

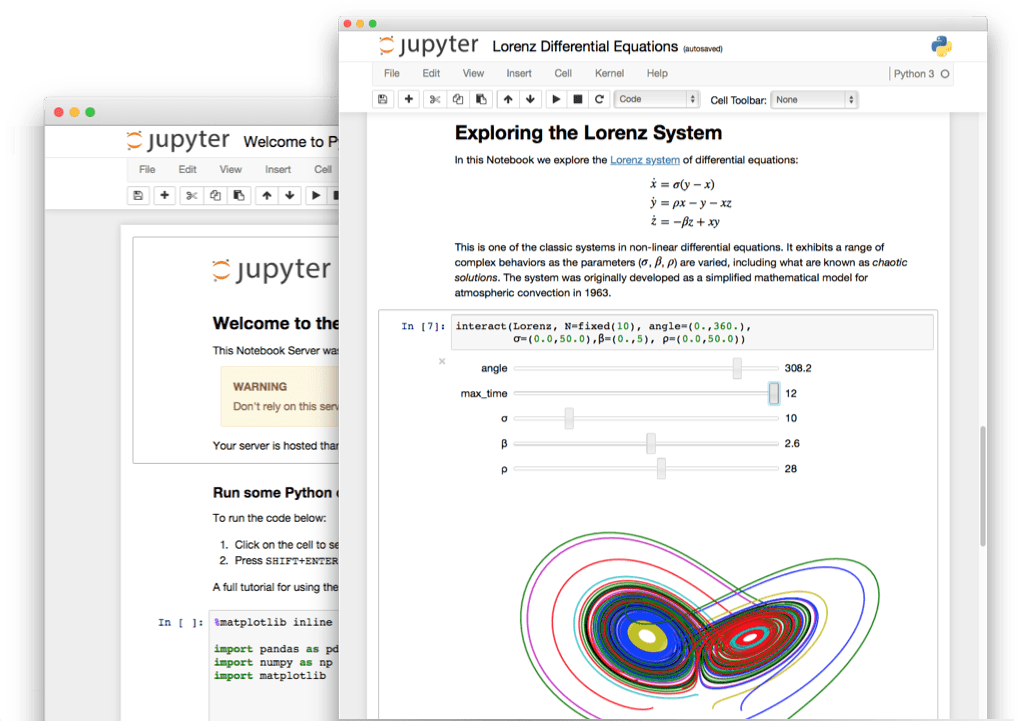

Undeterred as always, members of the academic community reacted to the rising costs of software toolkits by building their own notebooks. This is a kind of app, not a physical machine you put on your lap. Notebooks are clever software where provenance was built right in, providing not only beautiful integrated windows into complex language kernels, but also the ability to store workspaces and replay the results. None have been more popular than Jupyter. Its founders state that the project exists “to develop open source software, open standards, and services for interactive computing across dozens of programming languages.” Dozens of languages, interactive computing. These notebooks are now so ubiquitous across campuses both in academia and industry, and a lot of work is now being carried out inside notebooks as the development communities are further and further away from the actual hardware.

These are bright and interesting days for how humans interact with complex software. Do not misunderstand us here, it is a good thing. The scientific gateways project has been supplying similar ways to reduce the cost of entry for humans and how they can best interact with supercomputers for a long time now. So much so, an actual institute was formed. However, at some point be it a gateway or a notebook, all those identical interdependencies we discussed earlier that used to be managed with Lmod et. al. still need to be installed on an actual computer system, be it sitting inside a contained or not. Especially when the notebooks are then coupled with “big data integration” engines such as Apache Spark and others, all those plethora of versions of the Jupyter and kernel code all still have to line up and everything has to match.

At some point, someone needs to be the system manager and go and physically install it. The documentation for Jupyter states simply python3 -m pip install jupyter, and you are off to the races. That command will work for all but the very most simple of use cases, unless for example you happen to need access to TensorFlow or well basically anything else that touches a modern system it is never going to be a one click install. Re-enter dependency hell. More scientists, spending more time installing more software, enjoying it less and not being able to develop new methods. Still, we must not give up hope, these really are truly amazing times from a hardware perspective, hockey-stick growth in new systems, more sustainable and open software systems will continue to make the landscape a fascinating and interesting place.

However, we absolutely must continue to not lose sight of our software complexity. New hardware systems albeit shiny and interesting, with AI driving all kinds of innovation and associated data flowing into and out of custom systems at an ever higher. But the underlying software will have to be built, linked and integrated so we can have real world functioning end-to-end systems that must deliver actual results that are not only informative and easy to use but also accurate and reliable. The true art of achieving computational balance requires that software (especially scientific software) be integrated directly into the systems. Unfortunately to achieve this balance, there will always be a cost. This real cost will show in either full time employee and salary budget to develop, build, and manage it, or by paying significant money directly to ISVs to build the appropriate stack of software for you so that works directly with the hardware you are purchasing. Software has always had a cost, the new growing complexity of our physical systems and software now has real, and escalating costs that are way beyond the list price of that shiny new “integrated” system you spot and immediately want to adopt and take home from the show floor at the next technology conference.

Software is not just for Christmas. Software is for life.

Be the first to comment