Intel’s many-core “Knights Landing” Xeon Phi processor is just a glimpse of what can be expected of supercomputers in the not-so-distant future of high performance computing.

As the industry continues its march to exascale computing, systems will become more complex, an evolution that will include processors that sport not only a rapidly increasing number of cores but also a broad array of on-chip resources ranging from memory to I/O. Workloads ranging from simulation and modeling applications to data analytics and deep learning algorithms are all expected to benefit from what these new systems will offer in terms of processing capabilities.

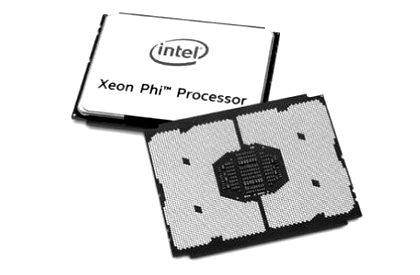

Intel officials unveiled the company’s Knights Landing chips in 2015 and made them generally available last year. Now known as the Xeon Phi 7200 family of processors, Knights Landing is the latest generation in the company’s many-core initiative that is designed to help Intel address the next generation of supercomputers in the HPC space. Xeon Phi grew out of Intel’s “Larrabee” project to develop the chip maker’s first GPU that could compete with the GPU accelerators that were being offered by rivals Nvidia and AMD. After the project was scrapped in 2009, Intel engineers turned their attention to building a many-core coprocessor chip using its X86-based Intel architecture that could fill the same role of working alongside main processors to drive system performance while maintaining energy efficiency.

Knights Landing, with up to 72 cores based on a heavily modified “Silvermont” Atom microarchitecture that has been targeted at HPC, is different from its predecessor. It can be used as the primary processor as well as a coprocessor and includes an integrated fabric. In addition, along with two memory controllers for DDR4 memory, Knights Landing also comes with eight memory controllers for MCDRAM (Multi-Channel DRAM), a 3D stacked on-package memory, with the two memory systems entirely separate.

The Xeon Phi 7200 chips – with a growing number of cores and multiple memory systems – are representative of what’s to come as supercomputers become even more complex on their way to exascale computing. Applications will be built to take advantage of the many cores and other capabilities and new resources within the chips. What will be a challenge for software developers is figuring out how to best run legacy applications that haven’t been tuned for the upcoming capabilities but are still crucial to their operations.

One idea is co-scheduling such workloads to run on the same chip. The goal is to choose workloads that leverage different parts of the chip to allow them to run at the same time on a set of cluster nodes while not competing for the same resources, rather than the traditional method of allocating nodes to a particular application. A group of researchers from Germany recently tested the idea using Knights Landing chips, expecting that by running the applications at the same time on a shared set of cluster nodes, any slowdown in the individual tasks could be offset by a reduced overall runtime. Co-scheduling already is being used on supercomputers with multi-core processors to leverage underutilized nodes. The researchers – Simon Pickartz and Stefan Lankes of the Institute for Automation of Complex Power Systems at Aachen University and Jens Breitbart of Bosch Chassis Systems Control – wanted to see the impact on co-scheduling scenarios of running two applications at the same time that leverage different memory hierarchies, such as those provided in Knights Landing chips.
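The trade-off the researchers were betting on can be illustrated with a little arithmetic. The following is a minimal sketch, not the study's method: the runtimes and slowdown factors are hypothetical, the function names are invented for illustration, and it pessimistically assumes each job stays slowed down for its entire run, even after its neighbor has finished.

```python
def exclusive_makespan(runtimes):
    """Total time when jobs run back to back, each with the nodes to itself."""
    return sum(runtimes)


def coscheduled_makespan(runtimes, slowdowns):
    """Total time when jobs share the nodes and run concurrently.

    Each job is stretched by its own slowdown factor (>= 1.0), and the
    set finishes when the slowest stretched job finishes.
    """
    return max(t * s for t, s in zip(runtimes, slowdowns))


# Hypothetical numbers, NOT taken from the study.
runtimes = [100.0, 80.0]   # seconds when run alone
slowdowns = [1.25, 1.5]    # each job is slower when sharing the chip

sequential = exclusive_makespan(runtimes)
shared = coscheduled_makespan(runtimes, slowdowns)
saving = 1.0 - shared / sequential

print(f"exclusive: {sequential:.0f} s, co-scheduled: {shared:.0f} s, "
      f"saving: {saving:.0%}")
```

In this made-up case both jobs individually run slower, yet the pair finishes sooner than it would back to back, which is the effect the researchers hoped to see.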

The details of the study, titled Co-scheduling on Upcoming Many-Core Architectures, can be found here. One possible cluster mode for the tests was the quadrant mode, where the chip is divided into four virtual quadrants. However, the researchers chose to run the cluster in the Sub-NUMA Clustering (SNC) mode, which enables the software to see the quadrants as individual NUMA domains and allows NUMA-aware applications to take advantage of lower latencies by reducing memory access to remote quadrants. Memory was configured in flat mode, where “the memory extends the physical address space of the system and is explicitly exposed as NUMA domain to the software. Thus, in the quadrant cluster mode one additional NUMA domain shows up while there are four in the SNC mode,” they wrote.
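To make the quoted NUMA description concrete, here is a small sketch of how the domain count works out in flat memory mode. It models only the counts given in the quote (one DDR4 domain plus one MCDRAM domain in quadrant mode, four plus four in SNC mode). The function name, the mode labels and the numbering of MCDRAM domains after the DDR4 ones are assumptions made for illustration.

```python
def flat_mode_numa_domains(cluster_mode):
    """Return (memory_type, domain_id) pairs visible to software in flat mode.

    Assumption: MCDRAM domains are numbered after the DDR4 domains.
    """
    # Number of DDR4 domains the software sees in each cluster mode.
    clusters = {"quadrant": 1, "snc4": 4}[cluster_mode]
    ddr = [("DDR4", i) for i in range(clusters)]
    # Flat mode exposes MCDRAM as additional domains, one per cluster.
    mcdram = [("MCDRAM", clusters + i) for i in range(clusters)]
    return ddr + mcdram


for mode in ("quadrant", "snc4"):
    domains = flat_mode_numa_domains(mode)
    print(mode, len(domains), domains)
```

On Linux, a NUMA-aware application or a tool such as `numactl` (for example via its `--membind` option) could then direct allocations to a chosen domain. Which domain IDs correspond to MCDRAM on a given system should be checked rather than assumed.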

The researchers used two kernels – the EP and CG – of the NAS Parallel Benchmarks (NPBs) suite to test both performance and power efficiency. The CG kernel computes an approximation to the smallest eigenvalue of a large sparse matrix, while EP computes statistics from Gaussian pseudorandom numbers. The two have distinct resource demands, which fits with a co-scheduling scenario: CG tends toward irregular memory accesses and communication, with performance limited by available memory bandwidth, while EP is not dependent on available main memory bandwidth. Both were tested for scalability and power consumption using DDR4 and MCDRAM respectively, and then when co-scheduled in disparate configurations.

What they found was that when running individually, the CG kernel at eight threads runs better using MCDRAM than DDR4, with performance bumps of 10 percent to 20 percent for thread counts of 40 or more. Power consumption increases as the thread count rises, but while performance is best at 60 threads, optimal power efficiency is hit at 36 threads (for DDR4 memory) and 40 threads (with MCDRAM). Energy efficiency is improved by up to 20 percent with MCDRAM. For the EP kernel, there is an almost constant increase in power consumption as the thread count rises. In the co-scheduling scenarios, the kernels were first tested with both allocating memory from MCDRAM, then with both allocating from DDR4, and finally with each kernel using a different memory type.
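The gap between the fastest and the most power-efficient thread count follows from how efficiency is defined: performance divided by power drawn. The sketch below shows the idea with hypothetical measurements. The numbers and the function name are invented for illustration and are not the study's data.

```python
def best_thread_counts(samples):
    """Given (threads, performance, watts) tuples, return a pair:
    (thread count with the highest performance,
     thread count with the highest performance per watt).
    """
    fastest = max(samples, key=lambda s: s[1])
    most_efficient = max(samples, key=lambda s: s[1] / s[2])
    return fastest[0], most_efficient[0]


# Hypothetical measurements, NOT taken from the study:
# (threads, performance in arbitrary units, power in watts)
samples = [
    (20, 50.0, 100.0),   # 0.50 units per watt
    (40, 90.0, 150.0),   # 0.60 units per watt
    (60, 100.0, 200.0),  # 0.50 units per watt
]

fastest, most_efficient = best_thread_counts(samples)
print(f"fastest at {fastest} threads, most efficient at {most_efficient} threads")
```

When performance flattens out while power keeps climbing, as in this made-up data, the efficiency peak lands at a lower thread count than the performance peak.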

What the researchers found is that co-scheduling on many-core architectures can be useful, with an increase in overall efficiency of up to 38 percent. However, they were surprised to find that they couldn’t further improve performance by having the CG kernel exclusively access MCDRAM while dedicating DDR4 memory to the EP kernel. They suggested the problem could be conflicts within the on-die mesh caused by EP kernel threads.

“Although this scenario realizes an exclusive assignment of the available resources; that is, the two different memory types, the interconnect is still a shared resource and becomes the bottleneck,” they wrote, adding that more study needs to be done to determine for sure whether that is the case, including whether “a more sophisticated pinning of the threads could reduce the average path length to the memory controllers.”
