The thing we hear time and time again from the hyperscalers is that technology is a differentiator, but supply chain can make or break them. Designing servers, storage, switching, and datacenters is fun, but if all of the pieces can’t be brought together at volume, and at a price that is the best in the industry, then their operations can’t scale.

It is with this in mind that we ponder Microsoft’s new “Project Olympus” hyperscale servers, which it debuted today at the Zettastructure conference in London. Or, to be more precise, the hyperscale server designs that it has created but that still need some work, which the company is soliciting from the Open Compute Project Community in the hopes of getting more users to adopt the machines, thereby creating a broader and deeper user base that in turn cultivates a richer and more diverse supply chain. (Breathe in now.)

Not that the Open Cloud Server designs that Microsoft donated to the Open Compute Project in January 2014 have done badly. As we outlined in a history of those machines and how Microsoft is building out its vast fleet of servers (which we hear is well north of 1.2 million machines and growing very, very fast), Microsoft was doing semi-custom servers, tweaking here and there, for years before it finally decided to build a single, modular server that would meet the diverse needs of its workloads and allow it to get better economies of scale from manufacturing. The Open Cloud Server design, including various server and storage sleds, was completed in 2011 and in 2012 the supply chain, with Quanta Computer as the main manufacturer, was lined up. The first Open Cloud Servers rolled into Azure datacenters in early 2013, almost four years ago, and Microsoft added WiWynn as a second supplier. Since that time, Hewlett Packard Enterprise and Dell have also started selling variants of the Open Cloud Server (presumably to Microsoft but also to others who want to emulate Microsoft), which means companies that like the Microsoft design can, if their volumes are high enough, find multiple sources for the gear.

This is the real struggle that all of the hyperscalers, cloud builders, telcos, and large enterprises wrestle with. And, to be honest, if you tried to place an order for 10,000 machines through the regular channels of HPE, Dell, Lenovo, Inspur, or Sugon, you would have trouble, too.

The Microsoft Open Cloud Server machines may have expanded the supply chain and the market for Open Compute iron, but this would also have happened if Microsoft had just adopted Facebook’s own Open Compute system and storage designs. But Microsoft’s engineers have their own ideas on how to build a system that supports its very diverse workloads (which are arguably much more compute intensive and varied than what Facebook needs, as you can see from the social network’s system designs). Rather than switch to Facebook’s variant of Open Compute gear five years ago, or again last year when it had a second chance as it started work on the Project Olympus machines, Microsoft did its own iron.

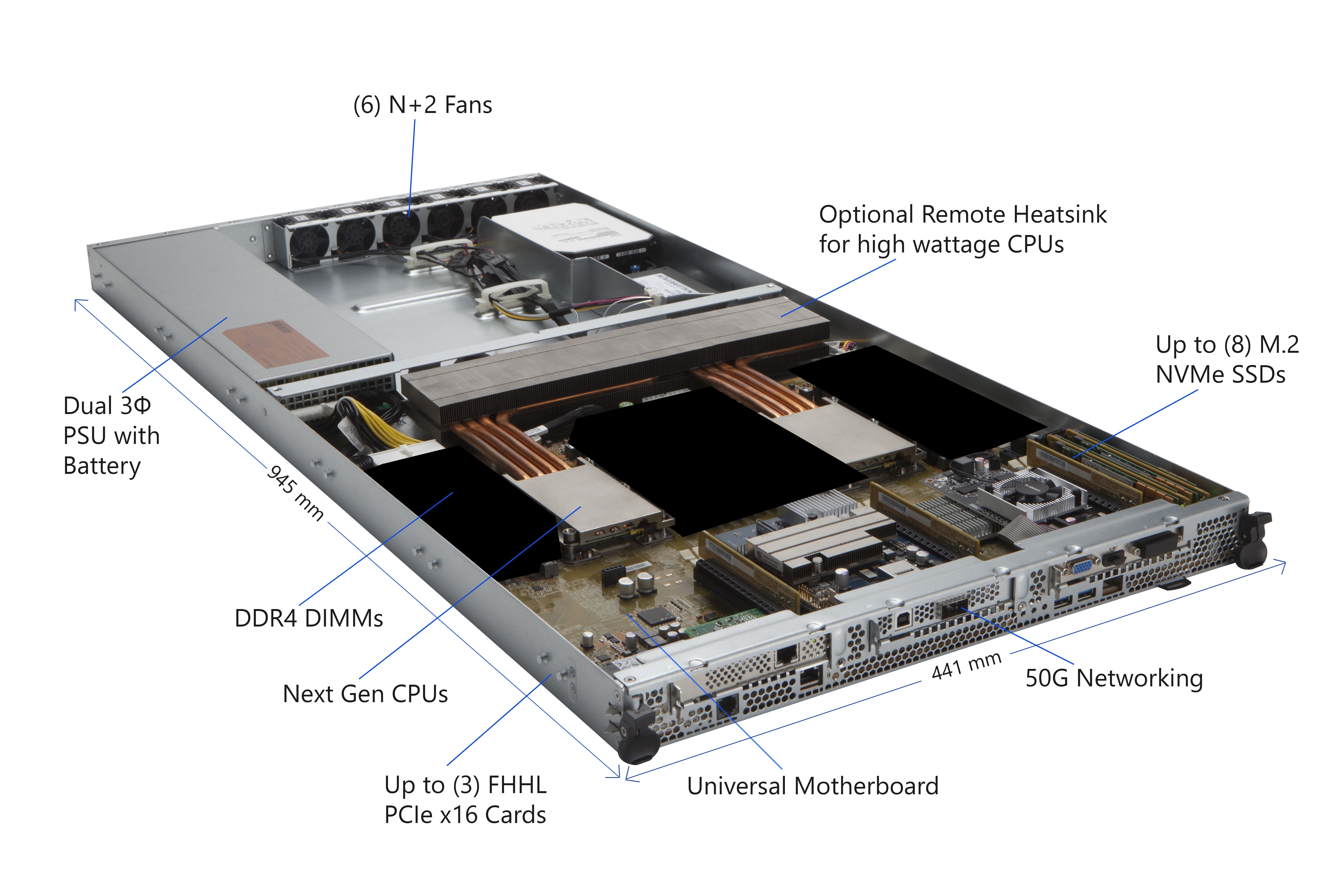

The interesting bit is that Microsoft is going retro and is creating a streamlined, free-standing, rack-based server node that can have storage extension shelves added to it for a much more intense storage-to-compute ratio where necessary. The Olympus server is designed to have CPU compute (presumably starting with Xeon E5 processors from Intel) as its centerpiece, but with a new “universal motherboard” design it looks like it can accommodate other kinds of processors. And, importantly, because the server node is not clogged up with disk drives or flash SSDs, it has sufficient room to add in various kinds of accelerators that come in standard PCI-Express form factors. This is in stark contrast to the Open Cloud Servers, which had room for a mezzanine card in each two-socket sled that could be used for a pretty modestly powered GPU or FPGA accelerator or maybe a SmartNIC with FPGA acceleration for networking functions.

It is interesting to us that Google has always used rack-style machines and never indulged in building blade or modular servers, despite some of the obvious benefits. In a way, a Google datacenter is a blade server, and each compute element in each rack is a blade that hooks into a ginormous Clos network built from homemade networking gear. (It depends on how you want to look at it.) What seems obvious to us is that the 1U and 2U server form factors are the most common ones in the datacenters of the world, and finding manufacturers who can build such machines is a lot easier than requiring custom enclosures and server sleds.

What seems clear now in hindsight is that some of the shiny new racks of servers and JBOD disk enclosures that we spied on a recent tour of Microsoft’s datacenters in Quincy, Washington, that are going into its brand new hyperscale facility there are probably not Olympus machines. Microsoft has not confirmed what the machines in the right-hand racks in the photo below are:

The machines on the left sure look like the 12U Open Cloud Server enclosures, with three bays of machines per rack on the bottom and some switching on top plus extra room for expansion. The machines on the right could be a mix of prototype Olympus machines and storage bays – it is hard to be sure from the photo, and intentionally so. After a second hard look, these machines might be a switch row. But they are probably not a modular or blade server design as we initially expected, and given that the Project Olympus specs are not done, they probably are not Olympus machines, either. What is clear is that Microsoft will be phasing out Open Cloud Server iron and moving to Olympus gear, and that over time that new Gen 5 datacenter in Quincy will be loaded with Olympus iron. (For all we know, Microsoft has created the Olympus chassis to do serving, storage, and networking workloads, with the machines functioning as modular switches as well as storage. That would be truly interesting.)

In any event, Kushagra Vaid, general manager of server engineering for Microsoft’s cloud infrastructure, has previewed the Project Olympus machines and they are retro while at the same time being modern. Take a look:

Vaid explained in a blog post announcing the Project Olympus effort that the Olympus machines would come in 1U and 2U rack form factors and would have what he described as a universal motherboard and a new rack design that had a power distribution system that would be compatible with various power intakes that are available around the globe.

Vaid showed off the 1U server design in the exploded view above, and as you can see, it has two processors in the dead center of the board, wrapped by DDR4 memory sticks that have their own dedicated heat sinks to help pull hot air off the memory. Our guess in looking at this is that each socket can support eight memory slots, and with 64 GB sticks, that means a two-socket Olympus machine using Intel Xeon E5 processors will be able to hold 1 TB of main memory. (Swap out the processor sockets, and the Olympus machine could also support a Cavium ThunderX or an Applied Micro X-Gene2 ARM server chip, or maybe even a future IBM Power9 chip.) The system shown has copper water blocks that come off the processors into an optional remote heat sink, which leads us to believe Microsoft is positioning the system to have hotter processors but not require very tall heat sinks. (That is a clever bit of engineering, there.)
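The memory arithmetic behind that guess is easy to check. The sketch below is only a back-of-the-envelope calculation; the function name is ours, and the eight slots per socket is our reading of the exploded view rather than a confirmed figure.

```python
# Back-of-the-envelope main memory capacity for a multi-socket server.
# The slot count per socket is a guess from the exploded view, not a confirmed spec.

def memory_capacity_gb(sockets: int, slots_per_socket: int, dimm_gb: int) -> int:
    """Total capacity in GB when every slot holds a memory stick of the same size."""
    return sockets * slots_per_socket * dimm_gb

# Two sockets, eight slots each, 64 GB sticks
guess_1u = memory_capacity_gb(sockets=2, slots_per_socket=8, dimm_gb=64)
print(f"{guess_1u} GB = {guess_1u / 1024:.0f} TB")
```

Fatter or skinnier memory sticks scale the total linearly, so half the capacity per stick yields half the capacity per machine.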

The 1U Olympus server has room for two SATA storage devices in the back of the chassis (you can see one drive installed on the back right side), and interestingly has room for up to four M.2 flash sticks, which can be used for local storage and which offer better thermals, better engineering flexibility, and perhaps better pricing than flash SSDs, which is presumably why Microsoft is using them. (The picture says Olympus motherboards can support eight, but the motherboard spec below says it can only support four. We believe the motherboard spec.)

The Olympus machine has a power supply with a battery built in, and Microsoft is very big on using batteries inside of a server instead of giant datacenter-sized uninterruptible power supplies, as Vaid explained last year at the Open Compute Summit.

This Olympus machine with a 1U rack form factor has three PCI-Express x16 slots, which is the neat bit as far as we are concerned. All three slots are front accessible, which makes it easy to swap out network cards or various kinds of accelerators. All of the PCI-Express cards are standard full height half length cards, not like the non-standard mezzanine card used in the Open Cloud Server systems. In the machine shown, the center PCI-Express slot has a 50 Gb/sec Ethernet adapter card slotted in, and the card on the right looks like it has some kind of accelerator in it while the one on the left looks like it has a placeholder in it. The Olympus motherboard has two USB 3.0 ports and an RJ45 1 Gb/sec Ethernet connector for management that come out of the front of the chassis underneath the PCI-Express slots. The motherboard also supports one 10 Gb/sec SFP+ connector (not off the front panel) and a VGA connector if you want to attach a monitor to the device locally. The whole shebang is cooled by six 40 mm muffin fans at the back. Despite all the stuff crammed in there, it looks pretty airy, which is a good thing.

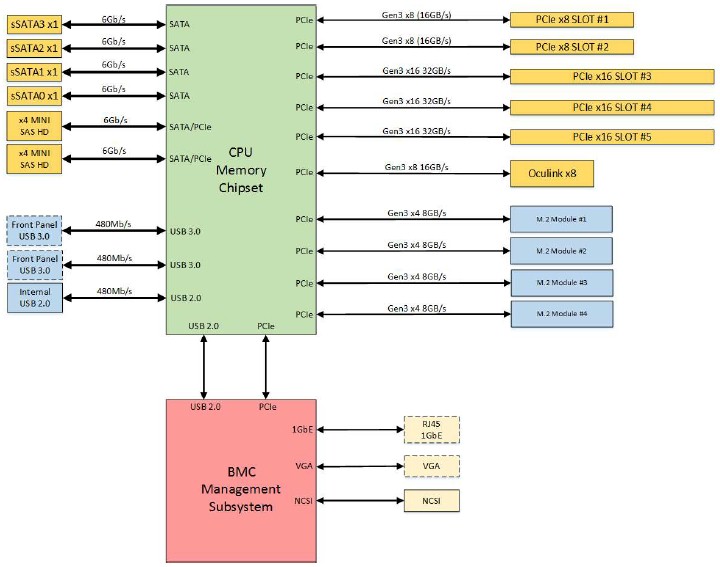

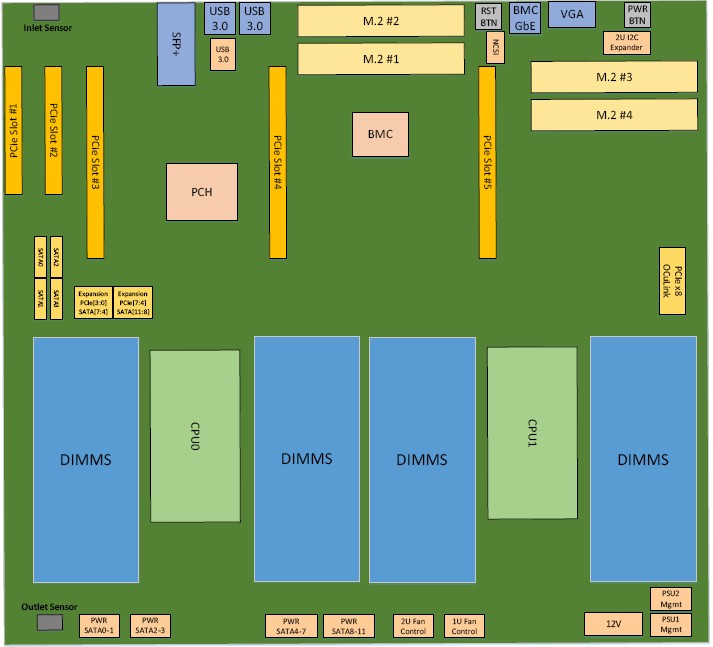

Here is what the motherboard layout looks like:

If you look at the Olympus motherboard spec above carefully, you can see what a 2U version of this machine might have if all of the ports and slots were turned on.

The spec supports not only three PCI-Express x16 slots, but also two x8 slots. And if you look at the bandwidth on those, you can see that the spec is supporting the future PCI-Express 4.0 protocol. As far as we know, the future “Skylake” Xeon E5 v5 processors are not supposed to support PCI-Express 4.0, but perhaps Intel has moved that up to be more competitive with Power9, which will support the faster PCI-Express when it ships in the second half of 2017. Interestingly, the Olympus motherboard spec has an OCuLink x8 port, which is an optical port used for supporting remote graphics and in this case is used to have a second pipe into the FPGA accelerator. It also includes four SATA ports and two x4 Mini SAS ports. So we imagine the 2U variant of this Olympus system will have more storage and peripheral expansion for specific workloads. The spec calls for support for up to 32 memory sticks and a dozen SATA devices.
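For those who want to check how slot bandwidth gives away the PCI-Express generation, here is a rough sketch. It uses the standard per-lane transfer rates (8 GT/sec for PCI-Express 3.0, 16 GT/sec for 4.0) and 128b/130b encoding, and ignores packet and protocol overhead; the function and constant names are ours, and the numbers are not taken from the Olympus spec itself.

```python
# Approximate raw PCI-Express bandwidth per direction, ignoring protocol overhead.
# Per-lane transfer rates and 128b/130b line encoding are the standard figures
# for PCI-Express 3.0 and 4.0; they are not values read from the Olympus spec.
GT_PER_SEC = {3: 8.0, 4: 16.0}   # gigatransfers per second, per lane
ENCODING = 128 / 130             # payload bits per bit on the wire

def pcie_gb_per_sec(gen: int, lanes: int) -> float:
    """One-direction bandwidth in GB/sec for a link of the given generation and width."""
    return GT_PER_SEC[gen] * ENCODING / 8 * lanes

for gen in (3, 4):
    for lanes in (8, 16):
        print(f"PCI-Express {gen}.0 x{lanes}: {pcie_gb_per_sec(gen, lanes):.2f} GB/sec")
```

A slot quoted at roughly double what the same lane count delivers under PCI-Express 3.0 is the tell that the 4.0 protocol is being assumed.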

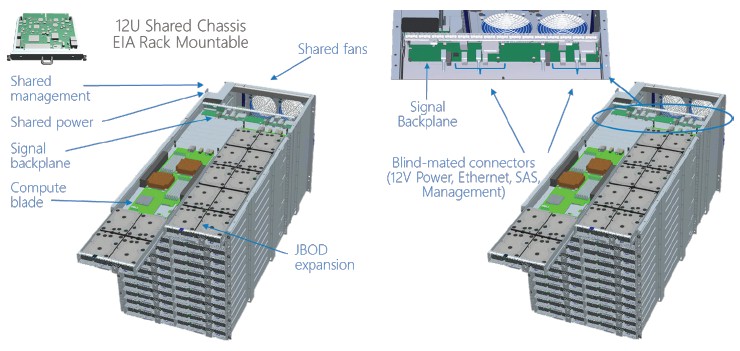

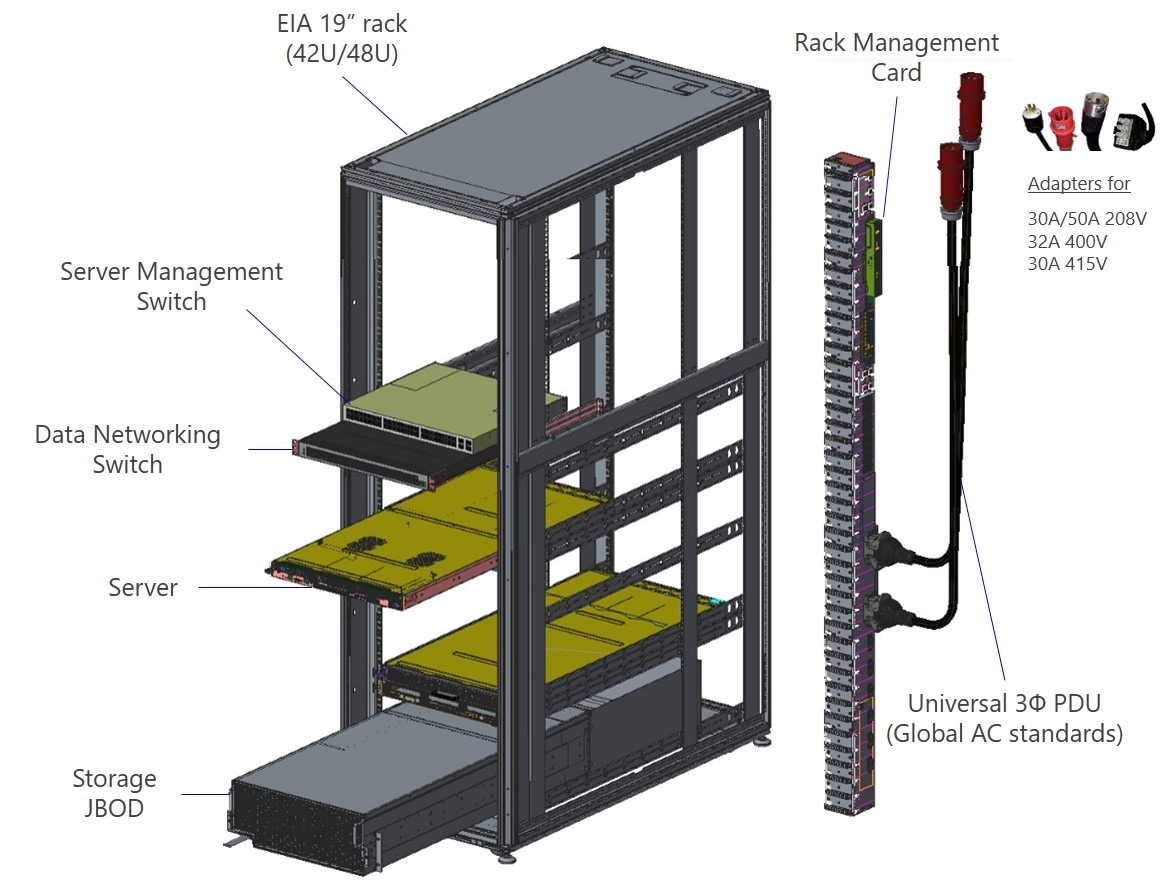

As for storage expansion for these nodes, what is clear from this exploded rack view is that Microsoft will be employing JBOD enclosures that will presumably link to the server over those Mini SAS ports.

Microsoft is building standard 19-inch racks in 42U and 48U heights for the Olympus systems and has also created a universal power distribution unit for the rack, which meets AC power specs that are common in datacenters around the globe, including 208V at 30A and 50A, 400V at 32A, and 415V at 30A. You can look at the specs for the motherboard and the initial system at the Open Compute GitHub site here.
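To put those power intakes in perspective, the sketch below converts each one into apparent power per rack feed. It assumes, and this is our assumption rather than something the spec excerpt above states, that these are balanced three-phase feeds with the voltage quoted line-to-line; it also ignores any derating for continuous loads.

```python
import math

# Input feeds listed for the universal power distribution unit: (volts, amps).
FEEDS = [(208, 30), (208, 50), (400, 32), (415, 30)]

def three_phase_kva(volts_line_to_line: float, amps: float) -> float:
    """Apparent power of a balanced three-phase feed: sqrt(3) * V * I, in kVA.

    Assumes three-phase delivery, which is our assumption, not a stated spec.
    """
    return math.sqrt(3) * volts_line_to_line * amps / 1000

for volts, amps in FEEDS:
    print(f"{volts}V at {amps}A: {three_phase_kva(volts, amps):.1f} kVA")
```

Under that assumption, the feeds span roughly a factor of two in deliverable power, which is the range a single universal power distribution unit would have to cope with.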

“To enable customer choice and flexibility, these modular building blocks can be used independently to meet specific customer datacenter configurations,” Vaid explained in his post. “We believe Project Olympus is the most modular and flexible cloud hardware design in the datacenter industry. We intend for it to become the foundation for a broad ecosystem of compliant hardware products developed by the OCP community.”

Vaid said that the Project Olympus designs were about half complete, and that Microsoft was opening up the specs before they were done to solicit input and engineering help from the Open Compute community.

So why the big change? We spoke to Vaid via email, and he explained the rationale behind using the new designs. “We are moving to a full 1U design to accommodate high VM density scenarios, accelerators, high speed networking, and headroom for future hardware for technologies such as machine learning and so forth,” Vaid wrote. “Additionally, the 1U design is more efficient at cooling and has higher power efficiency.”

Vaid said that the final specs and production hardware for the Project Olympus machines would be done by the middle of 2017, and that Microsoft would be rolling machines into its datacenters after that. As for our speculation that these machines could support all kinds of different processors, all Vaid would say is: “As an open source solution, we hope to attract a range of partners across the ecosystem. More details on the components and partners will be revealed in the future.”

We think it is a safe bet that Skylake Xeons from Intel and Zen Opterons from AMD will be supported initially in the Olympus machines, and it may be other parties that create a variant of the universal motherboard that supports ARM and Power chips if Microsoft itself doesn’t do it. We wonder if the “Zaius” Power9 motherboard from Google and Rackspace Hosting can slide into the Olympus chassis. . . . The half-done observation that Vaid was talking about might mean that it has done the work to create a Skylake Xeon system and that Microsoft was looking for the community to do the Opteron, ARM, and Power bits. That is what it looks like to us.

A fundamental flaw in the cheap rack server model is that while CPU, cache, memory, and flash storage technologies have improved exponentially in the last 10 years, local LAN network latency (TCP/IP stack, NIC buffers, router hops, firewalls, load balancer times, etc.) has not improved at all.

Next generation compute models need to handle extreme amounts of data, which means getting data closer to the compute engine in much shorter time frames.

In relative terms, if CPU-to-cache, CPU-to-memory, and CPU-to-flash storage access times are scaled to seconds, minutes, and days, then network write and replication updates (as in N-tier models with lots of small systems) would be measured in months.

Even if rack servers are cheaper and more available, how does one reconcile using rack models with the above when the overriding goal is to reduce overall solution latency?

We are at the beginning of network tier consolidation, whereby next generation models will be based on fewer but much larger blade-type servers (with terabytes of non-volatile local memory) that utilize high speed interconnects (e.g. CAPI, RoCEv2, and others); that is, clusters of big systems.