The most successful players in the information technology space are those that can adapt, again and again, to tumultuous change. With a vast installed base and plenty of technical talent, it is unwise to count VMware out as the enterprise customers who have embraced its server virtualization tools ponder how they want to evolve to something that looks more like what a hyperscaler would build.

Many companies have faced these moments, but here is perhaps a pertinent parallel.

IBM’s vaunted mainframe business reacted successfully to the onslaught of minicomputers in the late 1970s and early 1980s, spearheaded by Digital Equipment and its venerable VAXes, with its System/36, System/38, and AS/400 systems and baby mainframes like the System 9370s that scaled down in performance and price to meet DEC on the field and expand IBM’s own market. When Unix systems took off in the late 1980s, IBM jumped into Unix despite the cannibalization of its mainframe and minicomputer lines and, after years of being an also-ran against Sun Microsystems (now part of Oracle) and Hewlett Packard (now a separate enterprise company that is looking for its own future), Big Blue put itself on a very aggressive technology roadmap and competed aggressively to win the lion’s share of the Unix market.

IBM survived these onslaughts, but there were consequences. For one thing, the whole point of the System/360 mainframe – the very reason Big Blue in 1964 bet the company, in a very serious way – was that it provided a unified product line for its diverse products that would scale from small customers to the largest glass houses. Computing was young back then, but IBM had the right idea. And to defend against competition in the decades after the mainframe ascended to the top of the datacenter hierarchy, IBM made its own product lines so complex, with so much competition between them, that its own sales force as well as its customers were confused about what they should buy. Moreover, as IBM started to win battles in the Unix space, it was bleeding mainframe and AS/400 customers, who moved off IBM software platforms and have never returned. IBM won the Unix battle, but lost the datacenter war. It did not become the dominant supplier of X86 iron, it did not even make its own X86 server chips as it could have, and it did not have any control over the Windows Server or Linux operating systems that came to dominate data processing.

The point is, transitioning from one well-built, profitable legacy platform to another is a very tough thing to do. IBM is still in business, obviously, but it is not the systems company that it could have been. VMware, for its part, watched Microsoft’s embrace and extend strategy in the 1990s very carefully, when Microsoft had to pivot its business for the Internet. And it has learned.

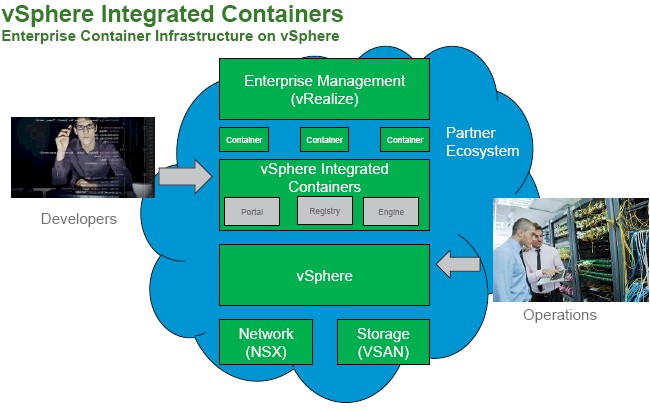

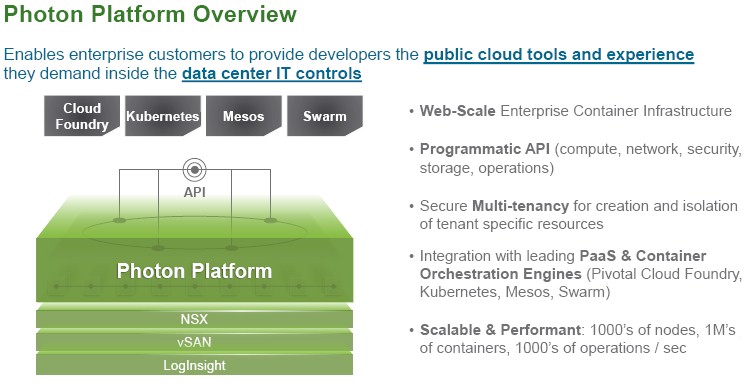

VMware has similarly and brilliantly built a base of 500,000 users of its ESXi hypervisor and vSphere management tools for server virtualization, and it has embraced both the OpenStack cloud controller and Docker containers atop ESXi with vSphere Integrated OpenStack (VIO) and vSphere Integrated Containers (VIC). It even has its own Unix system analog for the containerized datacenter called the Photon Platform, which is intended for greenfield installations where VMware shops want to move away from server virtualization to have a clean-slate container environment. VIO and VIC allow VMware to maintain its installed ESXi base by weaving actual OpenStack and Docker code around the hypervisor, while the Photon Platform allows VMware to create a new virtualization substrate for containers and platform clouds. That gives it something to sell to those who are looking at the emerging alternatives to server virtualization, which are usually open source, such as stacks based on Mesos, Kubernetes, and Docker Swarm.

For the first version of VIC, which was announced this week as a public beta starting in the third quarter after a run of private betas to harden the code a bit, VMware is supporting the Docker APIs and will be able to run Docker images as virtual machines on top of ESXi 6.0 and higher.

“Here is the problem with containers we are trying to solve,” explains Karthik Narayan, senior product manager of cloud native apps at VMware. “Today people do two things. Some people run containers on bare metal, and the problem with that is that you are limited to the size of the hardware you are running on and if you want to have additional servers you have to have some kind of clustering mechanism, and that is what Docker Swarm and these other things are for. The other, more established way that people run containers is inside virtual machines. This way, you already have policies and practices on how developers and administrators can go and get VMs and deploy them, with service portals and role-based access controls and so on. But once developers get the VMs, they have to set up their own containers.”

This container-on-VM approach has problems. Seeing what is running inside of a VM, which generally has lots of containers, is not trivial. VMs assume they have an operating system, not a container with code snippets. Moreover, says Narayan, just sizing the VMs to run different kinds and numbers of containers is difficult; customers get it wrong, and frankly, this is precisely the kind of thing that should be automated. Finally, because the containers are statically configured on the VMs, which are locked in place and size because of the configurations required by the containers, if there is excess capacity, you don’t know it and can’t reclaim it easily. This defeats the purpose of virtualization, which is to leave no clock of CPU, no byte of memory, and no bit of bandwidth unused if possible.
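To make the stranded-capacity problem concrete, here is a minimal sketch of the arithmetic. The VM sizes, container names, and memory figures are all invented for illustration and are not from VMware; the point is only that memory reserved for a statically sized VM and not used by its containers is invisible to the hypervisor.

```python
from dataclasses import dataclass, field


@dataclass
class Container:
    name: str
    mem_mb: int  # memory the container actually needs (made-up figure)


@dataclass
class StaticVM:
    name: str
    mem_mb: int  # memory reserved for the VM up front (made-up figure)
    containers: list = field(default_factory=list)

    def used_mb(self) -> int:
        # Memory consumed by the containers packed into this VM
        return sum(c.mem_mb for c in self.containers)

    def stranded_mb(self) -> int:
        # Reserved but unused memory that cannot easily be reclaimed
        return self.mem_mb - self.used_mb()


# Two hypothetical, identically sized VMs with different container loads
vms = [
    StaticVM("vm-a", 8192, [Container("web", 512), Container("cache", 1024)]),
    StaticVM("vm-b", 8192, [Container("worker", 2048)]),
]

total_reserved = sum(vm.mem_mb for vm in vms)
total_stranded = sum(vm.stranded_mb() for vm in vms)
print(f"reserved={total_reserved} MB, stranded={total_stranded} MB")
# prints "reserved=16384 MB, stranded=12800 MB"
```

In this toy case more than three quarters of the reserved memory sits idle, which is the kind of waste that sizing by hand tends to produce.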

The neat trick with VIC is that VMware is taking a Docker image and running it as a VM rather than within a VM. VMware creates a virtual container host, which is analogous to a physical server that runs containers in bare metal mode, and the Docker images run on top of a Linux kernel that itself is running on top of the ESXi hypervisor. (In essence, the container engine, like Docker Engine, is embedded into vSphere itself.) That bare bones Linux kernel is a trimmed down variant of the Photon Linux that VMware created for the Photon Platform, and it has been cut back to about a 25 MB footprint – just enough to run a Docker image. These virtual container hosts are linked together into a pool and you can expand the pool by adding servers.
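The container-as-VM idea can be sketched as a toy model. This is not VIC code or its API: the class names, the first-fit placement policy, and the server sizes are assumptions made up for illustration, and the only figure taken from the description above is the roughly 25 MB kernel footprint. Each container image gets its own small VM sized to fit, and the pool grows when a server is added.

```python
from dataclasses import dataclass, field

KERNEL_FOOTPRINT_MB = 25  # approximate trimmed Photon Linux footprint cited above


@dataclass
class Server:
    name: str
    mem_mb: int
    used_mb: int = 0

    def free_mb(self) -> int:
        return self.mem_mb - self.used_mb


@dataclass
class ContainerVM:
    image: str
    mem_mb: int  # container memory plus the kernel footprint
    server: str


@dataclass
class ContainerHostPool:
    """Hypothetical stand-in for a pool of virtual container hosts."""
    servers: list = field(default_factory=list)
    vms: list = field(default_factory=list)

    def add_server(self, server: Server) -> None:
        # Expanding the pool is just adding capacity
        self.servers.append(server)

    def run(self, image: str, mem_mb: int) -> ContainerVM:
        # One VM per container image, sized to what the container needs
        needed = mem_mb + KERNEL_FOOTPRINT_MB
        for s in self.servers:  # first-fit placement (an assumed policy)
            if s.free_mb() >= needed:
                s.used_mb += needed
                vm = ContainerVM(image, needed, s.name)
                self.vms.append(vm)
                return vm
        raise RuntimeError("pool is full; add a server to expand it")


pool = ContainerHostPool()
pool.add_server(Server("esx-1", 1024))
first = pool.run("nginx", 512)
try:
    second = pool.run("redis", 512)  # does not fit in what is left on esx-1
except RuntimeError:
    pool.add_server(Server("esx-2", 1024))
    second = pool.run("redis", 512)
print(first.server, second.server)
```

Because every container is its own VM in this model, there is no outer VM to size by guesswork, and whatever a server has not handed out remains visible as free capacity.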

The interesting bit in doing containers this way is that the container VMs and the normal VMs are all exposed equally inside the vSphere tools and vCenter management console. Each container is fully visible, which was not the case when you put a lot of containers inside of a real ESXi VM as companies have been doing. The important thing is that a container is managed like a regular VM, and NSX virtual networking and Virtual SAN virtual storage can underpin the container environment.

“We will be supporting Docker in VIC version 1.0 and then we will start expanding,” Narayan tells The Next Platform. “We will be looking at rkt depending on how the adoption is, and things like Kubernetes and Docker Swarm.”

In addition to opening up the beta for VIC 1.0 at VMworld, VMware added two bits of software to the VIC stack, which were requested by the companies that participated in the white-glove private beta testing phase, according to Narayan. The first is called Admiral, which is a container management portal that snaps into the vCenter console and into the vRealize Automation cloud extensions to vSphere (formerly known as vCloud). The second new feature for VIC is called Harbor, and it is a container registry system that is based on the open source Docker Distribution registry and that has hooks into the access control and auditing features of vSphere. Unlike the core ESXi and vSphere software, VIC and its Admiral and Harbor features are open source and available for free on GitHub.

VIC is not being offered as a commercially supported product yet, which is what VMware’s enterprise customers need. But Mike Adams, senior director of product marketing, tells us that a commercially launched version is “pretty imminent” and will be here “sooner rather than later.” The commercial-grade support for VIC will be on a perpetual software license like the vSphere stack and its extensions are today.

The Photon Platform, which we detailed back in March, has been in production since June, when it was bundled up with the Pivotal Cloud Foundry platform cloud framework for a 1.0 release. VMware is charging $3,500 per application instance per year for a subscription, and is distributing Photon Platform on top of the freebie version of the ESXi hypervisor (which does not have hooks into vSphere and vCenter) for those who want to run it and self-support. Photon Controller is also available on GitHub, but ESXi is not open source and will likely never be. Pricing for the supported versions of the Photon Controller and the Photon OS Linux variant has not been set yet, according to Adams. (To our mind, something is a real product when it has a published price. And VMware, like Oracle, Microsoft, and Red Hat, is very good about giving out pricing on every piece of code it sells and supports.)

Fighting On Two Fronts

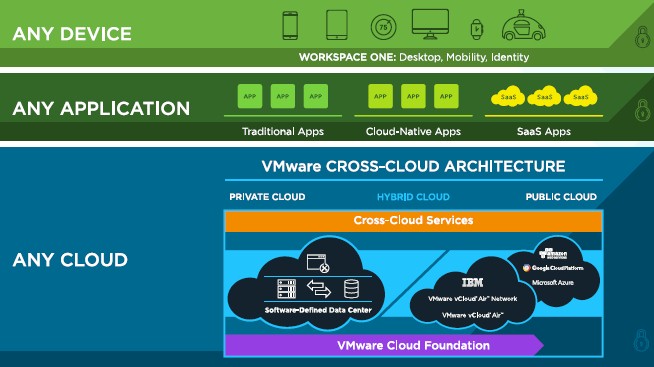

The availability of vSphere Integrated Containers and Photon Platform makes VMware a contender in all kinds of clouds, since the code can be run on private infrastructure as well as on public clouds. VMware CEO Pat Gelsinger talked about VMware’s Cloud Foundation, which is just the mashup of vSphere/ESXi, NSX, and VSAN, and how it gave VMware a cross-cloud architecture.

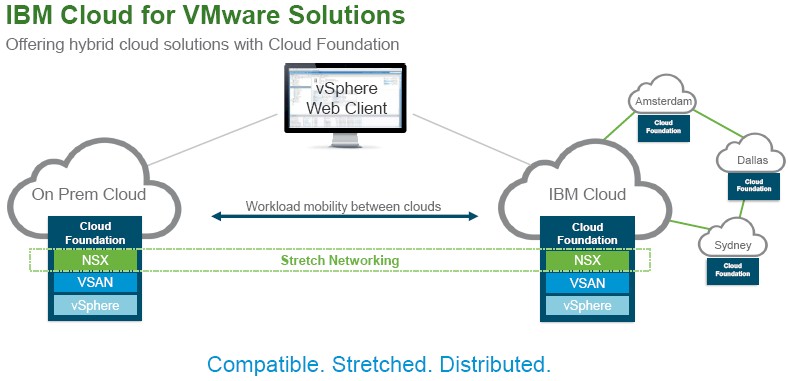

VMware trotted out IBM’s SoftLayer cloud as the first big partner to offer cross-cloud services, and this is possible because IBM has bare metal machines on which it can load up the vSphere, VSAN, and NSX software to create public cloud slices that it can sell to customers. Microsoft, Google, and AWS do not expose their clouds as bare metal and it is not clear how you might run VMware out on top of Cloud Platform (which is based on KVM and containers), Azure (which is based on Hyper-V), and AWS (which is based on Xen). But the presentations sure made us want to believe this could be done.

Interestingly, Rackspace Hosting has bare metal machines and hosted services and it could be a cross-cloud partner with VMware if it chose to; its own cloud is based on Xen, just like the cloudy slices at SoftLayer.

It is not at all clear to us how running VIC or Photon Platform on a public cloud will work unless that cloud provider is willing to wall off a chunk of its infrastructure and only run the VMware stack on it. And even if you could run either VIC or Photon Platform on top of cloudy and virtualized infrastructure at AWS, Azure, or Cloud Platform, one has to imagine there would be quite a bit of overhead running so much infrastructure code.

We have said it before, and we will say it again. Large public clouds almost by necessity compel the creation of absolutely compatible private clouds. So there has to be a private AWS, a private Azure, and a private Google Cloud Platform. The problem that VMware has is that it is, to a certain way of thinking, the default substrate for server virtualization for a lot of enterprises, but it does not even come close to having that role in the public cloud. Its own vCloud Air public cloud never really gained critical mass because even VMware, which will soon have $9 billion in cash if Michael Dell doesn’t spend it all, cannot spend on hardware and datacenters at the levels that AWS, Microsoft, and Google can.

VMware might have done well to buy Rackspace itself, had it not been so distracted with being acquired by Dell.
