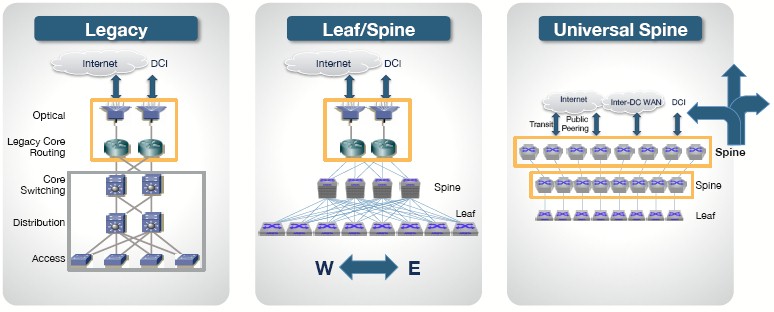

Convergence is a recurring theme at many layers of the datacenter these days. Servers and storage are being mashed up, and in other cases so are servers and networking. Various layers of the network fabric are also starting to converge, and now it is time for switching and routing in the datacenter to come together.

That was the opinion of switch chip maker Broadcom when it launched its “Jericho” line of ASICs this time last year. The Jericho ASICs are part of the “Dune” family of chips that the company acquired when it bought Dune Networks for $178 million back in November 2009. Broadcom told us a year ago that one potential use case for the Jericho ASICs was WAN traversal, which is handled by edge routers that sit above the datacenter network and use MPLS or Layer 3 protocols to link the datacenter gear to other datacenters or the outside world. This is precisely what Arista Networks is doing with its new 7500R “universal spine” device, which combines the functions of a high speed modular switch and a router.

Having routing functions in the switch infrastructure is nothing new, of course. Plenty of switches have some routing capability. But machines like the 7500R from Arista mean that for certain kinds of routing, between datacenter rooms or whole datacenters as well as for hooking into the Internet itself, one kind of box will be able to serve both as a spine switch in the network fabric and as a router higher up in the network. Combining the two, Martin Hull, senior director of product management at Arista, tells The Next Platform, will improve the scale of networks while at the same time reducing complexity.

Blasting The Datacenter Walls With Jericho

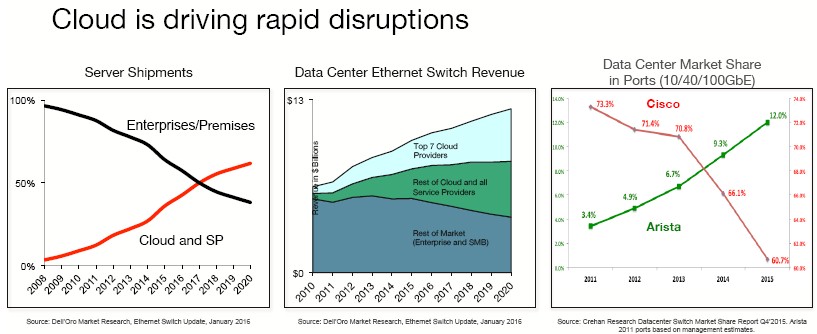

“What we have seen over the past few years is that cloud deployments just continue to grow,” Hull says. “Soon server shipments at cloud builders and other service providers are going to outstrip enterprises, and the largest cloud providers will account for about a third of Ethernet switch spending.”

Other service providers will account for another third of the market by 2020, as you can see above, and enterprises and SMBs will make up the remaining third. Last year, more than 22 million ports of datacenter-class switching capacity running at 10 Gb/sec, 40 Gb/sec, or 100 Gb/sec were sold worldwide, according to data presented by Hull, and these generated around $9 billion in sales.

“We have been a big beneficiary of that growth. But within the datacenter, there have been a number of transformations in system architectures, and the next transformation is going to be around the routing tier. The cloud and service providers want to be able to scale out their networks, but in order to do that they have to keep it simple. They need simple, consistent building blocks, and they want software control.”

These were the founding principles of Arista, of course, which created its own Linux-derived network operating system, called the Extensible Operating System or EOS, although the company has stopped short of open sourcing this code, as Hewlett Packard Enterprise, Dell, Big Switch Networks, Cumulus Linux, and Pica8 have done. Arista may yet be compelled by market forces to open up its stack, particularly if it wants to sell to hyperscalers and cloud builders.

The other big change is that the merchant silicon for switches has become more capable, and a lot of the routing functions that used to be only available on custom ASICs are now in chips like Jericho. Just as is the case with the X86 processor, which is a compute engine that can do many different kinds of jobs, some hyperscalers are going to want a flexible switch ASIC that can also handle routing.

The routing functions in the Jericho chips are not embedded in the “Trident-II” or “Tomahawk” switch chips (the StrataXGS line in Broadcom’s marketing catalog) that Broadcom created for leaf and spine switches. The Tomahawks, for their part, were deployed last fall in a line of switches that adhere to the 25G Ethernet standards pushed by the hyperscalers to reduce their costs while boosting bandwidth and port counts. Because the Jericho chips (which are in the StrataDNX family, as the Dune devices are known externally) are more expensive to make and offer more capability, including routing functions, scalable deep packet buffers, and large tables, they cost more, and so do the switches that employ them. (We will get to that in a moment.)

There are different Broadcom horses for different datacenter courses. The Trident-II delivers 1.28 Tb/sec of switching bandwidth, and the follow-on Tomahawk boosts that by 2.5X to 3.2 Tb/sec; both have a few megabytes of buffer for holding data when it bursts in faster than the ASIC can forward it. The Jericho chip is quite a bit slower, at 720 Gb/sec of aggregate switching bandwidth, but up to four of them can be linked together without a chip fabric to create a four-socket virtual ASIC with 2.88 Tb/sec of bandwidth. For larger configurations, switch makers can create a spine fabric with 144 Jericho chips and 103.7 Tb/sec of aggregate bandwidth that could, in theory, support 6,000 ports running at 100 Gb/sec in a single-tier fabric.
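The bandwidth figures above are straightforward multiples of the per-chip numbers. The back-of-the-envelope Python sketch below reproduces them; it assumes bandwidth simply adds linearly across chips and ignores any fabric overhead, so treat it as an illustration of the arithmetic rather than how Broadcom rates its configurations. The function name `aggregate_tbps` is our own.

```python
# Per-chip aggregate switching bandwidth quoted in the text, in Gb/sec.
TRIDENT2_GBPS = 1_280
TOMAHAWK_GBPS = 3_200
JERICHO_GBPS = 720


def aggregate_tbps(chips: int, per_chip_gbps: int = JERICHO_GBPS) -> float:
    """Total bandwidth, in Tb/sec, of `chips` identical ASICs.

    A simple linear multiply (an assumption); real fabrics lose some
    capacity to overhead.
    """
    return chips * per_chip_gbps / 1_000


print(f"Tomahawk vs Trident-II: {TOMAHAWK_GBPS / TRIDENT2_GBPS:.1f}X")
print(f"4 Jericho chips:   {aggregate_tbps(4):.2f} Tb/sec")
print(f"144 Jericho chips: {aggregate_tbps(144):.2f} Tb/sec")
```

The 144-chip result comes out to 103.68 Tb/sec, which rounds to the 103.7 Tb/sec figure cited above.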

While Arista would love to have customers use the new Jericho-based 7500R switches both in the top layer of the stack, where routing is done, and in the middle layer, where spine aggregation is done, the odds are that most datacenter customers will use some 7300-class modular spine switches based on the Tomahawk chips (which we detailed last fall) in their fabrics. Others will build leaf/spine networks using fixed port switches, also based on Tomahawks, and feed up into a converged switch/router layer.

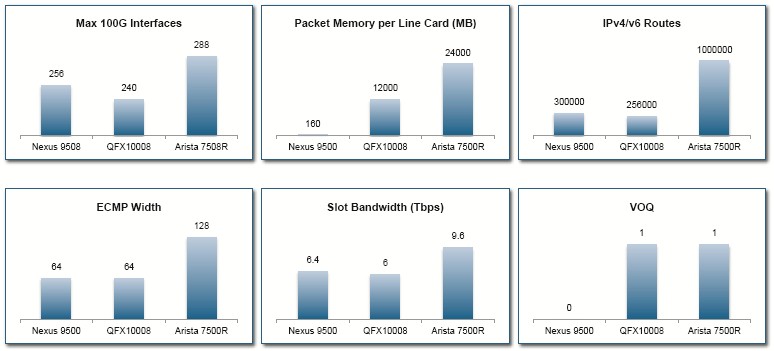

The Internet has about 600,000 routes on it right now, with the vast majority of them still being IPv4 rather than IPv6. And with support for storing over 1 million routes in the high-end 7512R modular switch/router, Arista can house the routing tables for the entire Internet on its devices and have room to spare. Hull says that the Nexus 9500 switch from Cisco Systems can do 300,000 routes while the QFX10008 from Juniper Networks can do 256,000 – or about half of the Internet. Here is how Arista stacks up the new 7500R against those two machines:
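To see what those capacities mean against a roughly 600,000-route Internet table, here is a small Python sketch. It treats every route as a single table entry and ignores differences between IPv4 and IPv6 entries, which is a simplifying assumption; the helper name `table_coverage` is hypothetical.

```python
# Approximate size of the Internet routing table, per the text.
INTERNET_ROUTES = 600_000

# Maximum routes per platform, as quoted by Hull.
ROUTE_CAPACITY = {
    "Arista 7512R": 1_000_000,
    "Cisco Nexus 9500": 300_000,
    "Juniper QFX10008": 256_000,
}


def table_coverage(capacity: int, table_size: int = INTERNET_ROUTES) -> float:
    """Fraction of the full table that fits, capped at 100 percent.

    Assumes one table entry per route (a simplification).
    """
    return min(capacity / table_size, 1.0)


for name, cap in ROUTE_CAPACITY.items():
    spare = cap - INTERNET_ROUTES  # negative means the table does not fit
    print(f"{name:18} {table_coverage(cap):6.1%} of table, headroom {spare:+,} routes")
```

Run as written, the Nexus 9500 lands at 50 percent and the QFX10008 at about 43 percent, while the 7512R holds the whole table with roughly 400,000 routes to spare.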

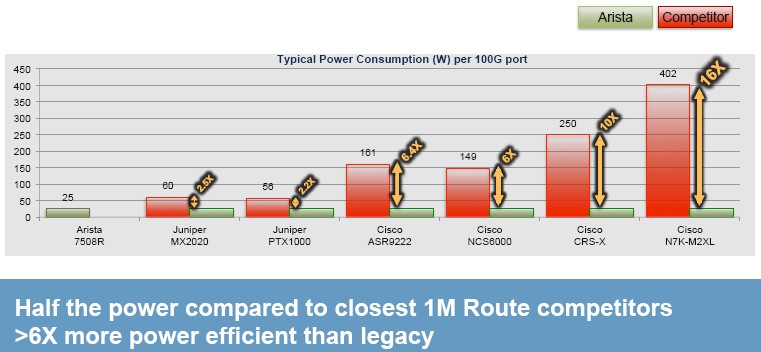

The maximum number of 100 Gb/sec interfaces per system is not that different, as you can see, but the packet memory per line card is way different. On a per-port basis, at 500 MB per 100 Gb/sec port, the 7500R has packet buffers that are 100X deeper than those in fixed port 100 Gb/sec switches. The bandwidth per line card is also higher on the 7500R, and as the following chart illustrates, the power consumption per port is a lot lower:
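One way to think about buffer depth is in time: how many milliseconds of line-rate traffic a port can soak up before it starts dropping packets. The Python sketch below converts bytes to time, assuming decimal megabytes and a buffer dedicated entirely to one port that is not draining at all, which is a deliberate simplification. The 5 MB figure is not stated in the text; it is simply what the 100X ratio implies for a fixed port switch.

```python
def buffer_time_ms(buffer_bytes: float, line_rate_gbps: float) -> float:
    """Milliseconds of line-rate traffic a buffer can hold.

    Equivalently, how long a full buffer takes to drain at that rate.
    """
    bits = buffer_bytes * 8
    return bits / (line_rate_gbps * 1e9) * 1e3


deep = buffer_time_ms(500e6, 100)     # 500 MB per 100 Gb/sec port (7500R)
shallow = buffer_time_ms(5e6, 100)    # 5 MB, implied by the 100X ratio
print(f"deep: {deep:.1f} ms, shallow: {shallow:.1f} ms")
# deep: 40.0 ms, shallow: 0.4 ms
```

Forty milliseconds of absorbable burst versus under half a millisecond is the practical difference for incast-heavy or WAN-facing traffic.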

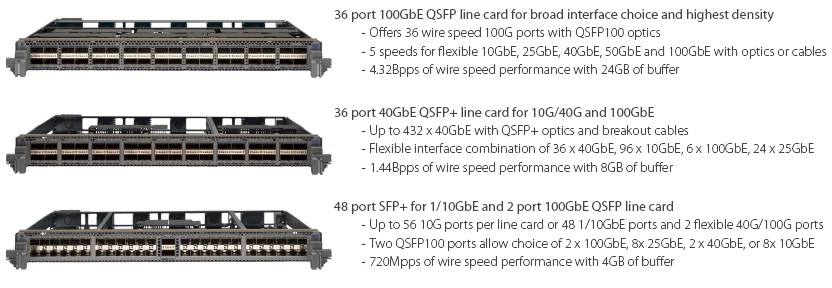

The largest configuration of the new 7500R modular switches has 115 Tb/sec of aggregate switching bandwidth and can process up to 51 billion packets per second while forwarding data. With 64-byte packets, the 7500R can do a port-to-port hop in 3.5 microseconds (which is nothing like InfiniBand, mind you, which comes in at somewhere between 85 and 100 nanoseconds these days). That top-end 7512R switch comes in an 18U chassis and can be equipped with a dozen line cards. With the 7500R-36CQ card, which has six Jericho chips on it and supports 36 QSFP ports running at 100 Gb/sec, the chassis can have 432 ports running at full speed or, with four-way splitters (which will probably be common in hyperscale and cloud datacenters soon), up to 1,728 ports. It has 288 GB of dynamic deep buffer memory and 9.6 Tb/sec of system capacity per slot, which Arista says is enough to support future 200 Gb/sec and 400 Gb/sec Ethernet when such ASICs become available. (See our take on the recent Ethernet roadmap for more on when to expect those speed bumps.)
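The port counts follow from simple multiplication, and the 115 Tb/sec headline appears to match twelve slots at 9.6 Tb/sec apiece. That last connection is our reading of the numbers, not a derivation Arista has published. The Python sketch below also puts the latency gap with InfiniBand into a ratio, using only the figures in the paragraph above.

```python
SLOTS = 12                    # 7512R line card slots
PORTS_PER_CARD = 36           # 7500R-36CQ: 36 x 100 Gb/sec QSFP ports
PER_SLOT_CAPACITY_TBPS = 9.6  # system capacity per slot, per Arista

native_100g = SLOTS * PORTS_PER_CARD
broken_out_25g = native_100g * 4  # four-way splitters
system_tbps = SLOTS * PER_SLOT_CAPACITY_TBPS  # our assumption for the 115 Tb/sec figure

print(native_100g, broken_out_25g, round(system_tbps, 1))
# 432 1728 115.2

# Latency gap versus InfiniBand, using the figures quoted in the text.
ETH_HOP_NS = 3_500
for ib_ns in (85, 100):
    print(f"{ETH_HOP_NS / ib_ns:.0f}X slower than a {ib_ns} ns InfiniBand hop")
```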

Hull says that the largest 7500R system, which has the best per-port price, will cost about $3,000 per 100 Gb/sec port, or about $1.3 million in total, which works out to $750 per 25 Gb/sec downlink to the servers when it is used as a spine switch for the leaves. We presume that the 7500R is considerably less expensive than a router and that, on average, the combined cost of separate spine switches and routers will be higher for such customers than buying one type of hybrid switch/router and using it for both jobs. (Simplification is one thing, but hyperscalers and cloud builders want to save money, too.)
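As a sanity check on those prices, the Python sketch below rebuilds the system total from the per-port figure and then spreads it across the 25 Gb/sec downlinks. It assumes the $1.3 million figure refers to a fully loaded 432-port 7512R, which is our reading of the text, and uses list-style round numbers only.

```python
PRICE_PER_100G_PORT = 3_000     # approximate, per Hull
PORTS_100G = 432                # fully loaded 12-slot 7512R, 36 ports per card
DOWNLINKS_25G = PORTS_100G * 4  # four-way splitters -> 1,728 server downlinks

system_price = PRICE_PER_100G_PORT * PORTS_100G
price_per_downlink = system_price / DOWNLINKS_25G

print(f"${system_price:,} per system, ${price_per_downlink:,.0f} per 25 Gb/sec downlink")
# $1,296,000 per system, $750 per 25 Gb/sec downlink
```

The two figures Hull quotes are consistent with each other: $1,296,000 rounds to $1.3 million, and dividing it across 1,728 downlinks gives exactly $750 apiece.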

Like the initial 7500 modular switch, which came out in 2010 and offered 10 Gb/sec ports on the spine and 10 Gb/sec down to servers, and the 7500E, which came out three years later with 40 Gb/sec ports on the spine and 10 Gb/sec down to servers using four-way cable splitters, the 7500R stays on a Moore’s Law curve. It delivers 3.8X the capacity, with 100 Gb/sec on the spine, 25 Gb/sec down to servers (with four-way splitters), and 50 Gb/sec down to storage (with two-way splitters). These are precisely the bandwidth pipes that hyperscalers and cloud builders need, Hull says, and the 7500R delivers them without a Tomahawk chip, you will note. (Ah, but will all of those open source, Linux-based network operating systems be ported to the Jericho chips? There’s the rub for many of the hyperscalers and cloud builders, but not for all. Microsoft, for one, just needs support for SAI and SONiC.)
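The server and storage speeds across the three generations come from splitting one spine port into several lanes with a breakout cable. The Python sketch below models that as plain integer division; `lane_speed` is a hypothetical helper, and treating the original 7500 as a one-way "split" is our simplification, since it ran 10 Gb/sec straight through.

```python
def lane_speed(port_gbps: int, ways: int) -> int:
    """Per-lane speed when one port is split `ways` ways with a breakout cable."""
    if port_gbps % ways:
        raise ValueError("port speed must divide evenly among the lanes")
    return port_gbps // ways


BREAKOUTS = [
    # (generation, spine port Gb/sec, split ways, attached device)
    ("7500", 10, 1, "servers"),
    ("7500E", 40, 4, "servers"),
    ("7500R", 100, 4, "servers"),
    ("7500R", 100, 2, "storage"),
]

for gen, port, ways, target in BREAKOUTS:
    print(f"{gen:6} {port:>3} Gb/sec / {ways} = {lane_speed(port, ways)} Gb/sec to {target}")
```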

For those who still want to use a mix of old and new speeds, the 7500R chassis has a line card that provides 36 ports running at 40 Gb/sec (which can be split using breakout cables) plus six 100 Gb/sec uplinks. Another card has 48 ports running at 10 Gb/sec plus two 100 Gb/sec uplinks. Moreover, not everyone will need a dozen line cards in their systems to build out their networks, so as with the prior generations of Arista 7500-class modular switches, the 7504 chassis is a 7U machine with four slots and the 7508 chassis is an 11U machine with eight slots.
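To compare the three line cards and the three chassis sizes on one scale, the Python sketch below sums front-panel bandwidth per card and multiplies out the 100 Gb/sec port count per chassis with the 36-port card. The card labels for the two mixed-speed cards are our own, since the text does not name them, and the totals are raw port sums, not Arista-rated capacities.

```python
def card_gbps(groups: list[tuple[int, int]]) -> int:
    """Sum front-panel bandwidth over (port_count, gbps_per_port) groups."""
    return sum(count * speed for count, speed in groups)


LINE_CARDS = {
    "7500R-36CQ (36 x 100G)": [(36, 100)],
    "36 x 40G + 6 x 100G": [(36, 40), (6, 100)],   # label is ours
    "48 x 10G + 2 x 100G": [(48, 10), (2, 100)],   # label is ours
}
CHASSIS_SLOTS = {"7504": 4, "7508": 8, "7512R": 12}

for name, groups in LINE_CARDS.items():
    print(f"{name:24} {card_gbps(groups) / 1_000:.2f} Tb/sec front panel")

for chassis, slots in CHASSIS_SLOTS.items():
    print(f"{chassis:6} up to {slots * 36} ports at 100 Gb/sec")
```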

The four-slot and eight-slot 7500R modular enclosures are available now, with the twelve-slot machine coming out in the third quarter of this year. The three line cards will ship in the second quarter, which starts on Friday.

A word about pricing. On the fixed port Tomahawk switches, a 100 Gb/sec port on the 7060CX-32 1U entry machine has a list price of around $1,000 (which works out to $250 per 25 Gb/sec port if you use splitters), and the larger 2U 7260-CX switch costs $2,000 per port (or $500 per 25 Gb/sec chunk). Clearly, there is a wide range of functionality and price here, and at $3,000 per 100 Gb/sec port, the 7500R modular switch is not the cheapest option. But for applications that require deep packet buffers and large tables, and that might otherwise suffer performance hiccups, it might be worth spending the money on the big bad switches at the spine layer. Arista is no doubt counting on that.
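Putting the three price points side by side on a per-25 Gb/sec basis makes the tiers easier to compare. The Python sketch below assumes every 100 Gb/sec port is split four ways, as the text does, and uses the approximate prices quoted above; `per_lane_price` is a hypothetical helper.

```python
# Approximate price per 100 Gb/sec port, from the text.
PRICE_PER_100G = {
    "7060CX-32 (1U fixed, Tomahawk)": 1_000,
    "7260-CX (2U fixed, Tomahawk)": 2_000,
    "7500R (modular, Jericho)": 3_000,
}


def per_lane_price(price_per_port: float, ways: int = 4) -> float:
    """Price per lane when a 100 Gb/sec port is split `ways` ways."""
    return price_per_port / ways


for name, price in PRICE_PER_100G.items():
    print(f"{name:32} ${price:>5,} per 100G   ${per_lane_price(price):>5,.0f} per 25G")
```

The spread is 3X from the cheapest fixed port box to the modular switch/router, which is the premium customers pay for deep buffers, large tables, and routing.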
