All flash storage arrays can be sprinkled around the datacenter to provide zippy block storage for database and virtual server applications. And while the current crop of all flash arrays is useful and affordable for such applications, it is not scalable enough to take on some of the big file and object workloads that are dominating the storage landscape in the glass house.

Pure Storage has set out to change this with a new line of all flash arrays, called FlashBlade, that are aimed at the petabyte-scale jobs that are at the heart of the modern platforms being built today. The FlashBlades have plenty of headroom and will be scaling out further to allow all but the largest hyperscalers and cloud builders to have massive amounts of storage on high performance flash.

People are still arguing about what roles disk and flash will play in the storage tiers, and the answers keep changing as the prices for disk and flash capacity reach parity once wear leveling, compression, de-duplication, and other techniques are added to flash. We are of the opinion that enterprises will be willing to spend on relatively expensive all flash arrays for a growing share of the mission critical data they keep in house, and will be equally willing to dump relatively cold and archived data out to cloud storage providers such as Amazon Web Services, Microsoft Azure, and Google Cloud Platform. Those providers, in turn, will all be trying to figure out how to make disk drives do a better job of supporting massive amounts of data that basically comes into their datacenters like sediment and settles down to become rock over the ages.

There is a reason why Google wants to reinvent the disk drive, and that is because the public clouds will get stuck housing enormous amounts of data on the cheap. With the PC business shrinking, it perhaps will not be long before hyperscalers and their cloud building peers account for the majority of disk drives, and enterprises will be more than happy to not have to deal with disks ever again. As we pointed out in covering Google’s desire to reengineer disks for its purposes, enterprises will need petabytes of hot and warm data, not exabytes, and they will be able to justify all flash in a way that the hyperscalers and cloud builders cannot for many years to come.

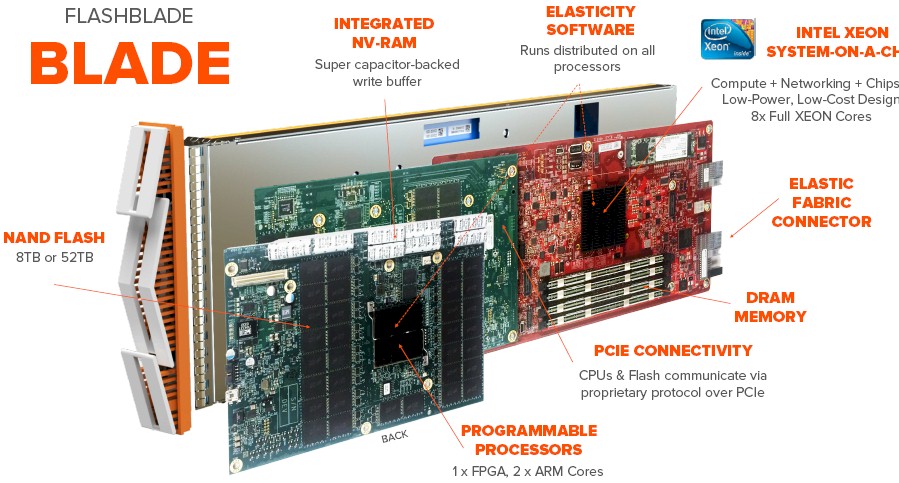

But Pure Storage, which probably should have called itself Pure Non-Volatile Storage if it wanted to be more accurate, wants no part of a disk drive except some of the bits that get pulled out of the crusher as a kind of trophy. So its engineers have been hard at work designing their own flash cards with Xeon, ARM, and FPGA computing to create an all flash array that can scale to the levels necessary in the enterprise for the gargantuan unstructured file and object storage that utterly dwarfs the structured data that can be housed on the block-style FlashArray line that it already sells and that is analogous to many other products out there in the market.

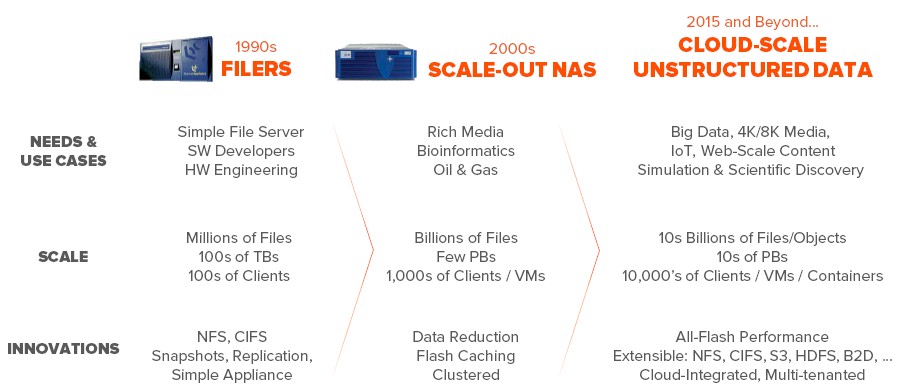

The way Pure Storage paints the history of data, each era of computing required its own technologies to meet the scale needs of the time. And, not ironically, in every case, as a new technology came into the datacenter, it was quickly adopted and its limits pushed to the breaking point.

Back in the 1990s, when EMC brought its revolutionary Symmetrix RAID disk arrays from mainframes to Unix, Windows, and then Linux systems, and when the commercial Internet was just getting going and NetApp was the innovator for network attached storage, disk filers had to scale to millions of files and hundreds of terabytes. A decade later, NAS started to dominate the enterprise datacenter and quite a few HPC centers as applications needed affordable storage that could scale to billions of files and several petabytes of capacity; again, here NetApp tended to dominate, but others got their share, too. In the current era, flash has become viable and cost competitive with disk, and customers are not willing to accept poor and, equally importantly, inconsistent performance in exchange for cheap capacity. They want to be able to scale up to tens of petabytes of capacity and tens of billions of files and objects, hosting tens of thousands of containers or virtual machines or client programs.

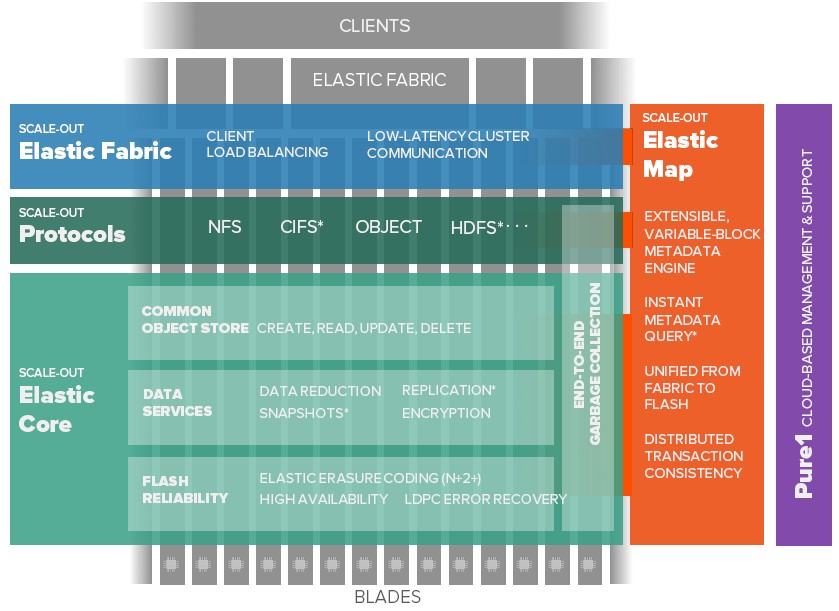

The FlashArray machines, which we profiled last June when the line was extended, use a mix of DRAM, NV-RAM, and flash modules to create a line of machines that scale up to 400 TB of usable capacity in an 11U setup and deliver 300,000 I/O operations per second with an average latency of under 1 millisecond. Pure Storage did not just take these machines and stretch them further. When it comes to block storage, says Jason Nadeau, director of product and vertical marketing at Pure Storage, customers do not want a fault domain any larger than 500 TB, except for a few that are asking for 1 PB. Unstructured data with file and object access is a different thing entirely, and that caused Pure Storage to create different NAND flash cards of its own, with lots of compute to accelerate parts of the workload and to run the new Elasticity fabric software that will eventually be able to span ten enclosures and 16 PB of usable capacity.

This is the largest all flash array in a single domain that we have heard of that does file and object storage. (If you know better, let us know.)

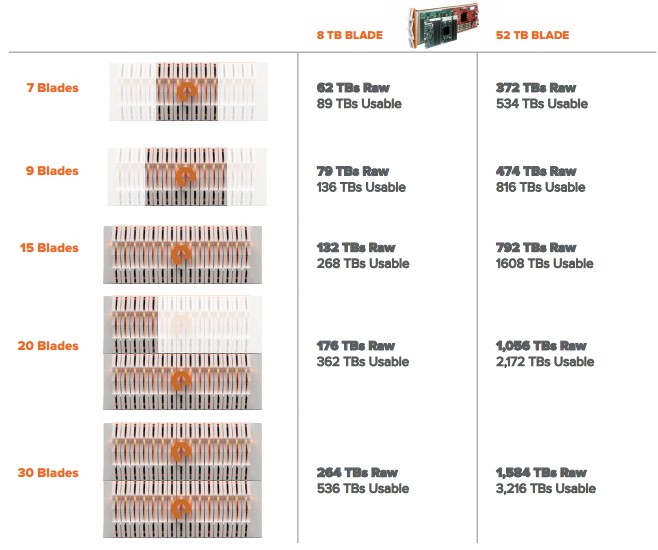

But just as important as scale, the FlashBlade systems are designed to start small, at under 100 TB of usable capacity, and then scale up by orders of magnitude. Many high-end systems do not scale down, even if they do scale up well. Companies want to invest in one thing and build it out over three or four years, maybe even longer for storage, which tends to last longer than servers but maybe not as long as network switches. And equally importantly, says Nadeau, the price of the FlashBlades scales down as well as up, staying relatively consistent at under $1 per usable gigabyte as companies add storage modules to the base configuration.

Let’s Take A Look Under The Hood

The FlashBlade storage modules are essentially baby hierarchical DRAM, NV-RAM, and flash storage arrays in their own right, with scads of different compute elements to do various kinds of processing on data and to make the flash and networks linking it behave themselves.

Pure Storage is not revealing what NAND flash it is using, but Nadeau says it is planar MLC NAND that comes from multiple vendors. One of the key things about the architecture, just as with EMC’s recently launched DSSD D5 all flash arrays, is that it does not use SSDs but rather homegrown flash cards that have much better performance and higher capacity than SSDs can deliver. While EMC focused on low latency, high throughput jobs with the DSSD arrays, the FlashBlades are aimed at high capacity scalability with decent performance, at a price that bests disk arrays without any question.

“At the densities that we are talking about for flash,” explains Sandeep Singh, director of product marketing at the company, “we found that by mapping to discrete NAND flash pages versus to virtualized block ranges, we can get much more efficient and much more concurrent communication as well as being able to have a system-level flash translation layer that can be much more intelligent and optimized to use that overall NAND flash capacity and I/O.”
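Singh’s point about mapping to discrete NAND pages rather than to virtualized block ranges is easier to see with a toy example. The sketch below is a minimal page-level flash translation layer in Python; the class name, the tiny block geometry, and the log-structured allocation are illustrative assumptions, not Pure Storage’s design, and garbage collection is deliberately left out.

```python
from dataclasses import dataclass, field

# Hypothetical geometry for illustration only; not Pure Storage's real NAND layout.
PAGES_PER_BLOCK = 4


@dataclass
class PageMappedFTL:
    """Toy page-level FTL: each logical page maps straight to a physical
    (block, page) location, and every write goes out of place."""
    num_blocks: int
    mapping: dict = field(default_factory=dict)  # logical page -> (block, page)
    invalid: set = field(default_factory=set)    # stale physical pages awaiting erase
    next_free: int = 0                           # next free physical page (append-only log)

    def write(self, logical_page: int) -> tuple:
        if self.next_free >= self.num_blocks * PAGES_PER_BLOCK:
            raise RuntimeError("no free pages left; garbage collection is not modeled")
        # NAND pages cannot be overwritten in place, so the old copy is marked stale.
        old = self.mapping.get(logical_page)
        if old is not None:
            self.invalid.add(old)
        location = divmod(self.next_free, PAGES_PER_BLOCK)  # (block, page)
        self.next_free += 1
        self.mapping[logical_page] = location
        return location

    def read(self, logical_page: int) -> tuple:
        return self.mapping[logical_page]
```

Rewriting logical page 10 in this sketch lands it on a fresh physical page and leaves the old one flagged for erasure. Because the translation layer sees every individual page, it can spread writes across many dies at once, which is the kind of concurrency and system-level placement Singh describes.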

There are two different capacities of modules, one with 8 TB of usable space and another with a much fatter 52 TB of space. On both types, different compute elements do different things and the performance of that compute scales to span the larger storage.

On the 8 TB module, the compute elements include a six-core Xeon D system-on-chip from Intel, plus an Altera FPGA and a two-core ARM processor. The 52 TB module has an eight-core Xeon D processor, plus three Altera FPGAs and a six-core ARM processor. The 8 TB module has 64 GB of DDR4 main memory linked to the Xeon D processor, and the 52 TB module has 128 GB. For every 8 TB of flash capacity, Pure Storage is putting on 4 GB of NV-RAM to act as a high-speed, write buffer cache, which has supercapacitors to back it up in the case of power failure.
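Taking the 4 GB per 8 TB ratio at face value, the NV-RAM on each module type can be worked out. This Python sketch assumes the ratio scales linearly to the 52 TB module, which the article implies but does not state as a per-module figure; the dictionary layout is just a convenient way to hold the quoted specs.

```python
# From the article: 4 GB of NV-RAM for every 8 TB of flash.
NVRAM_GB_PER_8TB = 4

# name: (Xeon D cores, Altera FPGAs, ARM cores, DDR4 GB, usable flash TB)
MODULES = {
    "8TB": (6, 1, 2, 64, 8),
    "52TB": (8, 3, 6, 128, 52),
}


def nvram_gb(flash_tb: float) -> float:
    """NV-RAM write buffer implied by the 4 GB per 8 TB ratio (assumed linear)."""
    return flash_tb / 8 * NVRAM_GB_PER_8TB


if __name__ == "__main__":
    for name, (xeon, fpgas, arm, dram_gb, flash_tb) in MODULES.items():
        print(f"{name}: {nvram_gb(flash_tb):.0f} GB NV-RAM, "
              f"{dram_gb / flash_tb:.1f} GB DRAM per TB of flash")
```

On those assumptions the 52 TB module would carry 26 GB of NV-RAM, while its DRAM per terabyte of flash is far lower than on the 8 TB module, since main memory only doubles while flash grows 6.5X.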

The FPGAs do the scheduling of data movements as well as encryption of data and error correction as data comes into the NV-RAM and heads towards the flash through the memory hierarchy in each blade. The ARM cores do the overall scheduling of traffic on the flash and manage the NV-RAM. The Xeon D processors are used to run the Elasticity fabric management software that keeps the metadata for the whole shebang and makes a bunch of these modules, and arrays of these modules, look like one logical storage unit. This metadata is sharded and distributed across multiple modules and arrays.
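The article says only that Elasticity shards its metadata across modules, not how. As an assumption for illustration, here is the simplest possible scheme in Python: hash each metadata key and take the result modulo the number of modules. The `shard_for` function and `ShardedMetadata` class are hypothetical names, not part of any Pure Storage software.

```python
import hashlib


def shard_for(key: str, num_modules: int) -> int:
    """Pick the module that owns a metadata key (simple hash-mod-N placement)."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_modules


class ShardedMetadata:
    """Toy metadata store with one dict per storage module."""

    def __init__(self, num_modules: int):
        self.shards = [dict() for _ in range(num_modules)]

    def put(self, key: str, value) -> None:
        self.shards[shard_for(key, len(self.shards))][key] = value

    def get(self, key: str):
        return self.shards[shard_for(key, len(self.shards))].get(key)


if __name__ == "__main__":
    md = ShardedMetadata(num_modules=15)  # one shard per blade in a full enclosure
    md.put("/projects/chip/rtl.v", {"size_bytes": 4096})
    print(md.get("/projects/chip/rtl.v"))
```

Hash-mod-N keeps lookups to a single hop, but it moves most keys whenever the module count changes; a system that grows from one enclosure to ten would more plausibly use consistent hashing or an explicit placement table.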

To link this all together, the flash and processing elements communicate with each other using a proprietary interconnect. This protocol is optimized for concurrent communication and uses PCI-Express as a transport, and our guess is that it looks a bit like the Coherent Accelerator Processor Interface (CAPI) that is part of IBM’s Power8 chips. The individual flash modules are linked to each other over a midplane running 10 Gb/sec Ethernet internally; this Ethernet has also been optimized for concurrent communication and does not make use of the RDMA over Converged Ethernet (RoCE) protocol. Singh says the modified Ethernet offers data transfers between elements in under 100 nanoseconds, which is almost as low as a port hop on 100 Gb/sec InfiniBand these days. The switch inside the array has a total of 64 ports, with eight ports exposed externally to servers and to other arrays for clustering.

A single 4U enclosure with fifteen of the 8 TB units has a usable capacity of 268 TB, assuming around 2:1 compression on data. The FlashBlade uses the same compression algorithms employed in the block-style FlashArrays. For the file and object workloads that Pure Storage expects on the FlashBlades, de-duplication does not help much, but the company will be adding it to the product line later, perhaps boosting data reduction to 3:1 or so. With the 52 TB flash modules, Pure Storage can get 1.6 PB of compressed, usable data into that 4U enclosure. The system can deliver 15 GB/sec of bandwidth and handle 1 million NFS operations per second per enclosure, according to early tests.
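The per-enclosure capacity figures follow from simple multiplication. This Python sketch assumes fifteen blades per enclosure for both module sizes (the article states fifteen only for the 8 TB case) and treats the data reduction ratio as a plain multiplier on usable flash.

```python
BLADES_PER_ENCLOSURE = 15
COMPRESSION_RATIO = 2.0  # "around 2:1", per the article


def effective_tb(module_tb: float,
                 blades: int = BLADES_PER_ENCLOSURE,
                 ratio: float = COMPRESSION_RATIO) -> float:
    """Effective capacity of one enclosure after data reduction."""
    return module_tb * blades * ratio


def implied_ratio(stated_tb: float, module_tb: float,
                  blades: int = BLADES_PER_ENCLOSURE) -> float:
    """Data reduction ratio implied by a stated effective capacity."""
    return stated_tb / (module_tb * blades)


if __name__ == "__main__":
    print(effective_tb(52))                # 15 x 52 TB at 2:1
    print(effective_tb(8))                 # 15 x 8 TB at 2:1
    print(round(implied_ratio(268, 8), 2))  # ratio behind the quoted 268 TB
    print(effective_tb(52, ratio=3.0))     # hypothetical 3:1 once de-dup arrives
```

Fifteen 52 TB blades at 2:1 give 1,560 TB, which rounds to the quoted 1.6 PB. Fifteen 8 TB blades at a flat 2:1 give 240 TB, so the quoted 268 TB implies a ratio of roughly 2.2:1 on the 120 TB of flash.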

To scale the FlashBlade system up, companies can use 40 Gb/sec Ethernet to link two enclosures together using the existing fabric module. In the future, Pure Storage will roll out a new fabric module that will allow up to ten enclosures to be linked into a single storage domain, reaching that 16 PB upper capacity limit.
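A rough sketch of how a multi-enclosure domain would add up, written in Python. It assumes capacity, bandwidth, and NFS operations all scale linearly with enclosure count, which the article does not claim; the 1,560 TB per enclosure is fifteen 52 TB blades at 2:1, and the `cluster` function is a hypothetical helper.

```python
ENCLOSURE_TB = 1_560            # 15 x 52 TB blades at ~2:1, roughly 1.6 PB
ENCLOSURE_GB_PER_SEC = 15       # early test figure per enclosure
ENCLOSURE_NFS_OPS = 1_000_000   # early test figure per enclosure


def cluster(enclosures: int, max_enclosures: int = 10) -> dict:
    """Aggregate figures for a storage domain, assuming perfectly linear scaling.

    max_enclosures is 2 with the existing fabric module and 10 with the
    future one, per the article.
    """
    if not 1 <= enclosures <= max_enclosures:
        raise ValueError(f"enclosures must be between 1 and {max_enclosures}")
    return {
        "effective_tb": enclosures * ENCLOSURE_TB,
        "gb_per_sec": enclosures * ENCLOSURE_GB_PER_SEC,
        "nfs_ops": enclosures * ENCLOSURE_NFS_OPS,
    }


if __name__ == "__main__":
    print(cluster(2, max_enclosures=2))  # what today's fabric module allows
    print(cluster(10))                   # the planned ten-enclosure domain
```

Ten enclosures at 1,560 TB each come to 15,600 TB, which is the "16 PB" upper limit once rounded; the bandwidth and operation counts are only upper bounds, since real clusters rarely scale perfectly.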

Given the claim that the FlashBlade costs under $1 per GB, a single 4U system loaded up to the gills should cost under $1.6 million. How does that compare to other disk and flash alternatives? Singh says that a disk array with 15K RPM SAS drives will run somewhere between $1.50 and $2 per GB, but after you deal with performance issues, it is more like $3 to $4 per GB, and with software add-ons that are not bundled in, the price is more like $4 to $5 per GB. Archival arrays might be as low as $1 per GB, but they don’t have performance, and high performance arrays from NetApp and EMC that do block storage are in the $1.50 to $2 per GB range.
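Singh’s per-gigabyte figures translate into enclosure-sized dollar amounts as follows. This Python sketch simply multiplies the quoted price ranges by 1.6 PB, taken as 1.6 million GB in decimal units; it ignores discounts, support, power, and floor space, and treats $1/GB as the FlashBlade ceiling cited in the article.

```python
CAPACITY_GB = 1_600_000  # 1.6 PB in one fully loaded 4U enclosure (decimal units)

# (low, high) US dollars per GB, as quoted by Singh; FlashBlade uses the $1 ceiling.
PRICE_PER_GB = {
    "FlashBlade (ceiling)": (1.00, 1.00),
    "15K SAS disk array, raw": (1.50, 2.00),
    "15K SAS disk array, after performance fixes": (3.00, 4.00),
    "15K SAS disk array, with software add-ons": (4.00, 5.00),
    "High performance NetApp/EMC block arrays": (1.50, 2.00),
}


def enclosure_costs(capacity_gb: int = CAPACITY_GB) -> dict:
    """Dollar range for storing capacity_gb at each quoted price range."""
    return {name: (capacity_gb * low, capacity_gb * high)
            for name, (low, high) in PRICE_PER_GB.items()}


if __name__ == "__main__":
    for name, (low, high) in enclosure_costs().items():
        print(f"{name}: ${low / 1e6:.1f}M to ${high / 1e6:.1f}M")
```

At the same 1.6 PB, the fully loaded 15K disk option lands between $6.4 million and $8 million, against a FlashBlade ceiling of $1.6 million. That gap is what Singh is pointing at, provided the $1/GB figure holds at the compression ratio a given workload actually achieves.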

Raw cost is not the only measure of money in the enterprise. Time is as well. In one use case, a chip maker’s EDA stack was accelerated by about 20 percent by moving from NetApp and Isilon arrays to FlashBlades, which equates to removing one year from the five-year chip development, validation, and testing product cycle.

The FlashBlade arrays will be in directed availability in the second half of this year, meaning you have to sign up and be approved for early units. General availability has not been announced, but the goal is to have it shipping by the end of the year.
