Offloading parts of the network stack from processors to specialized circuits on network adapter cards is not a new idea. The InfiniBand cards used by HPC centers have been doing this to speed up message passing between nodes for years. Similarly, adapter cards built with FPGAs have been created to process data as it streams in from exchanges, reducing the overall compute latency of trading and risk analysis systems at financial institutions. And now, it is time for virtual switching and routing to get accelerated at the adapter.

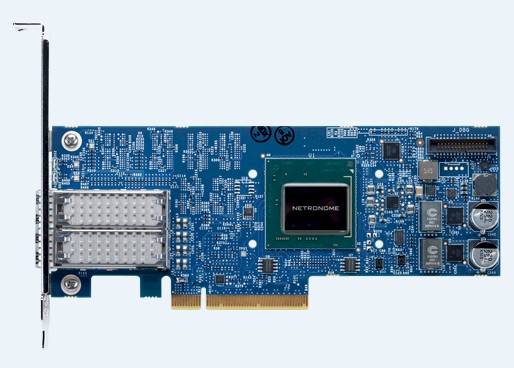

It may seem strange to offload a workload that only recently moved onto server infrastructure in the first place. But the advent of the Agilio network adapter cards from Netronome, with switching and routing software built into their circuits, is yet another demonstration that work will try to migrate to the cheapest platform on which it can run, and that for some customers, freeing up CPU capacity in the system is important enough to pay a little more for the networking.

The kind of acceleration that Netronome is supplying is inspired by the so-called “smart NICs” and server-based networking that the big hyperscalers and cloud builders have created for their own use, and it makes that approach available to the rest of us. Amazon's acquisition of Annapurna Labs a year ago is rumored to have been aimed at creating smart NICs, and Microsoft uses FPGA-based adapters to accelerate the network on its Azure public cloud. (The same FPGA cards are also deployed to speed up page ranking algorithms on the Bing search engine and to run deep learning algorithms.) Google has created its own server-based networking, switches, and network operating system, and could have its own smart NICs, too.

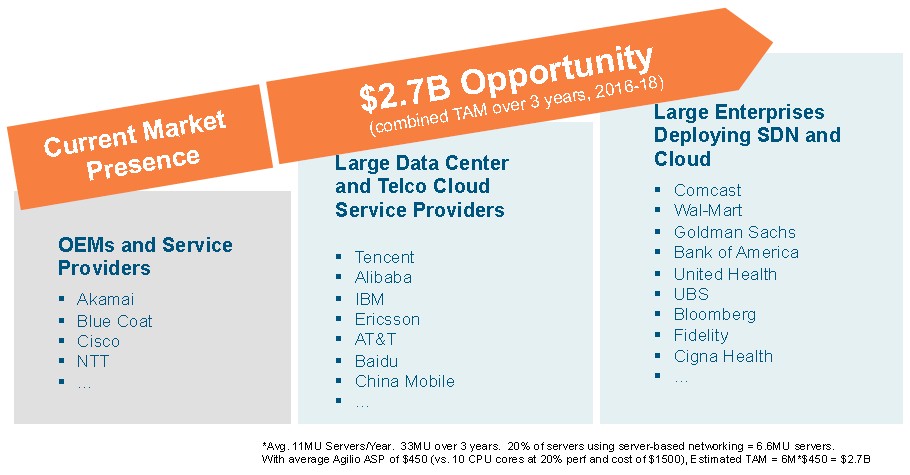

To be precise, Netronome is aiming at the next 10,000 clouds that will be built in the world by service providers, telecom companies, and large enterprises, Sujal Das, general manager of corporate strategy at the networking upstart, tells The Next Platform. “We see the server and networking in the datacenter at a critical inflection point,” Das says, and the new Agilio adapters are aimed at pushing workloads over that inflection point, off the CPU and onto the adapter.

Netronome, which was founded in 2003, licensed Intel’s XScale IXP28XX series of ARM-based network processors four years later to create a series of adapter cards that could handle some control plane functions, such as routing tables. Netronome has significantly enhanced the IXP line, and with the existing Agilio-LX family, it has carved out a tidy little niche in intelligent adapters, driving $38 million in revenues in 2013, $65 million in 2014, and $93 million last year. The LX series of adapters was aimed mainly at appliance makers and network service providers who needed to accelerate security protocols, load balancing, or software-defined networking protocols. Key early customers for Netronome include Akamai Technologies, Blue Coat Systems, Cisco Systems, NTT Communications, and ZTE. With the Agilio-CX family launched this week, Netronome is bringing out a smaller form factor at a much lower price point, which makes it far more appealing to hyperscalers and cloud builders, and the CX cards can also offload virtual switching and routing from the server to the adapter.

The way Das does the math, the total addressable market for the Agilio adapters is on the order of $2.7 billion over the next three years (inclusive), which is about 6 million adapters at an average cost of $450 a pop. That math assumes that an average of 11 million servers will be sold per year and that about one-fifth of them will be running some form of server-based networking application that can be largely offloaded from the Xeon CPUs in the system to the network adapters. For these network functions, the acceleration in the adapter card replaces about 10 CPU cores running the same software, according to Das, and he estimates those cores are worth somewhere around $1,500 in a two-socket machine using sixteen-core Xeon E5 v3 processors. The network software load on an x86 server can be as high as 12 cores, he says, which makes that number even larger: if you assume that the 16-core Xeon E5-2698 v3 chip costs around $3,000 (Intel does not publish a list price for it), then the network overhead can be worth as much as $2,250. So the value of the Xeon cores replaced is somewhere between 3X and 5X the cost of the Agilio adapter cards that Netronome has tailored for hyperscalers, cloud builders, and the enterprises that want to emulate them. Call it somewhere between $9 billion and $13.5 billion in displaced Xeon core sales, if Netronome were able to capture the entire market.
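
Das's market math can be reproduced in a few lines. The Python sketch below uses only the figures quoted above; the high-case core value is pro-rated from the assumed $3,000 price of the 16-core chip, while the $1,500 low case is Das's own estimate and is simply taken as given. The helper names are illustrative, not anything Netronome publishes.

```python
# Back-of-envelope model of Das's market math; every input is an estimate quoted in the article.

SERVERS_PER_YEAR = 11_000_000   # average annual server shipments Das assumes
YEARS = 3                       # "next three years (inclusive)"
OFFLOAD_SHARE = 0.2             # about one-fifth of servers run server-based networking
ADAPTER_PRICE = 450             # average Agilio-CX price, USD


def tam(adapters: int, price: float) -> float:
    """Total addressable market in USD."""
    return adapters * price


def core_value(cores: int, chip_price: float, cores_per_chip: int = 16) -> float:
    """Value of displaced Xeon cores, pro-rated from the price of one chip."""
    return cores * chip_price / cores_per_chip


candidate_servers = SERVERS_PER_YEAR * YEARS * OFFLOAD_SHARE  # about 6.6 million
adapters = 6_000_000            # Das's round figure for adapter units

print(f"servers that could offload: {candidate_servers:,.0f}")
print(f"TAM: ${tam(adapters, ADAPTER_PRICE) / 1e9:.1f}B")

low = 1_500                     # Das's estimate for about 10 cores
high = core_value(12, 3_000)    # 12 cores of an assumed $3,000 16-core E5-2698 v3
print(f"cores displaced per card: ${low:,} to ${high:,.0f}")
print(f"ratio to adapter price: {low / ADAPTER_PRICE:.1f}X to {high / ADAPTER_PRICE:.1f}X")
print(f"displaced Xeon value: ${adapters * low / 1e9:.1f}B to ${adapters * high / 1e9:.1f}B")
```

Running it gives a $2.7B market, a 3.3X to 5.0X value ratio, and $9.0B to $13.5B in displaced core value, matching the figures in the text.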

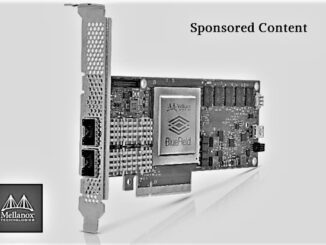

That will not happen, of course, but clearly even getting a piece of the SDN action and offloading it to Agilio cards could be a boon for Netronome. Perhaps even enough for Intel or Mellanox Technologies or Cisco or Hewlett Packard Enterprise or Dell to want to acquire the company. Intel Capital, the investment arm of the chip maker, participated in the company’s last round of funding back in April 2013; in total, Netronome has publicly announced $73.2 million in funding.

At the moment, Netronome has more than ten early adopters of the Agilio-CX series, including telecom operator China Mobile and telecom equipment maker Ericsson. The draw is not just that network function coprocessing on the Agilio adapters is cheaper, but that a specialized ASIC like the IXP family often outperforms a general-purpose CPU like a Xeon or Opteron, which is designed to run a very wide variety of workloads. Running a virtual switch, a virtual router, or a firewall as software on a standard server means you can tweak and tune that software often and keep adding features to it. Hyperscalers and cloud builders want this kind of continuous development in their network infrastructure, which is why network function virtualization (NFV) is taking off there, and the flexibility it affords is why Big Switch Networks, VMware, Juniper Networks, and others are commercializing this SDN approach.

Compounding the problem is heightened security, which is moving from a firewall on the perimeter of the network to policy-based security, with network rules governing access to virtual machines and now software containers. Das says that it is common to require 1,000 security policy rules per VM in clouds these days, which works out to 48,000 rules for a two-socket server hosting 48 VMs. Moreover, on some infrastructure running inside datacenters in China, a single server might have to juggle as many as 1 million security policy rules (yes, more than 20X as many). Shifting from VMs to containers, where a single server might host thousands of containers and a correspondingly large number of security policy rules, will make the situation even worse.
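
Das's rule counts are easy to check. The Python sketch below redoes the arithmetic and compares the results with the 2 million policy figure he cites for the NFP-4000 chip. The container loop is purely hypothetical: it assumes, for illustration only, that each container carries as many rules as a VM does, which the article does not claim.

```python
# Policy-rule arithmetic from the article, plus a hypothetical container scenario.

RULES_PER_VM = 1_000           # per-VM policy count Das calls common in clouds
VMS_PER_SERVER = 48            # two-socket server in his example
EXTREME_RULES = 1_000_000      # some servers in Chinese datacenters
NFP4000_CAPACITY = 2_000_000   # policy capacity Das quotes for the NFP-4000


def rules_per_server(workloads: int, rules_per_workload: int) -> int:
    """Total rules a server enforces if each VM or container carries its own set."""
    return workloads * rules_per_workload


def fits_on_adapter(rules: int, capacity: int = NFP4000_CAPACITY) -> bool:
    """True if the rule set fits within the quoted adapter policy capacity."""
    return rules <= capacity


typical = rules_per_server(VMS_PER_SERVER, RULES_PER_VM)
print(f"typical VM server: {typical:,} rules")                    # 48,000
print(f"extreme case is {EXTREME_RULES / typical:.1f}X larger")   # 20.8X
print(f"fits on NFP-4000: {fits_on_adapter(typical)}, {fits_on_adapter(EXTREME_RULES)}")

# Hypothetical: assume each container needs as many rules as a VM (not from the article).
for containers in (1_000, 2_000, 4_000):
    rules = rules_per_server(containers, RULES_PER_VM)
    print(f"{containers:,} containers x {RULES_PER_VM:,} rules = {rules:,}"
          f" -> fits: {fits_on_adapter(rules)}")
```

Under that assumption, a server with a few thousand containers would exceed the quoted capacity, which is the direction of Das's warning.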

Hearing this, you might expect Intel to add more network acceleration functions to future Xeons to sop up this computing demand. It might even be a function implemented on an on-package or on-die FPGA, with the gate programming sold like software by Intel. That’s one possible justification for the company’s $16.7 billion acquisition of FPGA maker Altera. Consider the value of those lost Xeon cores if all of this functionality shifted off the CPU to the network adapter. (Intel could put similar functionality on its own adapters, too.)

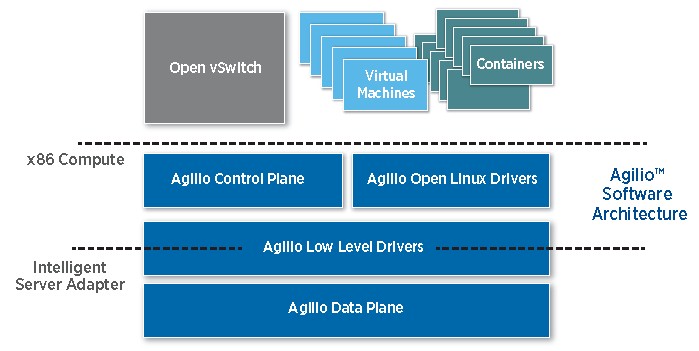

Beyond the cores it consumes, network processing on the server CPU simply cannot keep up with the workload, says Das. A twelve-core Xeon processor can only handle about 5 million packets per second, but you need somewhere around 28 million packets per second to feed a 40 Gb/sec Ethernet pipe. With the Agilio-CX cards, the network software runs largely on the adapter card, and only one Xeon core on the server is needed to run a chunk of that code. The offloading is transparent to the server and the application, just like it is with MPI offload on InfiniBand networks.
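
Packet rates depend heavily on packet size, so it helps to see where figures like these come from. The Python sketch below assumes standard Ethernet wire overhead (7-byte preamble, 1-byte start-of-frame delimiter, 12-byte inter-frame gap) and computes the maximum packet rate of a link. At minimum-size 64-byte frames, 40 Gb/sec works out to about 59.5 million packets per second; Das's 28 million figure corresponds to an average frame of roughly 159 bytes. We do not know what traffic mix he assumed, so treat this as a consistency check, not his method.

```python
# Ethernet packet-rate arithmetic; wire overhead per frame, beyond the frame itself:
# 7-byte preamble + 1-byte start-of-frame delimiter + 12-byte inter-frame gap.
WIRE_OVERHEAD = 20


def max_pps(link_bps: float, frame_bytes: int) -> float:
    """Maximum frames per second for a given frame size (headers + payload + FCS)."""
    return link_bps / ((frame_bytes + WIRE_OVERHEAD) * 8)


def avg_frame_for_pps(link_bps: float, pps: float) -> float:
    """Average frame size (bytes) at which the link saturates at the given packet rate."""
    return link_bps / (pps * 8) - WIRE_OVERHEAD


LINK_40G = 40e9
CPU_PPS = 5e6       # Das: about 5 Mpps from twelve Xeon cores
TARGET_PPS = 28e6   # Das: needed to feed a 40 Gb/sec pipe

print(f"64-byte line rate: {max_pps(LINK_40G, 64) / 1e6:.1f} Mpps")
print(f"28 Mpps implies ~{avg_frame_for_pps(LINK_40G, TARGET_PPS):.0f}-byte frames")
print(f"CPU shortfall: {TARGET_PPS / CPU_PPS:.1f}X")
# Naively assumes packet processing scales linearly across cores, which real systems rarely do.
per_core = CPU_PPS / 12
print(f"cores needed at {per_core / 1e3:.0f} kpps per core: {TARGET_PPS / per_core:.0f}")
```

Even under the generous linear-scaling assumption, matching 28 Mpps in software would take about 67 cores at the per-core rate implied by Das's figures.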

Right now, the Agilio-CX line supports the offloading of Open vSwitch virtual switches, which provide the linkage between the VMs running on server hypervisors and actual physical switches in the network. (Open vSwitch is not the only virtual switch. VMware, Cisco, NEC, and Microsoft have their own as well.) The Agilio-CX adapters support Open vSwitch 2.3 and 2.4 at the moment, and will support Open vSwitch 2.5 sometime in the middle of 2016. Linux-based firewalls can be offloaded, too, and the Contrail vRouter virtual router from Juniper will be available for offloading sometime in the next four to six months. Virtual LAN overlays such as VXLAN (championed by VMware) and NVGRE (championed by Microsoft) can also be offloaded to the Agilio adapters, as can MPLS tunnel encapsulation and decapsulation. Bulk cryptography and hashing algorithms can also be offloaded to the engines in Netronome's NFP (Network Flow Processor) chips.
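
The article does not detail how the NFP engines handle overlay traffic, but it helps to see what "encapsulation and decapsulation" means for one of the named protocols. The Python sketch below builds and strips the 8-byte VXLAN header defined in RFC 7348: a flags byte with the I bit set, 24 reserved bits, a 24-bit VXLAN Network Identifier (VNI), and 8 more reserved bits. The function names are hypothetical, and the outer Ethernet, IP, and UDP headers that a real adapter would also add are omitted.

```python
from __future__ import annotations

import struct

VXLAN_UDP_PORT = 4789   # IANA-assigned UDP destination port for VXLAN (RFC 7348)
VXLAN_FLAG_I = 0x08     # "I" flag: the VNI field is valid


def vxlan_encap(inner_frame: bytes, vni: int) -> bytes:
    """Prepend an 8-byte VXLAN header to an inner Ethernet frame (outer headers omitted)."""
    if not 0 <= vni < 1 << 24:
        raise ValueError("VNI must fit in 24 bits")
    # Word 1: flags byte followed by 24 reserved bits.
    # Word 2: 24-bit VNI followed by 8 reserved bits. Both in network byte order.
    header = struct.pack("!II", VXLAN_FLAG_I << 24, vni << 8)
    return header + inner_frame


def vxlan_decap(payload: bytes) -> tuple[int, bytes]:
    """Strip the VXLAN header from a UDP payload and return (vni, inner_frame)."""
    if len(payload) < 8:
        raise ValueError("payload too short for a VXLAN header")
    word1, word2 = struct.unpack("!II", payload[:8])
    if not (word1 >> 24) & VXLAN_FLAG_I:
        raise ValueError("I flag not set; VNI is not valid")
    return word2 >> 8, payload[8:]
```

A round trip such as `vxlan_decap(vxlan_encap(frame, 5001))` returns `(5001, frame)`. Counting the untagged outer Ethernet (14 bytes), IPv4 (20 bytes), and UDP (8 bytes) headers, VXLAN adds 50 bytes to every frame, and building or stripping them on each packet is part of the work an adapter can take off the host.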

The NFP-4000 processor that Netronome has created for the Agilio-CX adapter cards is a derivative of the XScale IXP chips from Intel (which everyone has been very careful not to point out are based on ARM cores) and can support up to 2 million security policies and drive data at 28 million packets per second, Das says. The cards have 2 GB of DDR3 main memory to store policies and help with the processing.

The prior generation of Agilio-LX adapters, which started shipping in the middle of last year, cost on the order of $3,000 for adapters with one 100 Gb/sec or two 40 Gb/sec Ethernet ports, but these cards had up to 24 GB of memory and used the more powerful 96-core NFP-6000 processor from Netronome to support truly monstrous workloads.

The Agilio-CX series has a smaller memory footprint, and presumably the NFP-4000 processor it uses has fewer active cores, too. (Our guess is that the two are essentially the same chip, with 64 cores active on the NFP-4000.) But the CX series also has a starting price of $450 a pop at high volumes per customer, which is a hyperscale kind of price. The CX cards come in two flavors, one with two 10 Gb/sec ports and another with one 40 Gb/sec port, which is not exactly hyperscale. But cards with 25 Gb/sec and 50 Gb/sec ports will start sampling in the middle of this year, aimed specifically at hyperscalers and cloud builders who want better thermals, performance, and pricing per bit than either 10 Gb/sec or 40 Gb/sec on the server currently give.

The Agilio-CX cards support Red Hat Enterprise Linux and its CentOS clone as well as Canonical Ubuntu Server, and will support Windows Server 2016 when it ships later this year. The KVM hypervisor is supported now, and Hyper-V support will come later this year, too. VMware’s NSX virtual switching is also in the works.
