Beyond the expected hardware, parallelization, and power consumption topics, one issue that keeps cropping up in exascale computing circles is reproducibility.

While it might not attract as much attention as, say, saving millions on supercomputing site power bills, the reproducibility problem is nonetheless a critical barrier to reaping the full benefits of exascale-class computing resources through the peer-review scientific process, not to mention to bringing valid experimental results into the mainstream.

In the era before complex computational simulations, scientific results and observations were scribbled in notebooks and transcribed into extensive documentation before publication. The evolution of the research could thus be tracked and backtracked, but the limitations there are obvious. And while some might suspect that, given the bevy of tools available in 2015 for snapshotting large-scale research running on modern supercomputers, the solution should be clear, there is actually a sad lack of “automagic” tooling for this. After all, shouldn’t this be relatively simple, what with everyone containerizing applications and shipping changes around seamlessly?

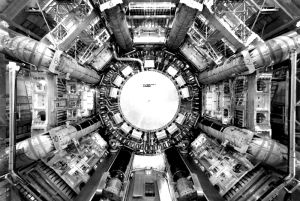

This lightweight, container-based approach might work for more common applications, but for hefty codes in areas like high energy physics, simple reproducibility is something of a holy grail. The problem, in a word, is dependencies. For codes that have been developed and distributed across wide geographic and time spans, with multiple contributors, data sources, formats, and associated file systems, there are some emerging methods for creating verifiable research, but there is still quite a bit of work to be done.

One such code is TauRoast, which the high energy physics community uses to look for signs of the Higgs boson in vast streams of data. More specifically, the code scans for detector events with particular signatures that mark spots of interest (that’s the quick version; more details here if you’re feeling distractible). It is not quite fair to call the core code itself complex. It is actually a relatively small amount of custom code, but the problem is that it cannot run without many dozens of software dependencies: some come from the operating system, some from software choices made in the high energy physics community, and others are specific to software used by individual researchers or groups. So how can these dependencies be sorted out and tracked to produce a reproducible application?
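To make the dependency problem concrete, here is a minimal sketch that walks a dependency graph breadth-first and groups everything reachable by the kind of source described above (operating system, community, individual group). The graph and every package name in it are hypothetical and far smaller than a real high energy physics stack; the point is only that one small piece of custom code pulls in a much larger transitive set, all of which a reproducible package must account for.

```python
from collections import deque

# Hypothetical dependency graph: node -> (source layer, direct dependencies).
GRAPH = {
    "analysis":      ("custom",    ["hep-framework", "yaml-parser", "group-utils"]),
    "hep-framework": ("community", ["libc", "libstdc++", "python"]),
    "yaml-parser":   ("community", ["python", "libyaml"]),
    "group-utils":   ("group",     ["hep-framework"]),
    "python":        ("os",        ["libc"]),
    "libyaml":       ("os",        ["libc"]),
    "libstdc++":     ("os",        ["libc"]),
    "libc":          ("os",        []),
}


def closure_by_layer(root: str) -> dict[str, list[str]]:
    """Breadth-first walk from root; return every reachable node grouped by layer."""
    seen = {root}
    queue = deque([root])
    by_layer: dict[str, list[str]] = {}
    while queue:
        node = queue.popleft()
        layer, deps = GRAPH[node]
        by_layer.setdefault(layer, []).append(node)
        for dep in deps:
            if dep not in seen:  # visit each dependency once, even if shared
                seen.add(dep)
                queue.append(dep)
    return by_layer


layers = closure_by_layer("analysis")
for layer, nodes in layers.items():
    print(f"{layer:10s} {nodes}")
```

Even in this toy graph, the custom code is one node out of eight; shared dependencies such as `libc` are reached from several places, which is why the walk tracks visited nodes.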

According to a group of researchers from the University of Notre Dame who are interested in this very question, the process is rife with complexity up and down the stack: “Implicit dependencies, networked resources, and shifting compatibility all conspire to break applications that appear to work well.” They have therefore targeted the reproducibility problem with a new toolkit, which has been demonstrated on a very large high energy physics application with some noteworthy success.

The challenges of application preservation, especially for codes that are complex and involve large-scale data, are somewhat easy to imagine, but add in the many dependencies and the process of creating an image to represent an entire application becomes next to impossible. For instance, the high energy physics code used in their work employed five different file systems and did not account for changes in platform or in where libraries were hosted; it was simply delivered as created, to run on a system maintained by the creator’s own system administrators. Further, reproducing a code like TauRoast requires only a relatively small number of the existing files (which are themselves part of the patchwork quilt of code behind the larger application), so capturing everything in order to store and re-execute the code in a different environment would be hugely wasteful. For the sake of brevity, this simplifies a set of problems that are anything but simple, but the upshot is that the entire process is deeply inefficient, at least for complex codes.
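The observation that a run touches only a small fraction of the visible files can be illustrated with a minimal sketch: given a trace of the paths a run actually opened and an inventory of file sizes, keep only the touched files and compare their size with capturing everything. The one-path-per-line trace format, the `plan_reduced_package` helper, and all paths and sizes below are hypothetical assumptions for illustration, not the researchers’ tooling or data.

```python
from dataclasses import dataclass


@dataclass
class PackagePlan:
    files: list[str]    # paths that must be preserved
    package_bytes: int  # size of the reduced set of files
    full_bytes: int     # size if every visible file were captured

    @property
    def fraction(self) -> float:
        return self.package_bytes / self.full_bytes if self.full_bytes else 0.0


def plan_reduced_package(trace_lines: list[str], inventory: dict[str, int]) -> PackagePlan:
    """Keep only files the traced run touched.

    Paths missing from the inventory (e.g. /proc entries) are ignored,
    since they are not regular files worth preserving.
    """
    accessed = {line.strip() for line in trace_lines if line.strip()}
    kept = sorted(p for p in accessed if p in inventory)
    return PackagePlan(
        files=kept,
        package_bytes=sum(inventory[p] for p in kept),
        full_bytes=sum(inventory.values()),
    )


# Hypothetical inventory (path -> bytes) of everything the machine can see.
inventory = {
    "/cvmfs/sw/lib/libCore.so": 40_000_000,
    "/cvmfs/sw/lib/libUnused.so": 900_000_000,
    "/home/user/analysis/config.py": 4_000,
    "/hdfs/data/all_events.root": 2_000_000_000,
}
# Hypothetical access trace: one path per line.
trace = [
    "/cvmfs/sw/lib/libCore.so\n",
    "/home/user/analysis/config.py\n",
    "/proc/self/maps\n",
]

plan = plan_reduced_package(trace, inventory)
print(plan.files)
print(f"{plan.package_bytes} of {plan.full_bytes} bytes ({plan.fraction:.1%})")
```

The design choice worth noting is that the trace, not the file system, defines what gets kept; anything the run never opened is left behind, which is where the savings come from.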

“While it might be technically possible to automatically capture the entire machine and all the connected file systems into a virtual machine image, it would require 166 terabytes of storage, which would be prohibitively expensive for capturing this one application alone,” the researchers note.

To begin creating a reproducible application, they evolved it through several stages, building a map of dependencies that makes it possible to “move, transform, and manipulate the dependencies of the artifact without damaging the artifact itself.” As they explain, “Given an abstract script and dependency map, it is straightforward for an automated tool to examine the dependencies in the map, download the missing ones, then modify the map to point to the local copies of the dependencies.” To package these dependencies, the team developed a prototype tool to measure and preserve the implicit dependencies, and used another tool, Parrot, to record every file the application accessed and so see how many dependencies came into play. The result is what they call a reduced package, containing only the files the application actually uses and eliminating the waste and overhead that capturing the whole environment would otherwise carry.
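The quoted workflow (examine the map, download what is missing, re-point the map at local copies) can be sketched in a few lines of Python. This is not the team’s prototype tool: the map format (a dict from abstract names to local paths or URLs), the `localize` and `fake_fetch` helpers, the cache layout, and the `$name` placeholder syntax for the abstract script are all assumptions made for illustration.

```python
import tempfile
from pathlib import Path
from string import Template
from typing import Callable, Dict


def localize(dep_map: Dict[str, str], cache_dir: Path,
             fetch: Callable[[str, Path], None]) -> Dict[str, str]:
    """Return a copy of dep_map in which every entry points at a local file.

    Entries that already exist on this machine are kept; anything else is
    treated as remote, fetched once into cache_dir, and re-pointed there.
    """
    cache_dir.mkdir(parents=True, exist_ok=True)
    local_map: Dict[str, str] = {}
    for name, location in dep_map.items():
        if Path(location).exists():
            local_map[name] = location
            continue
        target = cache_dir / name  # cache file named after the abstract name
        if not target.exists():
            fetch(location, target)  # download the missing dependency
        local_map[name] = str(target)
    return local_map


def fake_fetch(url: str, target: Path) -> None:
    # Hypothetical stand-in for a real download,
    # e.g. urllib.request.urlretrieve(url, target).
    target.write_text(f"downloaded from {url}\n")


with tempfile.TemporaryDirectory() as tmp:
    tmp_path = Path(tmp)
    local_tool = tmp_path / "analysis.py"
    local_tool.write_text("print('analysis')\n")

    dep_map = {
        "analysis": str(local_tool),                        # already local
        "events": "https://example.org/data/events.root",  # hypothetical remote
    }
    local_map = localize(dep_map, tmp_path / "cache", fake_fetch)

    # The abstract script names dependencies only through the map.
    script = Template("python $analysis --input $events")
    command = script.substitute(local_map)
    fetched = Path(local_map["events"]).read_text()
    print(command)
```

Because the script refers to dependencies only by name, moving the artifact to a new machine means rewriting the map, not the script, which is the property the researchers describe.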

To test the results, they ran the repackaged code on Amazon EC2 (which uses a modified version of the Xen hypervisor), on a KVM-based system at Notre Dame, and on a static cluster at the university, showing significant speedups. The full results of the research can be found here.

The researchers admit that there are still plenty of gaps in creating efficient, reproducible, packaged applications, but this is a step in the right direction. It builds on work going on across the high performance computing community as the next batch of pre-exascale systems begins to arrive and, hopefully, supports even beefier parallel codes that will drive a new generation of scientific discoveries. This is certainly not the only work in this space (reproducibility is a target for several domains and their specific applications), but it is worth calling out, as the issue will only compound as scientific codes continue to evolve, which, as we know, they do quickly.
