The core counts keep going up and up on server processors, and that means system makers do not have to scale up their systems as far to meet a certain performance level. So in that regard, it is not surprising that the new Sparc M7 systems from Oracle, which debuted this week at its OpenWorld conference, start out with a relatively modest number of sockets compared to its architectural limits.

It is not without a certain amount of irony that we realize that Oracle is pushing its own Sparc-Solaris scale-up machines while at the same time saying that the similarly architected Power Systems from IBM are not suitable for the cloud and should be replaced by Oracle systems.

The fact is, only about two-thirds of the Oracle installed base is running its software on Intel iron (about 200,000 customers), while the remaining 110,000 in the Oracle customer base are running on a variety of other machines, mostly Sparc, Power, and Itanium machines based on a Unix operating system. The fact that Oracle continues to invest in Sparc machines and the Solaris operating system – and importantly is doing a better job at making chips and machines on a regular schedule than Sun Microsystems – speaks to the company’s continued commitment to the Solaris platform. How long this commitment continues will depend largely on investments companies make in Sparc gear. Oracle would no doubt like to be able to control its entire stack, but it is hard to put that X86 genie back into the bottle.

Oracle did not, by the way, launch entry and midrange machines based on the “Sonoma” Sparc processor, which it unveiled at the Hot Chips conference back in August of this year.

The Sonoma processors, which presumably will not be called the Sparc T7s but maybe they will, are interesting for two reasons. First, the Sonoma chips are designed for scale-out clusters and include on-chip InfiniBand ports, the first time that Oracle has added InfiniBand links to one of its homegrown processors and quite possibly the first time that any chip maker has done so. The move mirrors Intel’s own plans to add Omni-Path ports to future Xeon and Xeon Phi processors. Logically, these Sonoma chips would have been called the Sparc T7s, but the servers that are called by that name are actually based on the Sparc M7 chips. (Yes, this will confuse customers.)

The second reason that the Sonoma chips are interesting is that last year at this time, Oracle said it was canceling the T series chips, and Sonoma looks like what a future T series processor might look like. So what gives? We suspect Oracle was being cagey, as happens, and that the Sonoma chips are aimed at slightly different targets. It would be logical that Oracle might call Sonoma the Sparc C series, short for cloud, and the systems will similarly be branded with Sparc C, and that Oracle will be, as many have suggested along with us at The Next Platform, using it to build its own infrastructure supporting its cloud aspirations.

A Year Of Waiting

The Sparc M7 processor made its debut at the Hot Chips conference in 2014, and it is one of the biggest, baddest server chips on the market. And with the two generations of “Bixby” interconnects that Oracle has cooked up to create ever-larger shared memory systems, Oracle could put some very big iron with a very large footprint into the field, although it has yet to push those interconnects to their limits. The first generation Bixby interconnect, which debuted with the Sparc M5 machines several years ago, was able to scale up to a whopping 96 sockets and 96 TB of main memory in a single system image, although Oracle only shipped Sparc M5 and Sparc M6 machines that topped out at 32 sockets and 32 TB of memory. With the Sparc M7 processors, Oracle has a second generation of Bixby interconnect that tops out at 64 sockets and 32 TB of memory, as John Fowler, executive vice president of systems, told us last year when the M7 chip was unveiled. The Sparc M7 systems that use this chip currently top out at 16 sockets and 8 TB of main memory, which is considerably less than the theoretical limits that Oracle could push.

To put it bluntly, having 512 GB of main memory per socket, as the Sparc M7 chip does, is not that big of a deal, not when IBM can do 1 TB per socket with its Power8 chips and Intel can do 1.5 TB per socket with the Xeon E7 v3. (The Xeon E5 v3 chips have half as many slots and half as much capacity as the Xeon E7 v3 chips.) Such large capacities on these Power8 and Xeon E7 v3 machines require 64 GB memory sticks, which Oracle is not supporting with the Sparc M7 servers – at least not yet – and if it did it could match the capacity of the Power8 chip on a per-socket basis. But with Oracle doing so many tricks in hardware to compress data and to make this available to its systems and database software, and with Oracle using flash to accelerate storage performance and to augment the main memory capacity, it makes a certain amount of sense for Oracle to stick with slightly lighter memory configurations and leave some headroom with 64 GB sticks should customers need them for larger memory footprints.

Inside The Sparc M7

The M7 chip is the sixth Sparc chip that has come out of Oracle since it paid $7.4 billion in January 2010 to acquire the former Sun Microsystems. Oracle and Sun were the darlings of the dot-com era and together comprised the default hardware and database platform for myriad startups of that time, driving their revenues and market capitalizations skyward. Oracle had been branching out into applications and kept growing through the bust, while Sun was crushed by intense competition from IBM’s Power Systems and then besieged by legions of Linux systems based on X86 processors. The Sun inside of Oracle may be a lot smaller, but say what you will – it is a lot more focused and it is delivering step functions in performance gains for real enterprise applications, and with a real sales and marketing machine behind it. Sun did not do this as an independent company, and suffered the consequences.

The M7 is a beefy motor, with over 10 billion transistors in it. The chip is etched using 20 nanometer processes, and Taiwan Semiconductor Manufacturing Corp is Oracle’s foundry, as it has been for prior generations of Sparc T and M series chips and was for Sun Microsystems towards the end of its era.

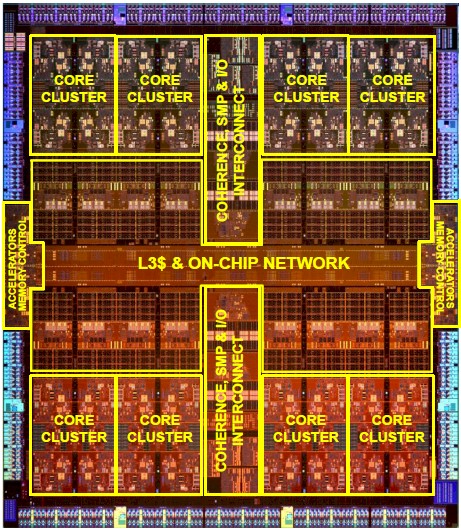

Like the Sonoma Sparc chip that Oracle talked about this year, the Sparc M7 chip is based on the S4 core design, which offers up to eight dynamic execution threads per core. The cores on the M7 chip are bundled in groups of four, with each S4 core having 16 KB of L1 data cache and 16 KB of L1 instruction cache. Each set of four cores has a 256 KB L2 instruction cache, and each pair of S4 cores also has a 256 KB writeback data cache. There are eight core groups on the M7 die, for a total of 32 cores and 256 threads. There is an 8 MB shared L3 cache for each core group, for a total of 64 MB of L3 cache on the die.
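The per-chip totals follow directly from the core group layout. As a minimal sketch, using only the figures quoted above (the function and constant names are ours, and the L2 total is our own derivation rather than a number Oracle has published), the arithmetic looks like this:

```python
# Per-chip totals for the Sparc M7, built only from figures quoted in the text.
CORE_GROUPS = 8          # core groups per die
CORES_PER_GROUP = 4      # S4 cores per group
THREADS_PER_CORE = 8     # dynamic threads per S4 core
L3_MB_PER_GROUP = 8      # shared L3 cache per core group
L2_ICACHE_KB = 256       # one L2 instruction cache per group of four cores
L2_DCACHE_KB = 256       # one L2 writeback data cache per pair of cores


def m7_chip_totals():
    """Return core, thread, and cache totals for one M7 die."""
    cores = CORE_GROUPS * CORES_PER_GROUP
    # Per group: one shared I-cache plus one D-cache for each pair of cores.
    l2_kb_per_group = L2_ICACHE_KB + (CORES_PER_GROUP // 2) * L2_DCACHE_KB
    return {
        "cores": cores,
        "threads": cores * THREADS_PER_CORE,
        "l3_mb": CORE_GROUPS * L3_MB_PER_GROUP,
        "l2_kb": CORE_GROUPS * l2_kb_per_group,  # derived, not an Oracle figure
    }


print(m7_chip_totals())
```

The cores, threads, and L3 values match the 32 cores, 256 threads, and 64 MB cited above.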

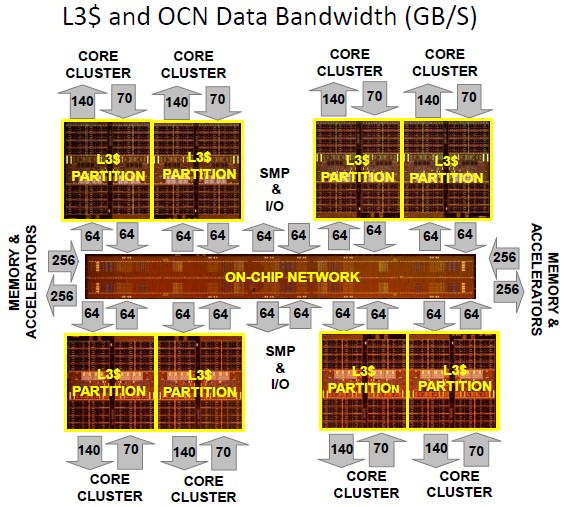

At 1.6 TB/sec, the aggregate L3 cache bandwidth for the Sparc M7 is 5X that of the Sparc M6 chip it replaces, and 2.5X that of the Sparc T5 chip used in entry and midrange systems. The M7 chip has four DDR4 memory controllers on the die and using 2.13 GHz memory has demonstrated 160 GB/sec of main memory bandwidth. The four PCI-Express 3.0 interfaces on the chip have an aggregate of more than 75 GB/sec of bandwidth, which is twice that of the Sparc T5 and M6 processors. Depending on the workload, the M7 chip delivers somewhere between 3X and 3.5X the performance of the M6 chip it replaces – the tests that Oracle ran include memory bandwidth, integer, floating point, online transaction processing, Java, and ERP software workloads – so you can understand why the biggest Sparc M7 server only has 16 sockets instead of 32 or 64, as is possible with the Bixby-2 interconnect. By the way, IBM did the same thing moving to Power8, boosting the per-socket performance and cutting back on the sockets, and arguably the Superdome X machine from Hewlett-Packard does the same while also making a shift from Itanium to Xeon E7 processors.

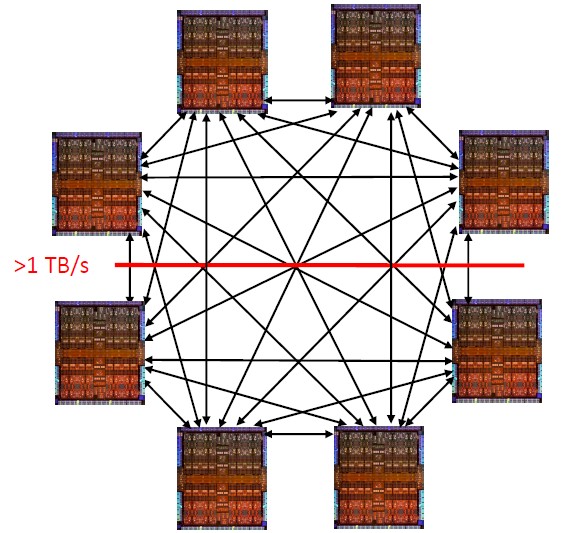

The Sparc M7 has on-chip NUMA clustering capabilities that allow for up to eight of the processors to be linked together in what looks more or less like an SMP cluster as far as Solaris and its applications are concerned. This allows, in theory, for a single system with up to 256 cores, 2,048 threads, and 16 TB of memory – with 1 TB/sec of bi-section bandwidth across that NUMA interconnect. The links making up the NUMA interconnect run at 16 Gb/sec to 18 Gb/sec, and there is directory-based coherence as well as alternate pathing across the links to avoid congestion.

The NUMA links can be used to make four-socket system boards that are then in turn linked to each other by the Bixby-2 interconnect ASICs, and to make a 32-socket machine, it looks like this:

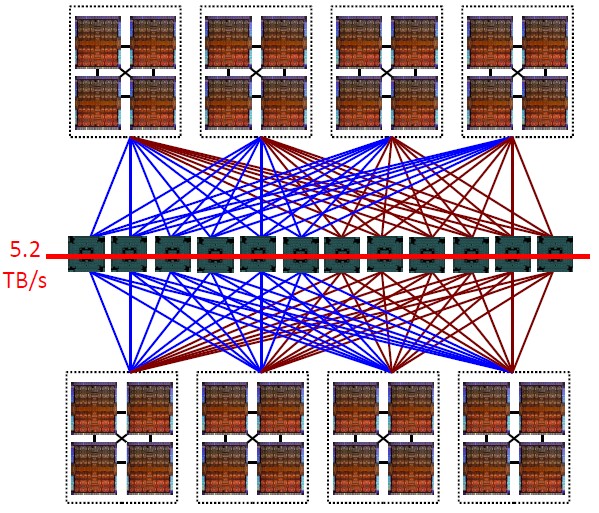

That is two Bixby switch groups with six ASICs each, with a total bi-section bandwidth of 5.2 TB/sec. That is 4X the bandwidth of the 32 socket Sparc M6 system that it replaces, although you cannot get a 32 socket machine from Oracle out of the catalog. The M7 machine with 16 sockets is just half of this setup. The 32 socket M7 machine allows up to 1,024 cores, 8,192 threads, and up to 64 TB of memory (in theory, remember).
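The system-level figures are simple multiples of the socket count. The sketch below assumes 2 TB per socket for the theoretical limits (which is what 16 TB across eight sockets implies) and 512 GB per socket for the machines Oracle is actually shipping. The function name and parameters are ours, for illustration only:

```python
CORES_PER_SOCKET = 32    # Sparc M7 cores per chip
THREADS_PER_CORE = 8     # threads per S4 core


def system_totals(sockets, tb_per_socket=2.0):
    """Return (cores, threads, memory in TB) for a machine with `sockets` M7 chips.

    tb_per_socket defaults to the theoretical 2 TB implied by the article's
    totals; pass 0.5 for the 512 GB per socket of the shipping systems.
    """
    cores = sockets * CORES_PER_SOCKET
    return cores, cores * THREADS_PER_CORE, sockets * tb_per_socket


for sockets in (8, 16, 32):
    print(sockets, "sockets, theoretical:", system_totals(sockets))

# The shipping 16-socket machine at 512 GB per socket.
print(16, "sockets, shipping:", system_totals(16, tb_per_socket=0.5))
```

With 0.5 TB per socket, the 16-socket case comes out at 8 TB, which is the current ceiling on the machines in the catalog.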

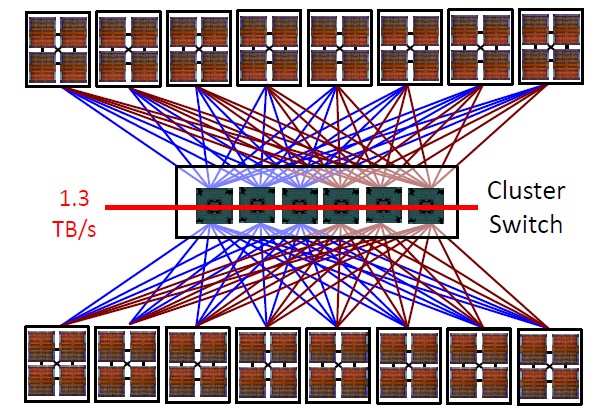

For those who want an even larger Sparc M7 machine, the architecture can push up to 64 sockets, as shown:

In this case, a set of six Bixby ASICs with 64 ports each are set up as a switch cluster and customers can build NUMA clusters using four-socket nodes. If you really want to push the limits, you can use eight-socket M7 server nodes as elements in this coherent memory machine, or you could, if your workload needed it, use two-socket nodes, too. The issue here is that the bi-section bandwidth across that Bixby switch cluster drops to 1.3 TB/sec. It is not clear what this bandwidth drop does to performance, but it may be largely academic unless someone wants to build a 64 socket machine with 128 TB of memory.

Oracle would surely take the order should it come in.

One interesting thing: The Bixby interconnect allows for coherent links for shared memory, but it also has a mode that is non-coherent, allowing for Oracle Real Application Clusters (RAC) clustering software to be run on the machines and use the Bixby network to synchronize nodes in the RAC instead of InfiniBand. Presumably, Bixby has lower latency and higher bandwidth than the 40 Gb/sec InfiniBand that Oracle has been using in its database clusters. (It would be interesting to see how Bixby stacks up against 100 Gb/sec InfiniBand and Ethernet, both of which are now available, too.) The Sonoma Sparc chip has two 56 Gb/sec InfiniBand ports on it, as we previously reported.

The Sparc M7 chips have native encryption accelerators on each core that support just about every kind of encryption and hashing scheme that is useful in the enterprise. The processor also has what Oracle is now calling the Data Analytics Accelerator, or DAX, which are in-memory query acceleration engines that speed up database routines. By the way, the eight DAX accelerators on the M7 chip are not tied to Oracle’s own software, and are enabled in Solaris and available for any database maker who wants to tap into them. The M7 chip also has compression and decompression accelerators, which run at memory speed and allow for Oracle to get a lot done in the Sparc server’s memory footprint. The chips also sport what Oracle calls Silicon Secured Memory, a fine-grained memory protection scheme, which our colleague, Chris Williams, over at The Register, provides an excellent explanation of.

One other interesting thing about Oracle’s chips. They have one SKU, as has been the case for many years, and it runs at 4.13 GHz in this case. It makes the choosing and capacity planning easier, that’s for sure.

The Sparc T7 And M7 Systems

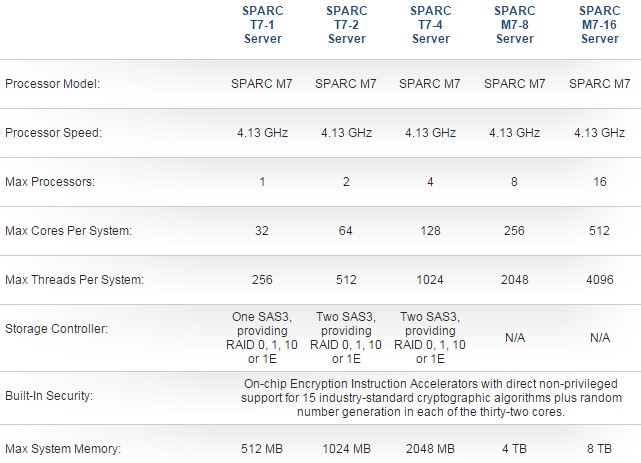

For systems, Oracle is keeping the Sparc T name for entry and midrange machines even though the T machines are not using Sparc T series processors. (Yes, this is probably not a good idea.) Here are the basic feeds and speeds of the machines using the M7 chips:

As you might expect, Oracle does not perceive these machines as commodities and it is not pricing them as such. A base configuration of the entry rack-mounted Sparc T7-1, which means it has one processor, is configured with 128 GB of memory and two 600 GB SAS 2.5-inch disks in its 1U pizza box enclosure. It has Solaris 11 preinstalled and costs $39,821, with the first year of premier support for systems (which covers the hardware and the software) running $4,779. A heftier configuration with the full 512 GB of memory and eight 1.2 TB disks will cost $58,610 and support runs to $7,033 per year. (Yes, support scales with system price, at 12 percent of the cost, not with the processor configuration or other base features.)
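The 12 percent support rate can be checked against the two list prices quoted. This is a rough sketch; the function name is ours, and rounding to the nearest dollar is an assumption about how Oracle arrives at its published figures:

```python
SUPPORT_RATE = 0.12  # annual premier support as a share of system price


def annual_support(system_price):
    """Estimate yearly support cost, rounded to the nearest dollar (assumed)."""
    return round(system_price * SUPPORT_RATE)


print(annual_support(39_821))  # base Sparc T7-1
print(annual_support(58_610))  # full Sparc T7-1 configuration
```

Both results land on the quoted support prices of $4,779 and $7,033.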

The Sparc T7-2 system is a 3U rack-mounted machine that has six drive bays. The base machine has two M7 chips, as the name suggests, with 256 GB of main memory and two 600 GB SAS drives, and it costs $81,476. A full Sparc T7-2 configuration with 1 TB of memory and six 1.2 TB drives will cost $110,204.

Oracle is not providing pricing for the four-socket Sparc T7-4, the eight-socket Sparc M7-8, or the 16-socket Sparc M7-16. But generally speaking, Oracle has kept its prices pretty linear in recent years across the T and M series of machines and within the two lines as well.

So that would lead us to believe that a configured four-socket Sparc T7-4 machine should run maybe $235,000, an eight-socket Sparc M7-8 machine around $500,000, and a sixteen socket Sparc M7-16 machine around $1 million. These are with full memory and a decent amount of local disk storage. A year ago, a Sparc M6-32 with 32 of the 3.6 GHz Sparc M6 processors (that’s 384 cores) and 16 TB of memory cost $1.21 million. (That was the same memory per socket.) The Sparc M7 has considerably more performance – again, somewhere between 3X and 3.5X depending on the workload, with about half of that coming from core count and clock speed increases and the rest coming from architectural changes. If our guess about pricing is right, Sparc shops are in for a big boost in bang for the buck – something on the order of a factor of 3.9X if you can believe it, and we did the math three times because we thought it could not be right, too. But customers will have to shell out some dough to get that value.
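For readers who want to rerun the bang-for-the-buck math, here is a minimal sketch using the M6-32 price, our $1 million guess for the M7-16, and 3.25X as the midpoint of the 3X to 3.5X range. The function name is ours, and how the performance multiple is applied is an assumption: the 3.9X figure comes out when the multiple is applied machine to machine, while treating it strictly as a per-socket uplift across half as many sockets gives a smaller gain.

```python
M6_32_PRICE = 1_210_000        # Sparc M6-32 with 16 TB, a year ago
M7_16_PRICE_GUESS = 1_000_000  # our estimate, not an Oracle list price
SPEEDUP = 3.25                 # midpoint of the 3X to 3.5X range


def bang_for_buck_gain(perf_ratio, old_price, new_price):
    """Improvement in performance per dollar: perf ratio over price ratio."""
    return perf_ratio / (new_price / old_price)


# Reading 1: the multiple applies machine to machine (M7-16 versus M6-32).
system_level = bang_for_buck_gain(SPEEDUP, M6_32_PRICE, M7_16_PRICE_GUESS)

# Reading 2: the multiple applies per socket, and the M7-16 has half the sockets.
per_socket = bang_for_buck_gain(SPEEDUP * 16 / 32, M6_32_PRICE, M7_16_PRICE_GUESS)

print(f"machine-to-machine reading: {system_level:.2f}X")
print(f"per-socket reading:         {per_socket:.2f}X")
```

Either way the gain is large, and it moves in direct proportion to whatever the real M7-16 price turns out to be.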

Our guess is that the Sonoma systems, whenever they come out and likely around this time next year if history is any guide, will push the price points quite a bit lower for systems and therefore for the clusters that Oracle no doubt wants to build for itself and its customers.

“so you can understand why the biggest Sparc M7 server only has 16 sockets instead of 32 or 64, as is possible with the Bixby-2 interconnect.”

No, I didn’t. Kindly elaborate. A somewhat easier-to-understand explanation would help.

I thought the first sentence conveyed it, but maybe not.

The M6 had 12 cores, and the M6-32 server had 32 sockets, for a total of 384 cores running at 3.6 GHz. With the M7, Oracle has 32 cores per socket, and the cores run at 4.13 GHz, for a total of 512 cores in a 16-socket machine. That’s 33 percent more cores and 15 percent more clocks in half the space. Add in the other features, such as microarchitecture improvements and accelerators, and the M7 socket has around 3.25X the performance of the M6 socket on real-world code, according to Oracle. So you don’t need a 32-socket M7 machine to have more oomph than a 32-socket M6. You can do it with a 16-socket M7 and have room to spare in the future for upgrades, if customers hit the performance ceiling.
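Here is the same comparison as a quick calculation, for anyone who wants to check it. The dictionary layout and names are just for illustration, and the 3.25X per-socket figure is Oracle’s claim rather than something measured here:

```python
M6_32 = {"sockets": 32, "cores_per_socket": 12, "ghz": 3.6}
M7_16 = {"sockets": 16, "cores_per_socket": 32, "ghz": 4.13}
SOCKET_SPEEDUP = 3.25  # M7 socket versus M6 socket, according to Oracle


def total_cores(system):
    """Total cores in a machine: sockets times cores per socket."""
    return system["sockets"] * system["cores_per_socket"]


core_gain_pct = (total_cores(M7_16) / total_cores(M6_32) - 1) * 100
clock_gain_pct = (M7_16["ghz"] / M6_32["ghz"] - 1) * 100
# How many M6 sockets a 16-socket M7 is worth at the claimed per-socket speedup.
m6_socket_equivalents = M7_16["sockets"] * SOCKET_SPEEDUP

print(total_cores(M6_32), "cores vs", total_cores(M7_16), "cores")
print(f"{core_gain_pct:.0f} percent more cores, {clock_gain_pct:.0f} percent more clocks")
print(f"16 M7 sockets are worth about {m6_socket_equivalents:.0f} M6 sockets")
```

At 3.25X per socket, 16 M7 sockets do the work of roughly 52 M6 sockets, which is why a 16-socket box can stand in for the old 32-socket one.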

The M7 goes to 2TB ram per chip, I don’t understand why the entry level T7 server maxes at 512GB?

For business enterprise workloads, SMP RISC servers beat clusters such as the x86 SGI UV2000. The archetype is SAP benchmarks. The fastest SAP benchmarks are all on RISC CPUs. The 32-socket Fujitsu machine has 844,000 SAPS, whereas all x86 machines are far, far below. That is because x86 does not scale to large SMP servers. Compare the fastest x86 to UNIX and you will see that x86 does not stand a chance.

There are no UV2000 benchmarks on the SAP list. The reason is that the 256-socket UV2000 runs only HPC number crunching workloads. HPC workloads typically run a tight for loop on each CPU, solving the same PDE repeatedly on the same grid, over and over again. This means that a small amount of data is used over and over again, and it fits into the CPU cache. So each node can run at full speed, only accessing the data in the CPU cache. These servers serve only one scientist who starts up one large compute job running for days.

OTOH, business software servers such as SAP serve thousands of users at the same time on one single server, each user doing something else. One user does accounting, another does salary, analytics, etc. This means the source code branches all over the place, with no tight for loops. This means all the users’ data cannot fit into the CPU cache when serving thousands of users at the same time. This means the software needs to reach out to slow RAM all the time, bypassing the CPU cache. Say RAM latency is 100 ns, which is the equivalent of a 10 MHz CPU. Remember those?

The problem with the SGI UV2000 and similar clusters is that when you go beyond 64 sockets, say to 256 sockets, the CPUs are not all connected to each other; there would be 256 over 2 links, which is 32,640, or roughly 65,000 if each direction is counted separately. Compare how many connections there are in the 64 sockets topology above, such a mess! Now imagine 256 sockets and 65,000 connections. That is not doable, therefore you need to introduce switches to connect each CPU to another when they need to communicate. There are no connections between CPUs unless they are created by the switches. This adds latency, making it worse than 100 ns. This is the reason you cannot go above 64 sockets for business workloads. This is why nobody runs SAP on SGI clusters. You need an all-to-all topology, not a switched topology between the CPUs created by NUMAlink7.
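To put numbers on the all-to-all argument: a fully connected topology needs n(n-1)/2 point-to-point links, or twice that if each direction is counted as its own connection. This is only a counting sketch with a made-up function name, and it says nothing about how any vendor actually wires its interconnect:

```python
from math import comb


def all_to_all_links(sockets):
    """Point-to-point links needed to connect every socket to every other one."""
    return comb(sockets, 2)  # n * (n - 1) / 2


for sockets in (8, 64, 256):
    links = all_to_all_links(sockets)
    print(f"{sockets:>3} sockets: {links:>6} links, {2 * links:>6} directed connections")
```

Going from 64 to 256 sockets multiplies the link count by about 16, which is why large machines fall back on switches.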

Here are some Sparc M7 world records, typically 3x faster than the fastest x86 v3 CPU, and IBM power8. Going up to >10 faster for database workloads

https://blogs.oracle.com/BestPerf/

SGI UV2000 is NOT a cluster, it’s a ccNUMA platform, similar to an HP Superdome, or an Intel x86 dual or quad socket server, i.e., it’s a single system image shared memory system, with a single OS. A cluster in contrast is a distributed memory platform, e.g., each cluster node is a shared memory machine with its own OS copy.

Also to say UV2000 will only run HPC applications is dumb. It may not run them as efficiently as a crossbar based SMP, but it will run them.

@ToddRundgren,

Sorry to bust your illusions, but SGI UV2000 is only suitable for scale-out clustered workloads. There are NO customers using the UV2000 for scale-up workloads, such as enterprise business work like SAP, databases, etc.

Sure, even a 512MB PC loaded with Linux might be able to run the largest SAP configuration with large enough swap space on disk – but it will not be pretty. It would be stupid to run that in production. The same with UV2000, it is impractical for use of anything other than clustered scale-out workloads, such as HPC number crunching, or analytics running on each node such as Oracle TimesTen in memory database which UPC does.

I have actually seen ONE database benchmark on the UV2000 for persistent storage, storing data on disks, but the database benchmark used…8 sockets. Not larger. With any database configuration larger than 8 sockets, performance grinds to a halt. If you look at the SGI web site on the UV2000, all the 30+ customer stories are about clustered workloads. No one uses them to run SAP or databases (for storing data on disk like Oracle, DB2, etc). The database use cases with the UV2000 are all about analytics: read-only in-memory databases. Which means you don’t have to do complex locking when several processes try to edit the same data on disk at the same time, etc. In-memory databases just scan the data in parallel on each node in the UV2000 without worrying about edits on disk. In-memory databases do not store data on disk. They are used just like data warehouses – that is, for analytics.

BTW, I actually emailed a SGI sales rep asking for articles, customer stories, anything, using UV2000 for scale-up workloads such as SAP, databases, etc. The SGI sales rep told me that they recommend the UV300H with 16/32- sockets for scale-up workloads instead. I asked why UV2000 won’t do instead, and he just did not answer. I pressed hard, and he kept talking about the UV300H instead. At the end he wondered why I asked these questions about the UV2000, and he stopped replying to my emails. I have the entire mail conversation here.

So, SGI can call the UV2000 whatever they want, SMP, ccNUMA, etc – but in practice it is only used for clustered scale-out workloads. So, in practice, it is a cluster for all intents and purposes. No one uses it for scale-up workloads. You are free to call the UV2000 whatever you want, but in effect it is a cluster. If you disagree, you are welcome to post scale-up benchmarks, such as SAP or TPC benchmarks or anything else. You will not find any such benchmarks. You can start with the SGI website with all the customer stories (all are about clustered workloads). So, you need to relearn. Just look at the use cases and customers – ALL of them are about scale-out.

The SPARC M7 supports up to 2TB per chip using latest generation DDR4-2133/2400/2667 MHz. Apparently the technology for higher density DDR4 DIMMs is relatively new, and Oracle is evaluating those to offer increases in the memory capacity for the systems sometime in the future. Also note that SPARC M7 has SWiS including an In-Memory Query Accelerator allowing the SPARC M7 to deal with decompressing in real-time compressed data in memory, therefore requiring less memory.

FYI. The pricing in the article is much higher than the actual pricing. And FYI, SPARC M7 price/performance is quite linear across the entire product line, so there’s no “penalty” for moving to higher end, more socket, higher RAS configs. Something that neither x86 nor Power8 platforms have.

SPARC T7-1 from $39,821 (128GB Ram) to $58,610 (512GB Ram)

SPARC T7-2 from $81,476 (256GB Ram) to $110,204 (1TB Ram)

SPARC T7-4 from $106,151 (256GB Ram) to $221,639 (2TB Ram)

SPARC M7-8 approximately 2x SPARC T7-4

SPARC M7-16 approximately 2x SPARC M7-8.