Sometimes, the customers get out ahead of the vendor community and they lead. This is precisely what happened to Univa, the commercial entity behind the Grid Engine cluster workload scheduler, with regard to Docker containers.

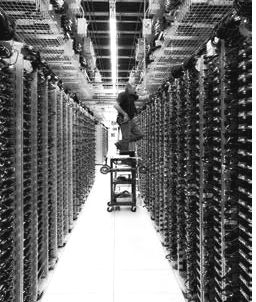

A workload scheduler like Grid Engine is at the heart of the distributed computing platform at HPC centers, enterprises, and government and academic research institutions, and companies do not want to have multiple schedulers running silos of workloads on multiple clusters if they can avoid it. Moreover, the most efficient way to use a cluster is to make the largest cluster possible and then schedule the most jobs possible on that big machine rather than on a bunch of smaller clusters. Scaling out jobs across more nodes allows them to finish quicker in many cases, so the bigger cluster also can boost overall throughput.

Given all this, it is understandable why customers using Grid Engine have been pushing Univa to integrate support for Docker containers with the workload scheduler. In fact, a number of customers wanted Docker so badly that they figured out how to hack it onto Grid Engine themselves, Gary Tyreman, CEO at Univa, tells The Next Platform. One such customer was the Centre for Genomic Regulation in Barcelona, Spain, and Univa dispatched some of its techies to the research institution to compare notes on how it was implementing Docker on top of Grid Engine. The result, along with input from a bunch of other spearheading customers, is Grid Engine Container Edition, which is shipping to some early accounts now and which will be generally available shortly, says Tyreman.

Grid Engine Container Edition automates the process of deploying the Docker Engine runtime for containers on top of clusters of servers and then dispatching container images from a repository such as Docker Hub, the public repository run by Docker (the company), or Docker Hub Enterprise, the on-premises variant of the repository. In a way, the Docker container and runtime is just another kind of application that Grid Engine deploys on top of a Linux instance on a server, so technically speaking, that is the easy part. The hard part – and what makes the official integration useful – is that it plugs into the metering, chargeback, security, and load balancing features of Grid Engine, just like any other workload does. This latter bit is what is important to Grid Engine customers, particularly those in large enterprises who depend on these features so lines of business only get their fair share of capacity and pay their fair share of the IT bill.

The extensions for Grid Engine to natively support Docker will work on Grid Engine 8.3 and 8.3.1, the two latest releases, and Tyreman says there is no technical reason why they can’t work with Grid Engine 8.2, although the company is not sure if it will backport support that far. Container Edition does not require the Kubernetes container controller, which was open sourced by Google and is now controlled by the Cloud Native Computing Foundation, the group that the search engine giant set up back in July when the 1.0 release of the software was released into the wild. The Container Edition similarly does not have any dependencies on the Mesos cluster controller or the Universal Request Broker add-on that Univa announced back in April. With this latter bit of software, rather than graft Mesos on top of Grid Engine, Univa has implemented the Mesos APIs on top of Grid Engine so it can look and feel like Mesos to applications that have been coded for that cluster controller and its frameworks but actually be running atop Grid Engine.

Pricing for the Container Edition has not been set yet, but it will carry an incremental charge over the base Grid Engine license and customers will be able to add this feature to existing clusters. The base Grid Engine software costs $100 per core per year to license, including a support contract, and the URB add-on costs another $50 per core per year for the machines that make use of it. Univa does not expect that companies will necessarily buy licenses to either URB or Container Edition that span their entire clusters, but rather for the number of cores where Mesos or Docker jobs will run. Docker is, for the moment, a Linux phenomenon, but Docker will eventually run atop Windows Server 2016 and if enough customers want it, Univa can bring Docker-on-Windows support to Grid Engine. (Univa started supporting Windows Server with Grid Engine with its 8.2.0 release last September.)
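To make the per-core licensing arithmetic concrete, here is a minimal sketch in Python. The $100 and $50 per-core figures come from the pricing above; the function name, the cluster sizes, and the `container_per_core` parameter are hypothetical, and because Container Edition pricing has not been set, the sketch refuses to guess at it.

```python
# Per-core annual prices quoted in the article (USD).
BASE_PER_CORE = 100  # Grid Engine base license, support contract included
URB_PER_CORE = 50    # Universal Request Broker add-on


def annual_license_cost(total_cores, urb_cores=0,
                        container_cores=0, container_per_core=None):
    """Estimate the yearly bill when add-ons cover only part of a cluster.

    container_per_core is a placeholder: Univa has not announced a price,
    so the caller must supply one explicitly.
    """
    if urb_cores > total_cores or container_cores > total_cores:
        raise ValueError("add-on cores cannot exceed total cores")
    cost = total_cores * BASE_PER_CORE + urb_cores * URB_PER_CORE
    if container_cores:
        if container_per_core is None:
            raise ValueError("Container Edition pricing has not been announced")
        cost += container_cores * container_per_core
    return cost


# Hypothetical 10,000-core cluster with Mesos jobs confined to 2,000 cores.
print(annual_license_cost(10_000, urb_cores=2_000))  # 1100000
```

In this made-up case the add-on raises the bill by 10 percent rather than the 50 percent it would add if URB were licensed across every core, which is the reason for licensing only the cores where Mesos or Docker jobs actually run.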

Univa is not the first of the HPC-class workload schedulers to support Docker. As we reported in the spring, IBM supports Docker containers on top of its Platform LSF scheduler.

The thing that Univa is stressing that it can bring immediately to the table is scale. As we reported this week, the Kubernetes 1.0 container controller can scale to around 100 nodes and launch maybe one container per second, and the members of the Cloud Native group are working to extend that to 1,000 nodes. Docker Swarm, the container management system from the eponymous creator of Docker, might be running on tens of thousands of clusters out there in the world, but the data that Tyreman has seen says the average cluster size is 42 nodes. This is not exactly enterprise scale.

Tyreman tells The Next Platform that Grid Engine already can launch 1,000 containers per second, and as for scalability limits, the ceiling is not in Grid Engine but in how fast you can get data out of the Docker repository and how fast you can move Docker images through the network to the servers. As for node count, Grid Engine has customers with fewer than a hundred nodes all the way up to over 20,000 server nodes in the installed base, which numbers over 10,000 installations worldwide including those running the open source version. Univa has more than 400 customers that use the supported version of its Grid Engine and extensions.

This is by no means the end of the Docker story for Grid Engine. Univa has something else up its sleeve, and it is only hinting at what that might be.

“We are not going to compete with Kubernetes,” Tyreman says right off the bat, and we quipped that perhaps Univa would compete as Kubernetes, which got a laugh. “We all know that Google’s enterprise experience is limited, so check box there. Kubernetes has limitations at only 100 nodes and given its dependencies. We are actually participating quite actively in the projects at the Cloud Native Computing Foundation scheduling and scale special interest groups and a couple of others. What we have done already we are going to bring to Kubernetes. That is our intent, although we have not put our product plans out yet. We bring to this group experience with enterprise at scale with complex, messy, heterogeneous environments – often with different architectures and with a mix of bare metal and virtualization – that we drive high utilization and efficiencies from.”

The way to think about this future unnamed product – we called it K Engine for fun – is that a follow-on product supporting Docker containers from Univa will take some of the scheduling smarts of Grid Engine and get them into Kubernetes to scale it and speed it up. Precisely how this happens has not been established yet, and it could involve the licensing of some intellectual property to the Cloud Native Computing Foundation. “We are not sure if that is what we will end up doing – we are still in discussions,” says Tyreman.

And unlike its path with Mesos, it doesn’t sound like Univa will just be supporting the Kubernetes APIs on top of Grid Engine.

“In the future, you will see a Kubernetes product that we will bring to the market, and in that case, we will not be building Kubernetes on the back of Grid Engine, but the reverse. We will take our orchestration and plug it into Kubernetes. We already could support Kubernetes the way Mesosphere does, on top of Mesos, but that is not the right way – it is upside down. From an architectural perspective, Kubernetes is supposed to be making decisions where to place containers, and orchestrating Kubernetes is going to be a key part of our offering in the future.”
