The Haswell Xeon E5 processor ramp is continuing apace, and Amazon Web Services is putting out a new variant of its EC2 compute instances that employ custom versions of these Intel processors. The new M4 instances are configured a bit differently from their predecessors and also have more computing oomph than you would expect from the Haswell Xeon E5 processors, which is interesting indeed.

The M4 instances are kickers to the M3 series of general purpose EC2 instances that the cloud computing giant last rolled out starting in October 2012 with fat instances and fleshed out further with medium and large instances in January 2014. Amazon doesn’t provide a lot of specifics about its custom servers or the tweaked versions of the processors that it uses inside of its machines, but the company did say that the M4 instances are based on a non-standard Xeon chip from Intel called the E5-2676 v3, which runs at 2.4 GHz and can Turbo Boost as far as 3 GHz if there is enough thermal headroom as the chip is running real workloads. Amazon likes to stick with as few chip SKUs as possible to eliminate variables from its support matrix across its massive infrastructure. The cache size and core count for this custom Xeon E5 part were not revealed.

The C4 instances announced last fall, which also use Haswell Xeon E5 processors, were based on a custom Xeon E5-2666 v3 processor that ran at 2.9 GHz with Turbo Boost up to 3.5 GHz; the core count was not revealed, but everyone assumed it was an 18-core part. The M3 instances were based on a standard “Ivy Bridge” Xeon E5-2680 v2 chip with ten cores and twenty threads per socket. To pick its processor, Amazon has to balance the number of threads, the clock speed, the number of sockets, and the variations and sizes of instances it wants to be able to offer on each machine. This is a tricky business to be sure, and it is not always as obvious as picking the chip with the most cores because Intel’s pricing is certainly not linear on the Xeons – not even for hyperscalers and cloud builders. The more cores and L3 cache you want, the more expensive the chips become.

What you can say about EC2 is that, generally speaking, the amount of compute per vCPU is trending up a little within an EC2 class, such as M series for general purpose or C series for compute-optimized workloads.

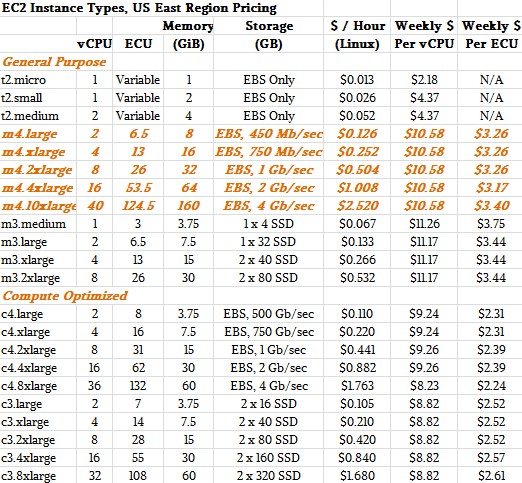

Here is how the new EC2 images, shown in Platform orange, stack up against the earlier M3 instances and the C3 and C4 compute-optimized instances:

In the table above, price/performance comparisons are based on calculating the cost of running an instance for a solid week. (An hourly rate is too small a unit on which to calculate relative values.) Prices are shown for the US East region in Northern Virginia running the stock Linux that Amazon provides. Adding Red Hat or SUSE Linux or Microsoft Windows will cost more.
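For readers who want to redo this kind of comparison themselves, here is a minimal Python sketch of the weekly-cost arithmetic described above. The hourly rate and ECU rating in it are made-up placeholders, not figures from the table, and the function names are our own.

```python
# Sketch of the weekly price/performance arithmetic.
# All input values below are hypothetical placeholders, not AWS prices.

HOURS_PER_WEEK = 24 * 7  # a solid week of runtime: 168 hours


def weekly_cost(hourly_rate: float) -> float:
    """Cost of running one instance around the clock for a week."""
    return hourly_rate * HOURS_PER_WEEK


def weekly_cost_per_ecu(hourly_rate: float, ecus: float) -> float:
    """Weekly cost divided by the instance's ECU rating."""
    if ecus <= 0:
        raise ValueError("ECU rating must be positive")
    return weekly_cost(hourly_rate) / ecus


if __name__ == "__main__":
    example_hourly_rate = 0.10  # placeholder, dollars per hour
    example_ecus = 5.0          # placeholder ECU rating
    print(f"weekly cost: ${weekly_cost(example_hourly_rate):.2f}")
    print(f"weekly cost per ECU: "
          f"${weekly_cost_per_ecu(example_hourly_rate, example_ecus):.2f}")
```

Plugging in the real hourly rate and ECU rating for any two instance types gives the per-ECU figures needed to rank them.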

There are a few things to note. First of all, AWS is not putting local storage inside of the M4 nodes as it did with the M3 and M1 instances. Amazon wants customers to use its Elastic Block Store (EBS) service for persistent storage, which radically simplifies the server infrastructure. Basically, given remote boot of the operating system and hypervisor, all a server node needs is CPUs, memory, and a network card. EBS is now fast enough and presumably cheap enough for this to make sense, and now Amazon can grow its compute farm separately from its storage farm. (Google Storage is similarly abstracted away from compute on Google Compute Engine, with a mix of flash and disk and sophisticated file system software allowing Google to dial performance of the network and durability of the data up and down, along with prices.)

With the M4 instances, EBS performance peaks out at 4 Gb/sec of throughput, and the Ethernet network piping into the instance tops out at 10 Gb/sec. As you go down the M4 instance sizes, the network bandwidth drops and so does the EBS throughput. Interestingly, if you do the math, the EBS bandwidth per vCPU goes down as the instance size grows, and so does the storage bandwidth per ECU, which is Amazon’s relative measure of performance. (An EC2 Compute Unit, or ECU, is equivalent to the processing capacity of an Opteron core from AMD or a Xeon core from Intel running at around 1 GHz to 1.2 GHz in 2007 or a Xeon core from Intel running at 1.7 GHz in 2006. When Amazon launched, Intel’s cores clocked higher, but there were fewer of them on the die.)
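The "do the math" step is just a pair of divisions, sketched below in Python. The two instance sizes are hypothetical stand-ins: only the 4 Gb/sec peak (4,000 Mb/sec) comes from the text above, and the vCPU counts, ECU ratings, and the smaller size's throughput are assumptions chosen for illustration.

```python
# Sketch: EBS bandwidth per vCPU and per ECU for two example instance sizes.
# The instance specs are illustrative assumptions, not Amazon's published table.
from dataclasses import dataclass


@dataclass(frozen=True)
class InstanceSize:
    name: str
    vcpus: int
    ecus: float
    ebs_mbps: float  # EBS throughput in megabits per second

    def ebs_per_vcpu(self) -> float:
        return self.ebs_mbps / self.vcpus

    def ebs_per_ecu(self) -> float:
        return self.ebs_mbps / self.ecus


sizes = [
    InstanceSize("example-small", vcpus=2, ecus=6.0, ebs_mbps=500.0),
    InstanceSize("example-big", vcpus=40, ecus=120.0, ebs_mbps=4000.0),
]

if __name__ == "__main__":
    for size in sizes:
        print(f"{size.name}: {size.ebs_per_vcpu():.1f} Mb/sec per vCPU, "
              f"{size.ebs_per_ecu():.1f} Mb/sec per ECU")
```

With these placeholder numbers, the larger size gets less EBS bandwidth per vCPU and per ECU than the smaller one, which is the pattern described above.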

On a per-ECU basis, the M4 instances are 5.3 percent cheaper than the M3 instances they replace, and that is after a 5 percent cut in price that Amazon announced on the M3 and C4 instances in the US East (Northern Virginia), US West (Oregon), Europe (Ireland), Europe (Frankfurt), Asia Pacific (Tokyo), and Asia Pacific (Sydney) regions.
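A percentage like that comes from comparing two cost-per-ECU figures. Here is a small Python sketch of the calculation; the two input costs are placeholders picked to show a gap of roughly that size, not the actual M3 and M4 numbers.

```python
# Sketch: how much cheaper a new cost-per-ECU figure is than an old one.
# The example inputs are hypothetical placeholders, not real M3/M4 costs.

def percent_cheaper(old_cost: float, new_cost: float) -> float:
    """Percentage by which new_cost undercuts old_cost."""
    if old_cost <= 0:
        raise ValueError("old_cost must be positive")
    return (old_cost - new_cost) / old_cost * 100.0


if __name__ == "__main__":
    old_cost_per_ecu = 1.000  # placeholder
    new_cost_per_ecu = 0.947  # placeholder
    saving = percent_cheaper(old_cost_per_ecu, new_cost_per_ecu)
    print(f"{saving:.1f} percent cheaper")
```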

The M4 instances include Amazon’s Enhanced Networking, which makes use of Single Root I/O Virtualization (SR-IOV) in Ethernet networking cards. According to AWS, this reduces latencies between instances in a cluster by 50 percent or more, cuts back on network and operating system jitter, and delivers up to four times the packet processing rate of EC2 instances without enhanced networking. On the m4.10xlarge instance, Amazon will also allow customers to change the C state and P state power control mechanisms in the Intel Xeon processors to allow for more sophisticated thermal management.

The new EC2 instance types are available through spot, on-demand, and reserved contracts, and can be fired up in the US East (Northern Virginia), US West (Northern California), US West (Oregon), Europe (Ireland), Europe (Frankfurt), Asia Pacific (Singapore), Asia Pacific (Sydney), and Asia Pacific (Tokyo) regions.

If history is any guide, it won’t be long before Amazon is firing up the C5 instances with future “Broadwell” Xeon E5 processors – probably this fall – and then about this time next year these Broadwell Xeon E5 chips will likely make their way into M5 instances.
