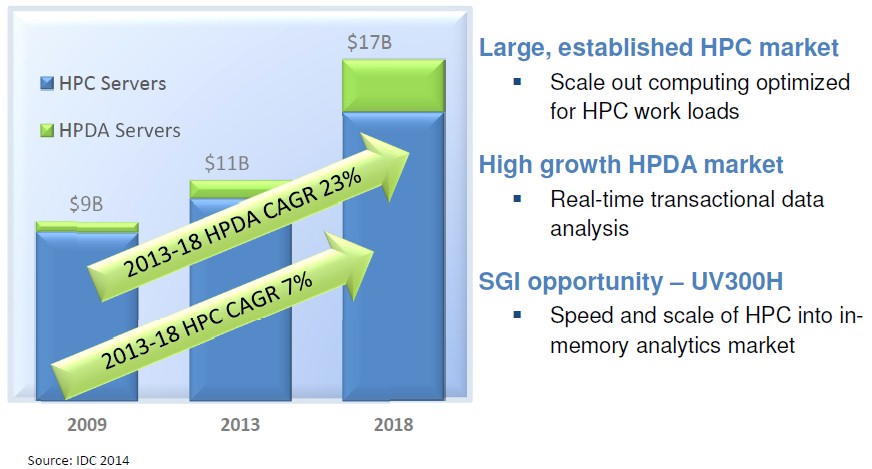

The high performance computing market is about as tough as they come. The technology is complex and expensive to develop, and the customers have very high expectations and are often on tight, even if large, budgets. And for the most part, they do not pay for their systems until they have passed qualification testing. The good news is that the hardware and software that were developed for supercomputers are now applicable for in-memory processing and other data analytics workloads, so companies like SGI can expand into adjacent markets and try to grow their businesses beyond traditional supercomputing.

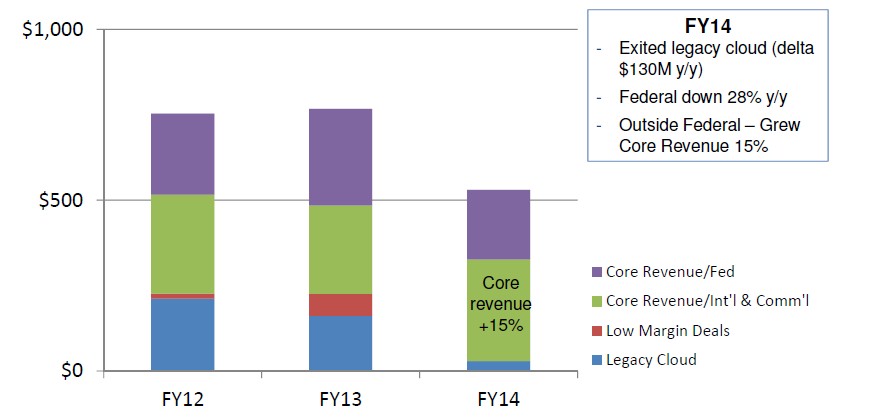

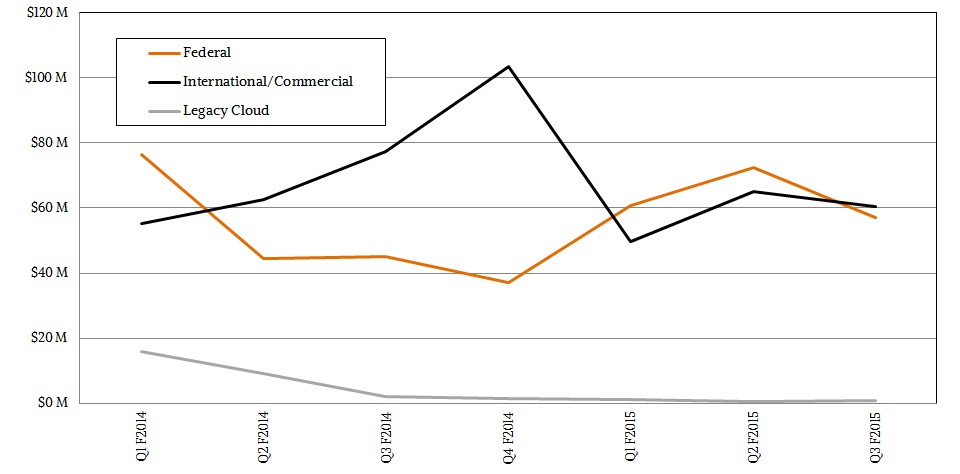

This, of course, is precisely the plan at SGI, which has been winding down its legacy business of selling high-density – and yet not particularly profitable – systems to hyperscale and cloud datacenter operators as it moves into the analytics and in-memory arenas.

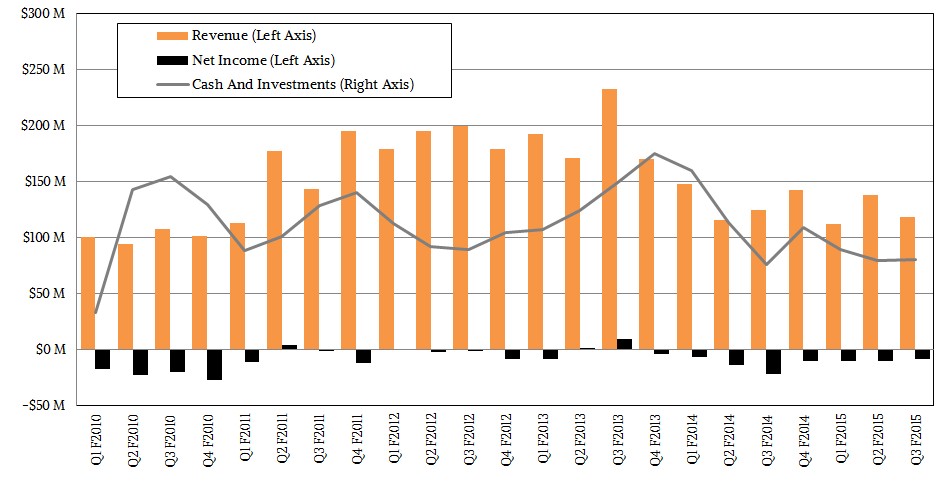

SGI could use this transition to move a bit faster, but it is the nature of enterprise customers, who are conservative, to move a bit more slowly than either HPC or hyperscale shops. In the third quarter of fiscal 2015, which ended in March, SGI’s revenues were $118.5 million, down 4.6 percent. The company shrank its losses, however, booking a net loss of $8.8 million, a significant improvement over the $21.9 million loss it had in Q3 of fiscal 2014. SGI kept tight controls on research, development, sales, and marketing costs, which all dropped much faster than the revenue dip.
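For readers who want to check the arithmetic behind those figures, here is a small sketch. It assumes the 4.6 percent decline is measured against the year-ago quarter (Q3 of fiscal 2014), which the numbers imply but SGI's statement as quoted here does not spell out; the variable names are ours.

```python
# Figures quoted in the article, in millions of US dollars.
q3_fy2015_revenue = 118.5
revenue_decline = 0.046       # 4.6 percent drop
q3_fy2015_net_loss = 8.8
q3_fy2014_net_loss = 21.9

# Assumption: the decline is year over year, so the prior-year revenue
# is the current revenue divided by (1 - decline).
implied_q3_fy2014_revenue = q3_fy2015_revenue / (1 - revenue_decline)

# Fraction by which the net loss shrank between the two quarters.
loss_reduction = 1 - q3_fy2015_net_loss / q3_fy2014_net_loss

print(f"Implied Q3 FY2014 revenue: ${implied_q3_fy2014_revenue:.1f} million")
print(f"Net loss reduction: {loss_reduction:.1%}")
```

Under that assumption, the year-ago quarter works out to a little over $124 million in revenue, and the net loss shrank by roughly 60 percent.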

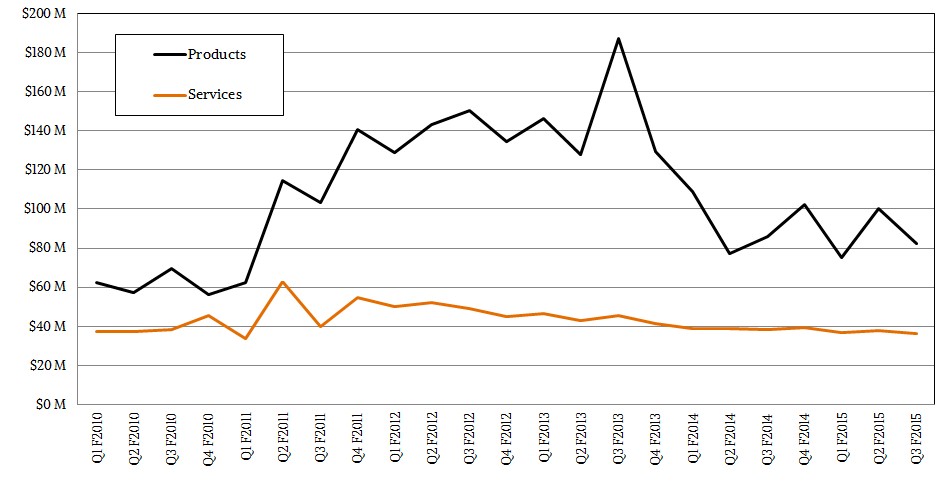

SGI broke down product sales by compute and storage for two years, but it stopped doing that a few quarters back. Compute generally averaged around 85 percent of revenues, with storage bringing in the other 15 percent; the slices of servers and storage wiggled as much as ten points in any given quarter depending on the deals that SGI signed. About 70 percent of SGI’s revenues in Q3 fiscal 2015 came from products, with the other 30 percent coming from services, a more or less normal distribution for the company.

As The Next Platform recently reported, one of the key new systems that are part of SGI’s increasing focus on enterprises is the “Ultra Violet” UV 300 series of machines, which create a tightly coupled shared memory machine with 32 processor sockets and 24 TB of capacity (soon to be 48 TB with fatter memory sticks). This UV 300 does not have some of the issues that SGI’s larger-scale NUMA UV 2000 machines have, which make them difficult to program even if they do scale further. The UV 300 machines are based on the NUMAlink 7 interconnect, which will eventually be deployed in high-end UV 3000 follow-ons to the UV 2000. (The UV 3000s are due sometime in the September quarter, it looks like.)

SGI’s transition away from the cloud business, which largely came from its Rackable Systems unit, to in-memory transaction processing and various kinds of data analytics, which run on a mix of ICE X clusters and UV shared memory systems, was not easy or painless. The company began unwinding this cloud business, which had a run rate of about $130 million a year and which had marquee customers like Amazon and Microsoft and others who sometimes comprised a very large portion of its quarterly revenues. That top line was hard to walk away from, but SGI correctly saw that these companies would be moving to custom machinery made largely by Asian original design manufacturers (ODMs) or, in the case of Microsoft, by Hewlett-Packard and Dell, both eager to preserve their shipments and revenues even if they can’t be making much, if any, margin on their sales to Microsoft. The unfortunate thing for SGI is that the Federal government tightened spending shortly after SGI decided to back away from the hyperscale/cloud infrastructure space in the summer of 2013.

This is not the first time that SGI, in several of its incarnations, has tried to move from HPC into the enterprise space. But the enterprise market, wrestling with epic amounts of data of myriad types and under pressure to make intelligent use of it, may now be more ready to accept SGI systems to run workloads other than traditional simulation and modeling.

This is particularly true with UV machines now running standard Linux from SUSE Linux and Red Hat on standard Xeon processors from Intel, and with the UV 300 presenting a flat memory space to applications that means the operating system and applications do not have to be tuned to cope with memory management issues that affect all large-scale NUMA systems. The flatter memory in the UV 300 systems means code just runs on the machines as it does on systems with fewer sockets and less memory – but it can handle larger datasets and potentially also run jobs faster. And, it is likely that SGI will use the combination of the KVM hypervisor and the OpenStack cloud controller to bring Windows support back onto the UV platform, as we talked about recently, although this is still a bit of a science project at SGI and the company has not yet divulged its plans for Windows support. Suffice it to say that certifying KVM gives SGI a chance to support Windows and its applications without having to certify Windows and those applications individually.

At the moment, SGI is putting a lot of effort into tuning up the UV 300 series for SAP’s HANA in-memory database because it wants to hitch its wagon to the transition to HANA underneath SAP’s various business applications from relational databases from Microsoft, IBM, and Oracle. As of January of this year, the UV for SAP HANA system, also known as the UV 300H, was certified to run across four and eight sockets, with 3 TB or 6 TB of main memory across those sockets, respectively. One customer, Sigma Aldrich, had bought two dozen of the systems to distribute across its operations with an aggregate of more than 50 TB of memory to run its HANA workloads. Earlier this year, SGI shipped a 16-socket machine with 12 TB of memory to the SAP Innovation Lab in Silicon Valley and two 32-socket machines with 24 TB of memory to SAP’s headquarters in Walldorf, Germany for testing. In January, SGI had nine proofs of concept underway on the UV 300H – five with customers and four with system integrators.

Memory expansion is a big deal for in-memory databases. With in-memory systems, once you run out of sockets, you run out of memory, and in some cases, to get a big memory footprint, it makes more economic sense to use skinnier memory sticks across a larger number of memory slots than to use fatter – and wickedly more expensive – memory sticks. This is why being able to scale up beyond four sockets is important for the HANA workload.
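The socket-to-memory relationship can be made concrete with a short sketch using the UV 300 and UV 300H configurations quoted in this article. The `sockets_needed` helper is hypothetical and assumes memory is populated evenly across sockets; real machines ship in fixed socket counts, so treat the result as a lower bound rather than a sizing tool.

```python
import math

# (sockets, memory in TB) pairs quoted in the article.
configs = [(4, 3), (8, 6), (16, 12), (32, 24)]

# Memory per socket for each quoted configuration.
tb_per_socket = {sockets: mem_tb / sockets for sockets, mem_tb in configs}

def sockets_needed(dataset_tb, per_socket_tb=0.75):
    """Hypothetical helper: fewest sockets whose combined memory holds dataset_tb."""
    return math.ceil(dataset_tb / per_socket_tb)

for sockets, per_socket in tb_per_socket.items():
    print(f"{sockets:>2} sockets: {per_socket} TB per socket")

# With the current memory sticks, a 12 TB footprint needs 16 sockets;
# doubling the per-socket capacity (the planned 48 TB across 32 sockets)
# would halve that.
print(sockets_needed(12))
print(sockets_needed(12, per_socket_tb=1.5))
```

Every quoted configuration works out to the same 0.75 TB per socket, which is why the only ways to grow the memory footprint are more sockets or fatter memory sticks.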

Moreover, while it is fine to cluster HANA servers for data warehouse applications (SAP does this with its own data warehouses, by the way), for performance reasons, on transaction processing systems it is much better to have a scale-up box. SAP and SGI have estimated that somewhere between 5 and 10 percent of the customers using HANA will want something fatter than a four-socket box, and this translates into an opportunity of around $230 million in 2015, around $700 million in 2017, and north of $1 billion in 2018. SAP has around 291,000 customers worldwide running its applications, so even a small share of them needing big iron can radically boost SGI’s UV sales.

Now you understand why SGI is so focused on SAP HANA for its UV 300 systems.

This week, SGI announced that it had received certification from SAP to run its HANA in-memory database on UV 300H machines with 12 and 16 sockets using Intel’s “Ivy Bridge-EX” Xeon E7 8890 v2 processors. On a conference call with Wall Street analysts going over SGI’s financial results for the third quarter, CEO Jorge Titinger said that through the end of that quarter SGI had deployed UV 300H machines at thirteen customers and system integrators, and one was a US Federal agency that was testing a move to convert a 60 TB Oracle database system to a single instance of UV running HANA. “Initial feedback has been very positive and the performance of the sixteen-socket system is at the top of its class,” Titinger said, adding that the next step was to certify the full 32-socket UV 300H to put it “well ahead of the competition.”

That competition, for the most part, is Hewlett-Packard’s “Project Kraken” Superdome X system, which only scales to sixteen E7 v2 processors itself. Neither company has said when they plan to upgrade their big NUMA boxes to the forthcoming “Haswell-EX” Xeon E7 v3 chips, but hopefully it will not take long.

On the traditional HPC and data analytics front, the total addressable market is much larger, of course, and for many workloads, a shared memory system with immense memory capacity is preferred over a clustered system. (As SGI chief technology officer, Eng Lim Goh, has explained to us in the past, a cluster with loosely coupled memory is fine when you are sifting through data to find needles in haystacks, but you need a shared memory system for applications where you don’t even know you have needles and hay, the relationship between them, and you are not sure what you are looking for.)

SGI does not break out its revenues by product or market segment, but on the call Titinger said that the company had the strongest bookings in the March quarter that it had seen in more than three years. SGI grew its sales in the Federal sector, which includes the Department of Defense as well as various civilian agencies like NASA and the US Postal Service and of course various intelligence agencies that rarely talk about what they are up to.

SGI captured several large deals in the quarter, ranging in size from $7 million to $35 million, Titinger said, with some expansion into the aviation and telecommunications industries. All told, SGI had $250 million in large deals so far in fiscal 2015, and the average deal size was $16 million, more than twice the company’s historical average. HPC projects accounted for two thirds of the bookings in SGI’s fiscal third quarter, and big deals will comprise about half of SGI’s revenues in fiscal 2015. One of the big deals, of course, was the 4.4 petaflops upgrade of the Pangea 2 supercomputer at oil giant Total, which remains the largest commercial supercomputer in the world. The UK Atomic Weapons Establishment and Japan Atomic Energy Agency both inked deals for ICE clusters, the latter for a 2.4 petaflops ICE-X cluster and the former for a new ICE-XA system of unknown size.

Titinger warned Wall Street that one of the big deals it was working on with the US Department of Defense will be delayed a quarter and push out into the first half of fiscal 2016. Some of the revenue hit in the third quarter is no doubt due to the expectation of new UV 3000 systems later this year, and Titinger conceded as much on the call. This is a perfectly normal pause that affects all IT suppliers. The important thing is how SGI will marry its NUMAlink 7 interconnect with Intel’s forthcoming Haswell-EX Xeon E7 v3 chips to get more compute and more memory on large scale simulation, modeling, and analytics workloads.

Looking ahead, SGI says to expect sales in the June quarter, its final one for fiscal 2015, to be in the range of $130 million to $145 million, with a net loss of between $4 million and $8 million. (That loss is on a non-GAAP basis, which probably means it will be deeper as reported, but you can never say for sure until a company reports its figures.) For the full year, SGI expects revenues to be in the range of $500 million to $515 million, and while SGI did not say this in its forecasts, with losses in the first three quarters and a non-GAAP loss coming in Q4, the company will very likely report a loss for fiscal 2015.
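As a rough cross-check of that guidance, the sketch below subtracts the June quarter range from the full-year range. This is our own inference from the two ranges quoted above, not a figure SGI reported, and it assumes the low and high ends of the two ranges correspond to each other.

```python
# Guidance ranges quoted in the article, in millions of US dollars.
q4_low, q4_high = 130.0, 145.0
fy_low, fy_high = 500.0, 515.0

# Assumption: the low end of the full-year range pairs with the low end
# of the Q4 range, and likewise for the high ends.
implied_first_three_quarters_low = fy_low - q4_low
implied_first_three_quarters_high = fy_high - q4_high

print(implied_first_three_quarters_low, implied_first_three_quarters_high)
```

Both ends give the same implied figure for the first three quarters, which suggests the full-year range is simply the revenue already booked plus the June quarter forecast.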

Customers don’t invest in the stocks of their vendors, just like we at The Next Platform do not invest in the companies that we write about. The thing to watch with vendors who are in the HPC, hyperscale, and high-end markets is how big the losses are relative to the cash a company has in the bank and to the cash flow the company has to replenish reserves. SGI has to bide its time and ride out the transition away from hyperscale and towards HANA in-memory and analytics while continuing to invest in its core HPC market. And if that means taking a few million dollars in hits each quarter, this is frankly not a big deal in the grand scheme of things.

If you look at the trailing twelve months, SGI’s revenues are down, as expected, but it has cut its losses a bit, and if it can manage to stack up its UV 3000 upgrades and expand with SAP HANA in fiscal 2016, it will not take much to grow revenues and get profitable. SGI is not making any such forecasts, of course, but we hope this is precisely what happens for the good of SGI and its current and future customers. The IT market needs vendors who are innovating beyond the basic technology supplied by processor vendors like Intel, AMD, IBM, Fujitsu, Oracle, and the rising ARM collective. Very few companies can invent their own alternatives like Google, Facebook, and Amazon do, after all.

“…This is not the first time that SGI, in several of its incarnations, has tried to move from HPC into the enterprise space….”

Finally SGI might be able to run enterprise business workloads. SGI UV2000 scale out server with 256-sockets can only run HPC number crunching workloads, you need a scale-up server to be able to run enterprise business workloads. This UV3000 with 32-sockets might be able to compete with the big Unix boxes in the enterprise market.